Maybe it was just me who idiotically named the file t5xxl_enconly, hoping to avoid downloading it, which resulted in it being overwritten every time

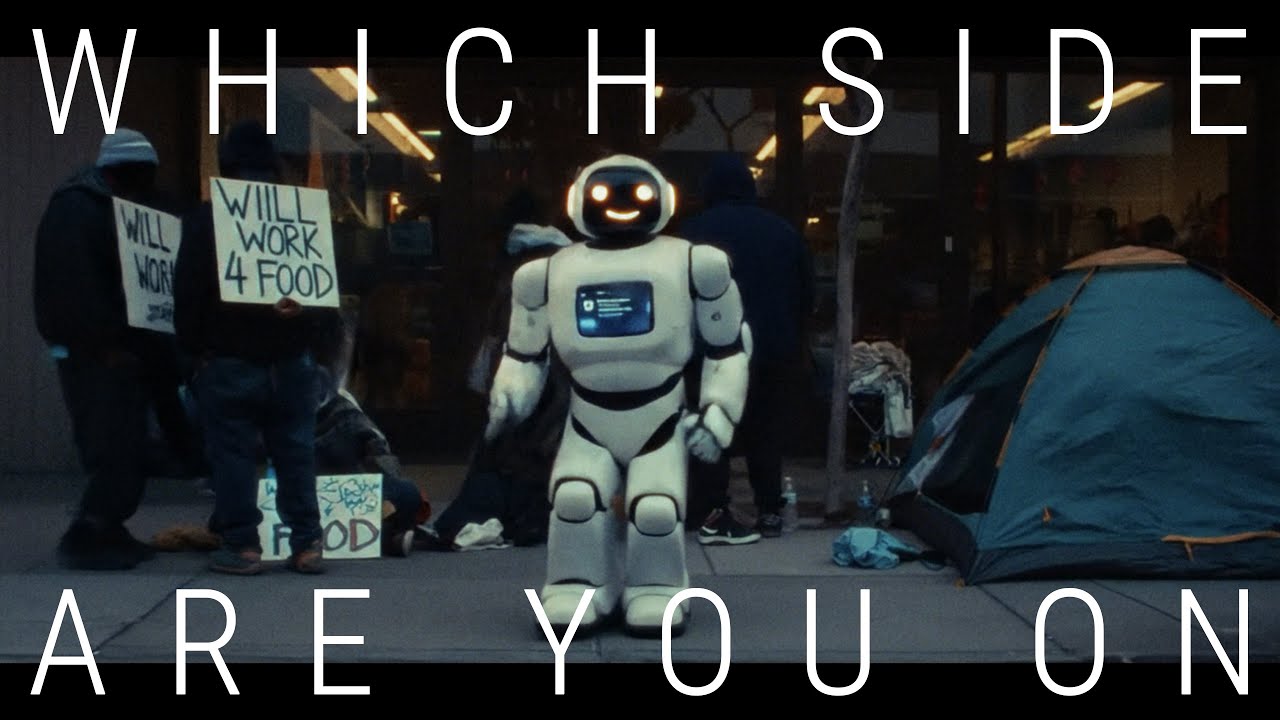

I need suggestions for good lip sync
