Welcome to the official Cloudflare Developers server. Here you can ask for help and stay updated with the latest news.

83,498 Members


welcome-and-rules. It creates confusion for people trying to help you and doesn't get your issue or question solved any faster.

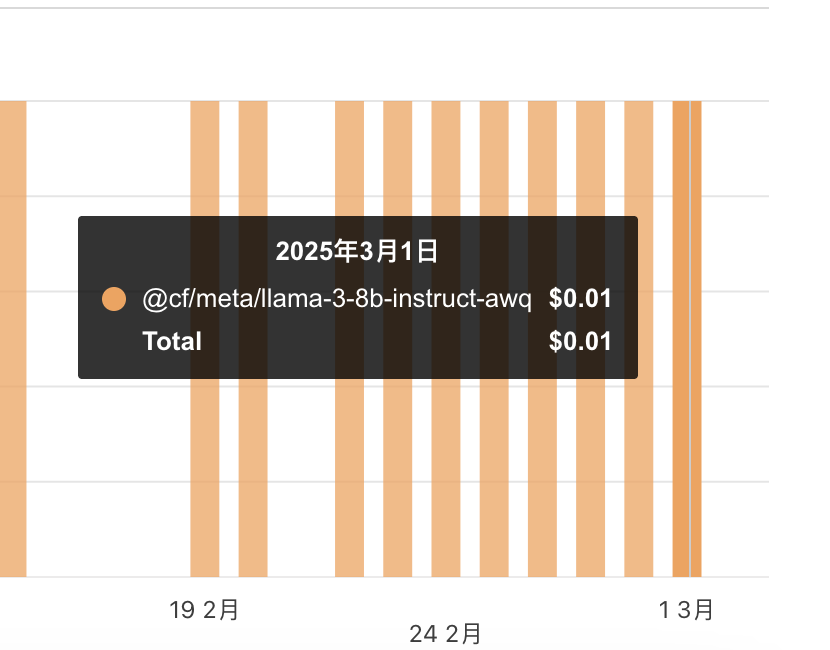

`llama-guard-3-8b`, `system`, `tool`, `pyannote`

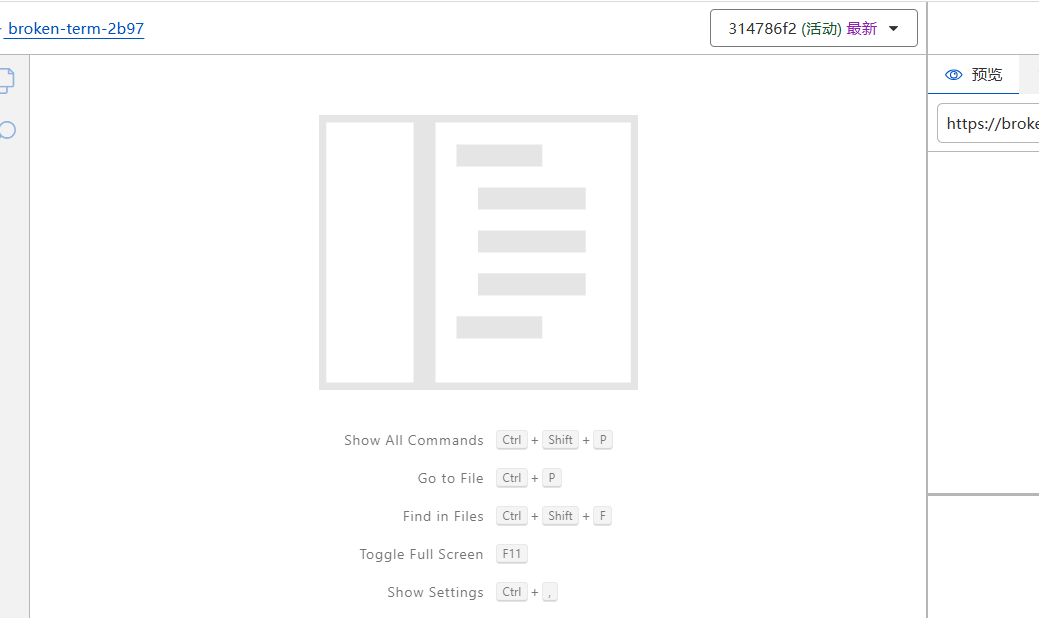

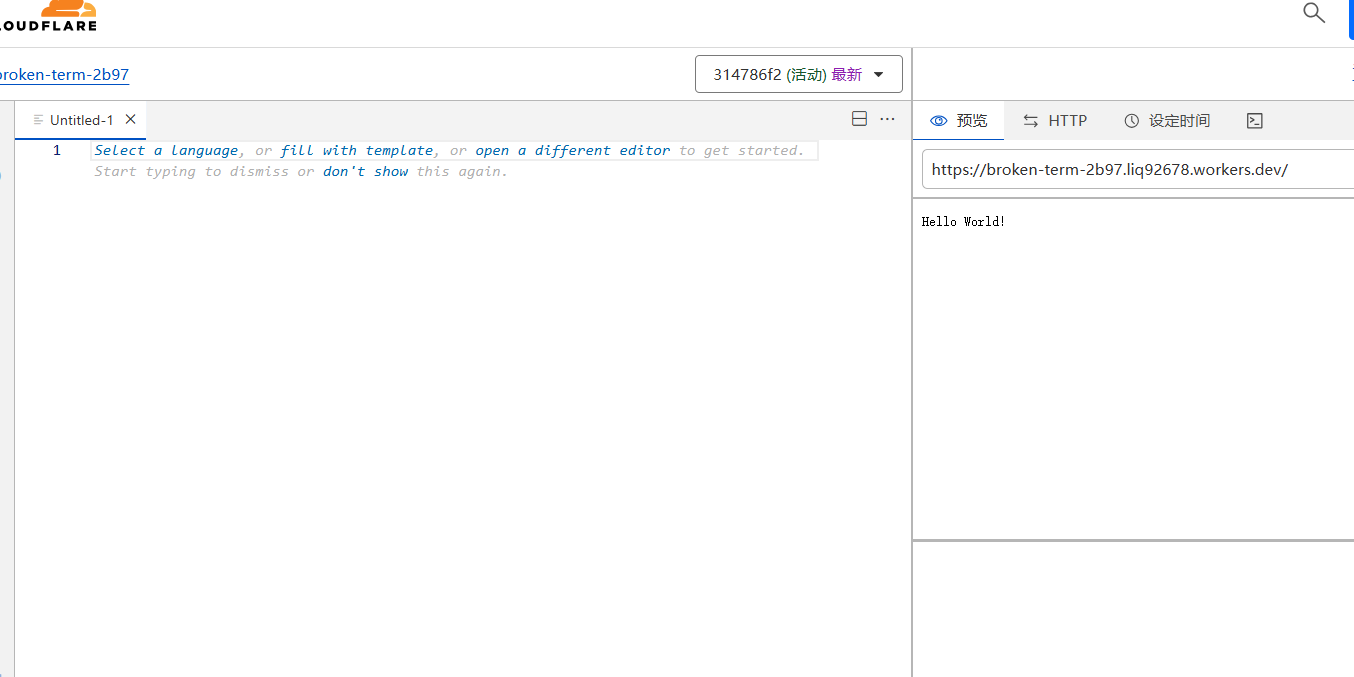

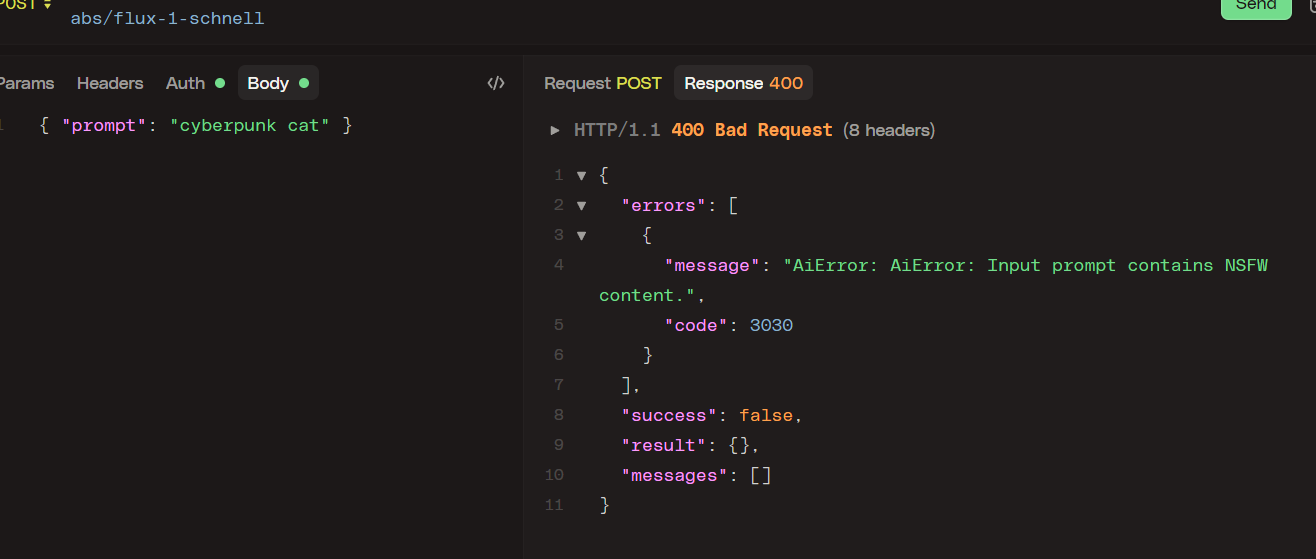

`guidance`, `num_steps`, `guidance`, `ai/run`, `@cf/deepseek-ai/deepseek-r1-distill-qwen-32b`

workerd/server/workerd-api.c++:759: error: wrapped binding module can't be resolved (internal modules only); moduleName = miniflare-internal:wrapped:__WRANGLER_EXTERNAL_AI_WORKER

workerd/jsg/util.c++:331: error: e = workerd/server/workerd-api.c++:789: failed: expected !value.IsEmpty(); global did not produce v8::Value