Hello, is inpainting on Workers AI currently working?

Inpainting inputs (`image`, `mask`) appear for several models, but the only model that does inpainting is `@cf/runwayml/stable-diffusion-v1-5-inpainting`. Pass both `image` and `mask` when calling it. The format is `mask: [...new Uint8Array(await maskImage.arrayBuffer())]`, where `maskImage` could be the result of e.g. `await fetch(/* mask image url */)`.
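A minimal sketch of that call as plain JavaScript for a Worker. It assumes the Workers AI binding is passed in as `ai` (e.g. `env.AI`) and that `prompt`, `imageUrl` and `maskUrl` are hypothetical inputs; the helper names are illustrative, not part of any API. Both `image` and `mask` use the same `[...new Uint8Array(buffer)]` form as the answer above.

```javascript
// Sketch only: helper names and the `ai` parameter (e.g. env.AI) are assumptions.
const INPAINTING_MODEL = "@cf/runwayml/stable-diffusion-v1-5-inpainting";

// Convert raw bytes into a plain array of numbers: [...new Uint8Array(buffer)].
function toByteArray(buffer) {
  return [...new Uint8Array(buffer)];
}

// Build the model input: the source image and the mask are both passed.
function buildInpaintingInput(prompt, imageBuffer, maskBuffer) {
  return {
    prompt,
    image: toByteArray(imageBuffer),
    mask: toByteArray(maskBuffer),
  };
}

// Fetch the image and the mask, then run the model through the AI binding.
async function inpaint(ai, prompt, imageUrl, maskUrl) {
  const [imageRes, maskRes] = await Promise.all([fetch(imageUrl), fetch(maskUrl)]);
  if (!imageRes.ok || !maskRes.ok) {
    throw new Error("failed to fetch image or mask");
  }
  const input = buildInpaintingInput(
    prompt,
    await imageRes.arrayBuffer(),
    await maskRes.arrayBuffer(),
  );
  return ai.run(INPAINTING_MODEL, input);
}
```

In a Worker's `fetch` handler this would be something like `const png = await inpaint(env.AI, prompt, imageUrl, maskUrl)`, with the result returned as a `Response` body; Cloudflare's image-model examples serve that output with `content-type: image/png`.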

I'm using `@cf/openai/whisper-large-v3-turbo` to convert audio into text. It works fine when recording on a Pixel 9 in AAC format, but fails when recording on an older OnePlus 7T with AAC (I'm using Flutter to build the app). Using the AMR-WB format works fine, though. Also, all the AAC formats work fine when given to Google Gemini Flash/Pro models.

I tried both the `MPEG-4 AAC Low complexity` and `MPEG-4 High Efficiency AAC` formats, with identical results.

`ffprobe` says this about the one recorded on the OnePlus:

`[aac @ 0x560e66ce38c0] Number of scalefactor bands in group (46) exceeds limit (43).`

I'm trying to use the `@cf/baai/bge-m3` model, but I keep getting the error below. Previously I was using the `@cf/baai/bge-base-en-v1.5` model and everything was just fine.

Responses from `@cf/deepseek-ai/deepseek-r1-distill-qwen-32b` are missing the opening `<think>` tag.

This is due to a quirk in the prompt template, which forces the `<think>` tag in order to make the model think through its response, but which results in this behavior.
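If downstream code expects both tags, one workaround is to treat everything before `</think>` as reasoning. The sketch below splits at the first `</think>` only; `restoreThinkTag` and `splitReasoning` are hypothetical helper names, not part of Workers AI.

```javascript
// Hypothetical helpers for deepseek-r1-distill output whose opening <think>
// tag was emitted by the prompt template rather than by the model.

// Prepend the missing <think> tag so parsers that expect a matched pair work.
function restoreThinkTag(text) {
  const hasClose = text.includes("</think>");
  const hasOpen = text.trimStart().startsWith("<think>");
  return hasClose && !hasOpen ? "<think>" + text : text;
}

// Split at the first </think>: before it is reasoning, after it is the answer.
// Works whether or not the opening tag is present.
function splitReasoning(text) {
  const close = "</think>";
  const end = text.indexOf(close);
  if (end === -1) {
    // No closing tag: treat the whole output as the answer.
    return { reasoning: "", answer: text.trim() };
  }
  let reasoning = text.slice(0, end);
  const open = reasoning.indexOf("<think>");
  if (open !== -1) {
    // Drop the opening tag (and anything before it) if it did appear.
    reasoning = reasoning.slice(open + "<think>".length);
  }
  return {
    reasoning: reasoning.trim(),
    answer: text.slice(end + close.length).trim(),
  };
}
```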

I've generated 50-80 images with it, but my Daily Neurons Limits still shows 0 (as in nothing used).

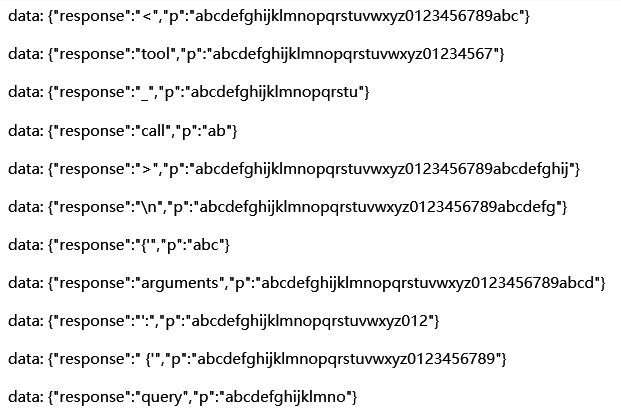

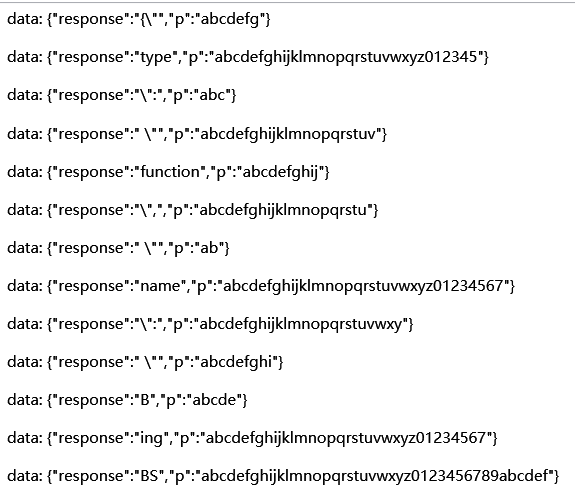

When a tool is called, the non-streaming response comes back as `"response": null, "tool_calls": [...]`. In stream mode, however, each model (e.g. `@hf/nousresearch/hermes-2-pro-mistral-7b` and `@cf/meta/llama-3.3-70b-instruct-fp8-fast`) behaves differently, and the output contains the `<tool_call>` label, as shown in Image 1.

I'd expect tool calls in the `tool_calls` field rather than in `response`.
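Until the stream exposes a `tool_calls` field, a client could rebuild the streamed text and pull the calls out itself. This sketch assumes each event is a `data: {...}` line whose `response` field holds a text delta (as in the sample lines in this thread), that the stream ends with `data: [DONE]`, and that each call is a JSON object wrapped in `<tool_call>...</tool_call>`; the closing tag and JSON shape are assumptions about Hermes-style output. It works on a fully buffered body; a live reader would also need to carry partial lines between chunks.

```javascript
// Sketch only: event format, [DONE] terminator and <tool_call> JSON shape are
// assumptions; helper names are hypothetical.

// Concatenate the "response" deltas from a buffered SSE body.
function collectStreamText(sseBody) {
  let text = "";
  for (const line of sseBody.split("\n")) {
    const trimmed = line.trim();
    if (!trimmed.startsWith("data:")) continue;
    const payload = trimmed.slice("data:".length).trim();
    if (payload === "[DONE]") break;
    const event = JSON.parse(payload);
    // Usage-only events carry an empty "response" string, which adds nothing.
    if (typeof event.response === "string") text += event.response;
  }
  return text;
}

// Pull JSON objects out of <tool_call>...</tool_call> blocks.
function extractToolCalls(text) {
  const calls = [];
  const pattern = /<tool_call>([\s\S]*?)<\/tool_call>/g;
  for (const match of text.matchAll(pattern)) {
    try {
      calls.push(JSON.parse(match[1].trim()));
    } catch {
      // Skip malformed blocks rather than failing the whole response.
    }
  }
  // Text outside the tool-call blocks is the ordinary answer.
  const content = text.replace(pattern, "").trim();
  return { content, toolCalls: calls };
}
```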

data: {"response":""}

data: {"response":""}

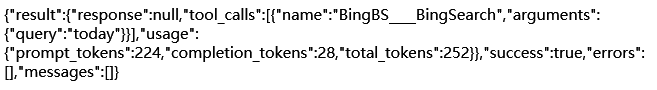

data: {"response":"","usage":{"prompt_tokens":0,"completion_tokens":1,"total_tokens":1}}

{"errors":[{"message":"AiError: AiError: Unknown internal error (8e2b7fc6-c9be-4abd-b5da-82def70cb5be)","code":3001}],"success":false,"result":{},"messages":[]}

{

"result": null,

"success": false,

"errors": [

{

"code": 7010,

"message": "Service unavailable"

}

],

"messages": []

}

$ curl https://api.cloudflare.com/client/v4/accounts/MY_ACCOUNT_ID/ai/run/@cf/mistral/mistral-7b-instruct-v0.2-lora \

-H 'Authorization: Bearer MY_API_TOKEN' \

-d '{

"messages": [{"role": "user", "content": "Hello world"}],

"raw": true,

"lora": "MY_LORA_ID"

}'

{"errors":[{"message":"AiError: AiError: Finetune MY_LORA_ID not found (07af68df-18b6-4a5d-bfeb-3453830da3be)","code":3037}],"success":false,"result":{},"messages":[]}

err : AiError: 5006: Error: required properties at '/' are 'contexts'
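For comparison, here is the same request as the curl command above, sketched in JavaScript with `fetch`. `accountId`, `apiToken` and `loraId` stand in for the `MY_ACCOUNT_ID`, `MY_API_TOKEN` and `MY_LORA_ID` placeholders, and `buildLoraRequest`, `unwrapEnvelope` and `runWithLora` are hypothetical helpers. `unwrapEnvelope` reads the `{ success, errors, result, messages }` envelope seen in the error responses and surfaces the numeric code (such as 3037) on the thrown error.

```javascript
// Sketch only: mirrors the curl example; helper names are hypothetical.
const LORA_BASE_MODEL = "@cf/mistral/mistral-7b-instruct-v0.2-lora";

function buildLoraRequest(accountId, apiToken, loraId, messages) {
  const url =
    `https://api.cloudflare.com/client/v4/accounts/${accountId}/ai/run/${LORA_BASE_MODEL}`;
  return {
    url,
    init: {
      method: "POST",
      headers: {
        Authorization: `Bearer ${apiToken}`,
        "Content-Type": "application/json",
      },
      // Same body as the curl -d payload: messages, raw mode, LoRA id.
      body: JSON.stringify({ messages, raw: true, lora: loraId }),
    },
  };
}

// Return `result` on success; otherwise throw an Error carrying the API code.
function unwrapEnvelope(envelope) {
  if (envelope.success) return envelope.result;
  const first = (envelope.errors && envelope.errors[0]) || {};
  const err = new Error(first.message || "unknown error");
  err.code = first.code;
  throw err;
}

async function runWithLora(accountId, apiToken, loraId, messages) {
  const { url, init } = buildLoraRequest(accountId, apiToken, loraId, messages);
  const res = await fetch(url, init);
  return unwrapEnvelope(await res.json());
}
```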