Nice to meet you

Having a nice weekend?

I need some advice.

Is it possible to access a Cloudflare D1 database from outside of a Worker/Pages project?

?pings

Please do not ping community members for non-moderation reasons. Doing so will not solve your issue faster and will make people less likely to want to help you.

The general recommendation is to deploy a Worker which acts as an HTTP API for your D1 database. You can also just use the barebones Cloudflare HTTP API, but that is slower.
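For reference, a call to the barebones HTTP API can be sketched roughly as below. This is a minimal sketch, assuming the D1 query endpoint takes a POST with a JSON body of `sql` and `params` and a bearer API token; `buildD1QueryRequest` and the placeholder IDs are hypothetical names used only for illustration.

```typescript
// Pieces needed for a fetch() call against the D1 query endpoint.
interface D1QueryRequest {
  url: string;
  init: { method: string; headers: Record<string, string>; body: string };
}

// Hypothetical helper: builds the request but does not send it.
function buildD1QueryRequest(
  accountId: string,
  databaseId: string,
  apiToken: string,
  sql: string,
  params: unknown[] = [],
): D1QueryRequest {
  const url =
    "https://api.cloudflare.com/client/v4/accounts/" +
    encodeURIComponent(accountId) +
    "/d1/database/" +
    encodeURIComponent(databaseId) +
    "/query";
  return {
    url,
    init: {
      method: "POST",
      headers: {
        Authorization: `Bearer ${apiToken}`,
        "Content-Type": "application/json",
      },
      // Bound parameters travel separately from the SQL text.
      body: JSON.stringify({ sql, params }),
    },
  };
}

// Usage (placeholder values, assumed to be supplied by you):
// const { url, init } = buildD1QueryRequest(
//   "ACCOUNT_ID", "DATABASE_ID", "API_TOKEN",
//   "SELECT * FROM users WHERE id = ?", [1]);
// const response = await fetch(url, init);
```

Every such request goes through Cloudflare's central API, which is why it tends to be slower than a Worker sitting next to the database.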

So, I need to access the Worker, not the D1 database, right?

How can I get the url of Cloudflare D1 database, so that I can set the db attribute of Payload CMS backend?

?d1-remote-connections

Accessing D1 from outside workers:

D1 is based on SQLite, which does not natively support remote connections and isn't MySQL/PostgreSQL wire compatible, nor does D1 itself offer that functionality. As such, your options are:

1 -> The Cloudflare API Query Endpoint (https://developers.cloudflare.com/api/operations/cloudflare-d1-query-database). This has the upside of needing less setup, but all requests have to go back to Cloudflare's core, so request latency is far from optimal from some locations. You would also be subject to the API rate limit of 1200 requests per 5 minutes.

2 -> Create a Worker to proxy requests to your D1 database, either tightly bound to your application/schema or loose and just accepting any query. This would allow your Worker to execute potentially closer to your D1 database and avoid the API rate limit.
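Option 2 in its "loose" form could look something like the sketch below. It is only a sketch under assumptions: the binding name `DB`, the shared secret `PROXY_TOKEN`, and the `{ sql, params }` request shape are all hypothetical, and `D1QueryDb` is a simplified stand-in for the real D1 binding type so the example is self-contained.

```typescript
// Simplified stand-in for the D1 binding (only the calls used here).
interface D1QueryDb {
  prepare(sql: string): {
    bind(...params: unknown[]): { all(): Promise<{ results: unknown[] }> };
  };
}

interface ProxyEnv {
  DB: D1QueryDb; // hypothetical binding name
  PROXY_TOKEN: string; // hypothetical shared secret
}

function jsonResponse(data: unknown, status = 200): Response {
  return new Response(JSON.stringify(data), {
    status,
    headers: { "Content-Type": "application/json" },
  });
}

async function handleQuery(request: Request, env: ProxyEnv): Promise<Response> {
  if (request.method !== "POST") {
    return jsonResponse({ error: "Method not allowed" }, 405);
  }
  // A proxy that accepts any query must not be left open to the internet.
  if (request.headers.get("Authorization") !== `Bearer ${env.PROXY_TOKEN}`) {
    return jsonResponse({ error: "Unauthorized" }, 401);
  }
  let body: { sql?: unknown; params?: unknown };
  try {
    body = (await request.json()) as { sql?: unknown; params?: unknown };
  } catch {
    return jsonResponse({ error: "Body must be JSON" }, 400);
  }
  if (typeof body.sql !== "string") {
    return jsonResponse({ error: "Missing 'sql' string" }, 400);
  }
  const params: unknown[] = Array.isArray(body.params) ? body.params : [];
  try {
    const { results } = await env.DB.prepare(body.sql).bind(...params).all();
    return jsonResponse({ results });
  } catch (err) {
    return jsonResponse({ error: String(err) }, 500);
  }
}

// In a Worker this would be wired up as the fetch handler, e.g.:
// export default { fetch: handleQuery };
```

The tightly bound variant would replace the free-form `sql` field with a fixed set of routes, each running one known query, which is usually the safer choice.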

Oh, you're smart.

I think option 2 is good.

I tried with an 'npx create-payload-app' project.

But the problem here is implementing the admin dashboard.

Error: D1_ERROR: Currently processing a long-running export.

I need to synchronize the database from remote to local, but the export interrupts the system, and my system needs to operate 24/7.

With the current design, imports/exports block your database. Improvements to this are in the works, but for now that's the state of it.

Maybe use another database? Copy the data to it and use it for the export so it doesn’t block your application

This looks really handy as a stopgap until dynamic bindings are eventually (hopefully) added

I see a bunch of questions in the past asking about per-user/tenant D1. How can I stay up to date on the topic over the next few months/years?

I've migrated off D1 for now but would like to migrate back once that's possible

There'll be a blog post I imagine when it's actually possible. For now, if you want to do that, you can with Durable Objects, but it's basically not possible in any ergonomic way with D1. Nor would I recommend it for the vast majority of apps over a more traditional DB solution.

Hey all, I saw @yusukebe's recent Hono Vite template and wrote a prompt to add D1 (with drizzle). The prompt worked really well for me, would love to hear if it works for others too!

https://ctxs.ai/weekly/d1-database-setup-for-classifieds-app-a0m502

Regarding scaling: So if D1 uses SQLite under the hood, does it scale for databases with hundreds of millions of records within one table?

"Scaling" doesn't mean much without specifying for which queries. If your queries use proper indices, and provide

The caveat is that if at any point you try to modify millions of rows, things will not work well.

So, having millions of rows is not an issue (assuming they fit the storage limits), but a query that modifies millions of rows each time will most likely lead to issues.

So I have a question around D1 wrt dropping large tables. We have tables that sum to ~3.5 million rows and ~2.5GB each. Generally we will only have one of these, but when we need to update the data stored in them (they are used as a lookup of sorts) we will create a brand new table, switch to that, then drop the old table. The last part of this is causing us some significant issues and I am not sure how to work around it. While the

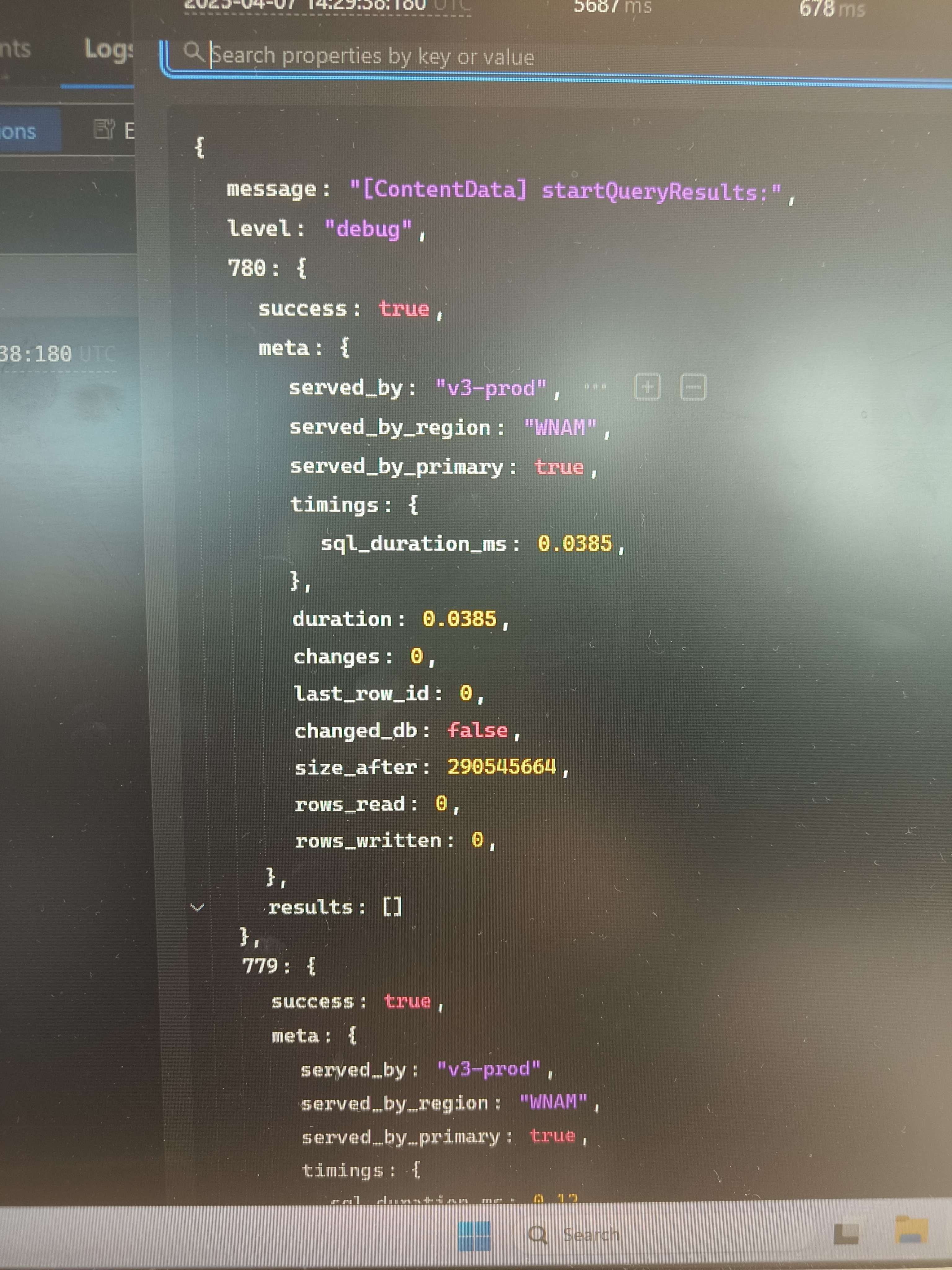

DROP TABLE {name} query is running, the table becomes completely unresponsive and can't serve other queries. We always get returned a failure for this query, whether using worker bindings or the API, however sometimes it actually does work and sometimes it doesn't (presumably the operation times out and a rollback is initiated). I wanted to know if this is something you guys are aware of, and if there are plans to improve this, because right now we can't really delete our old data without causing a temporary outage, and we may have to even try multiple times to delete the table. Cheers!It says in the documentation that the response from a .batch() call should be a array but I'm getting a json object with numberd keys for the batch statments? Is this something new?

Yes, we are aware, and unfortunately there is no obvious solution right now. When you delete 3.5 million rows, that causes writes to pretty much all of those SQLite pages, and at some point you hit the Durable Object CPU limits (a D1 database is backed by a DO). Yes, each query is in a transaction and if it fails it rolls back.

The only recommendation we have for now is that those deletions/writes should be done in smaller chunks.
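A chunked delete along those lines might be sketched as follows. This is an illustration under assumptions, not an official recipe: `deleteInChunks` is a hypothetical helper, `D1WriteDb` is a simplified stand-in for the D1 binding, the chunk size is arbitrary, and the `rowid` trick assumes an ordinary (not WITHOUT ROWID) table.

```typescript
// Simplified stand-in for the D1 binding (only the calls used here).
interface D1WriteDb {
  prepare(sql: string): {
    bind(...params: unknown[]): {
      run(): Promise<{ meta: { changes: number } }>;
    };
  };
}

// Deletes all rows of `table` in batches and returns the total rows removed.
async function deleteInChunks(
  db: D1WriteDb,
  table: string,
  chunkSize = 1000,
): Promise<number> {
  // Table names cannot be bound as parameters, so validate before interpolating.
  if (!/^[A-Za-z_][A-Za-z0-9_]*$/.test(table)) {
    throw new Error(`Unsafe table name: ${table}`);
  }
  const sql =
    `DELETE FROM "${table}" WHERE rowid IN ` +
    `(SELECT rowid FROM "${table}" LIMIT ?)`;
  let total = 0;
  for (;;) {
    // Each statement is its own small transaction, keeping per-query work low.
    const { meta } = await db.prepare(sql).bind(chunkSize).run();
    total += meta.changes;
    // A short batch means the table is now empty.
    if (meta.changes < chunkSize) break;
  }
  return total;
}
```

Each deleted row still counts as a write, so this trades the outage for cost rather than removing the problem.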

Right, and that's something we considered. However that gets pretty expensive, given that a DROP TABLE is 1 write, and DELETE FROM is 1 write per row deleted :/

How are you printing that? An array in JS is an object with numbers as keys, and those numbers are the array's indices.
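To illustrate the point about arrays, here is a small sketch. The `batchResults` value is a made-up stand-in for what a .batch() call returns, and `describeShape` is a hypothetical helper; the point is only that the same array can look like an object with numbered keys depending on how it is inspected.

```typescript
// Hypothetical stand-in for the value returned by a .batch() call.
const batchResults = [{ success: true }, { success: true }];

// Reports whether a value is a real array and which keys it exposes.
function describeShape(value: object): { isArray: boolean; keys: string[] } {
  return { isArray: Array.isArray(value), keys: Object.keys(value) };
}

// A real array: its keys are its indices, as strings.
console.log(describeShape(batchResults));

// Serialized directly, it is printed with square brackets.
console.log(JSON.stringify(batchResults));

// Spread into an object first, it becomes a plain object with numbered keys.
console.log(JSON.stringify({ ...batchResults }));
```

Checking with Array.isArray() is the reliable way to tell the two apart, since some debuggers and loggers display arrays with their index keys.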

Maybe something could be done around that to make it less prohibitively expensive to delete tables in chunks, while this problem exists

A row value can be 8 bytes, or 2MBs. Really it depends.

Can't you replace the DB instead of dropping the table?

- Create new DB + insert data

- Replace worker binding

- Delete old DB

Yea, I wouldn't base anything necessarily off of my tables, we store some decent size blobs of data within each row

Hey! Quick question about D1—if I run a query to check whether a specific id exists in a table, would that count as just one read, or would it count as one read per row in the table?

If you have an index that SQLite can use for that query, it'd be a single read

If not, it'll scan the entire table
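As a rough sketch of such an existence check: the table name `items`, the column `id`, and the helper `idExists` are hypothetical, and `D1ReadDb` is a simplified stand-in for the D1 binding. It assumes `id` is either the primary key or covered by an index (for example one created with CREATE INDEX IF NOT EXISTS idx_items_id ON items (id)).

```typescript
// Simplified stand-in for the D1 binding (only the calls used here).
interface D1ReadDb {
  prepare(sql: string): {
    bind(...params: unknown[]): {
      first(): Promise<Record<string, unknown> | null>;
    };
  };
}

// Returns true if a row with the given id exists in the hypothetical `items` table.
async function idExists(db: D1ReadDb, id: string): Promise<boolean> {
  const row = await db
    // SELECT 1 with LIMIT 1 avoids fetching column data and stops at the first match.
    .prepare("SELECT 1 AS found FROM items WHERE id = ? LIMIT 1")
    .bind(id)
    .first();
  // first() resolves to null when no row matched.
  return row !== null;
}
```

Running EXPLAIN QUERY PLAN on the same statement is a way to confirm SQLite searches an index rather than scanning the table.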

That's what I would do, might be harder in practice

Any regular process which involves dropping and recreating millions of rows of a table should be rethought architecture-wise, regardless of whether this is D1 or Postgres. If I were stuck with such a process, I'd probably run a blue/green deployment on this table to keep both the pre and post versions hot and avoid migration downtime.

startDataUUIDs is an array of UUIDs

Yea, oftentimes it's easier to rethink the issue rather than solve obscure edge cases

That's an option, but one that will suck once D1 global replication exists

Given that it will presumably replicate over time and increase the number of PoPs it exists in, replacing the entire database will destroy that replication and you'll start from step 1

Something like this would be totally fine in postgres, it's an extremely simple operation that won't impact the database whatsoever

Once it lands, D1 replication is extremely quick so this should still be a reasonable method of replacing the DB

I've not seen any info on that tbh

I imagine the process of replication is fast, but I expect the process of learning where to replicate to is not necessarily as fast