
Note that containers are not yet available and probably won't be until at least June (per the blog post today).

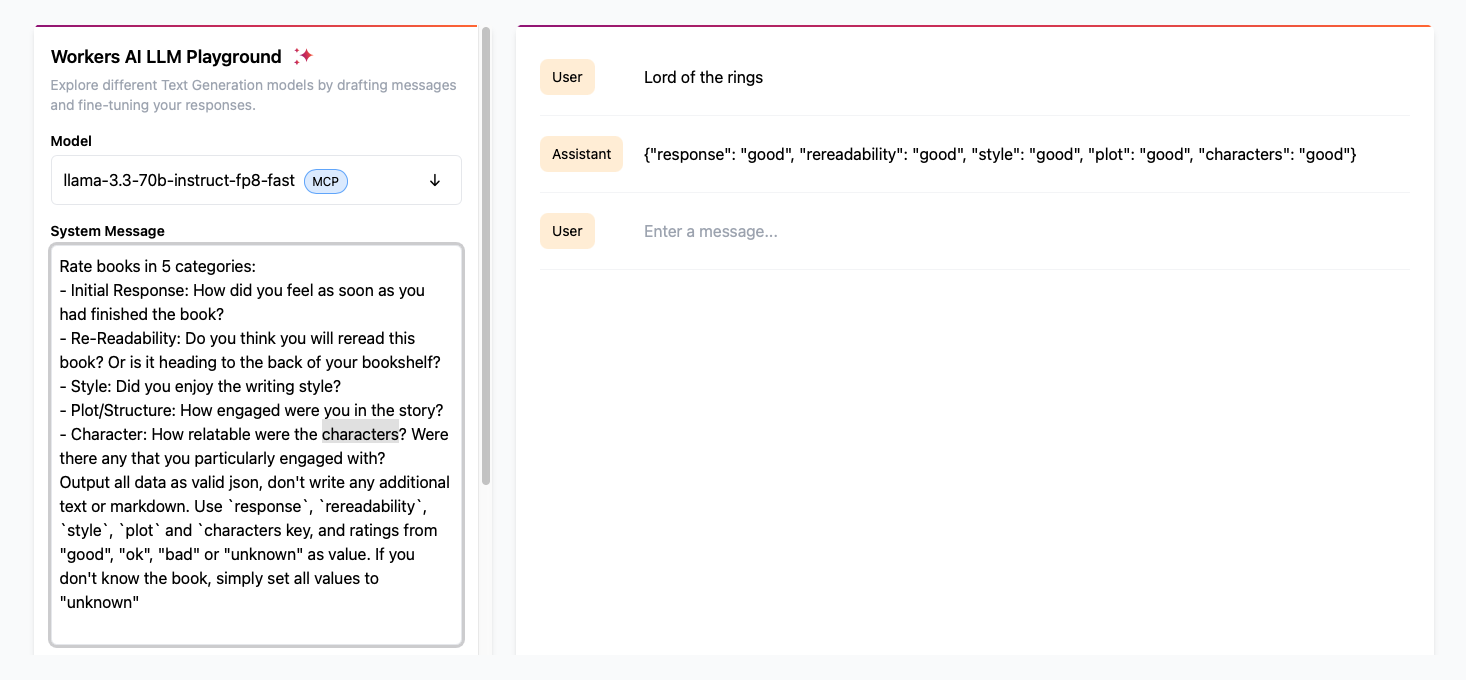

`messages` or `prompt`

`ai/tomarkdown`?

`max_tokens` defaults to 256 tokens; try setting it higher in your request to the model.
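A minimal sketch of raising the limit, assuming the Workers AI `env.AI.run(model, inputs)` binding and the Mistral model used later in this thread. The helper names and the 1024-token default are illustrative choices, not values from the docs. It sets `max_tokens` on both input styles, `messages` and `prompt`:

```typescript
// Hypothetical minimal typing for the Workers AI binding; only `run` is used here.
interface AiBinding {
  run(model: string, inputs: Record<string, unknown>): Promise<unknown>;
}

const MODEL = "@cf/mistralai/mistral-small-3.1-24b-instruct";

// Chat-style input with an explicit max_tokens.
// Without it, output is capped at the 256-token default.
function chatInput(prompt: string, maxTokens = 1024): Record<string, unknown> {
  return {
    messages: [
      { role: "system", content: "You're a helpful assistant." },
      { role: "user", content: prompt },
    ],
    max_tokens: maxTokens,
  };
}

// Prompt-style input: a single `prompt` string with the same limit.
function promptInput(prompt: string, maxTokens = 1024): Record<string, unknown> {
  return { prompt, max_tokens: maxTokens };
}

// In a Worker, pass env.AI as `ai`.
async function ask(ai: AiBinding, prompt: string): Promise<unknown> {
  return ai.run(MODEL, chatInput(prompt));
}
```

Pick a value that fits the model's context window; the point is only that the limit has to be set per request.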

Is there any way to increase the AutoRAG pipeline limit during the beta? I'm building a RAG app that requires separating data between users, and since there's no way to add metadata before or during retrieval, the only way I can enforce that is 1 user == 1 RAG pipeline.
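A sketch of the 1 user == 1 pipeline workaround, assuming the Workers binding exposes AutoRAG as `env.AI.autorag(name).search({ query })` (verify against the current AutoRAG docs). The `user-<id>` naming scheme and the helper names are hypothetical:

```typescript
// Hypothetical structural types modeled on env.AI.autorag(name).search({ query }).
interface AutoRagInstance {
  search(params: { query: string }): Promise<unknown>;
}
interface AiWithAutoRag {
  autorag(name: string): AutoRagInstance;
}

// Hypothetical naming scheme: one pipeline per user, e.g. "user-42".
function pipelineForUser(userId: string): string {
  // Reject rather than rewrite unexpected characters: rewriting could map two
  // different users to the same pipeline and break the isolation.
  if (!/^[a-z0-9-]+$/.test(userId)) {
    throw new Error(`unsupported user id: ${userId}`);
  }
  return `user-${userId}`;
}

// Scoping comes from *which* pipeline is queried, not from metadata filters,
// so a query can only reach the calling user's documents.
async function searchForUser(
  ai: AiWithAutoRag,
  userId: string,
  query: string,
): Promise<unknown> {
  return ai.autorag(pipelineForUser(userId)).search({ query });
}
```

Because isolation depends on routing, the pipeline count grows one-for-one with users, which is exactly what runs into the beta limit.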

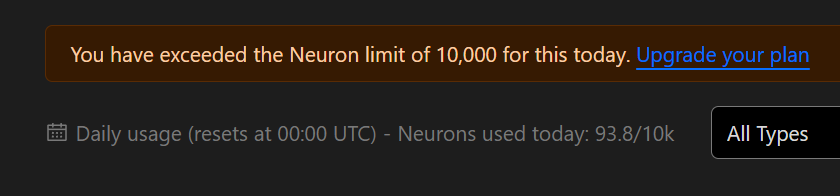

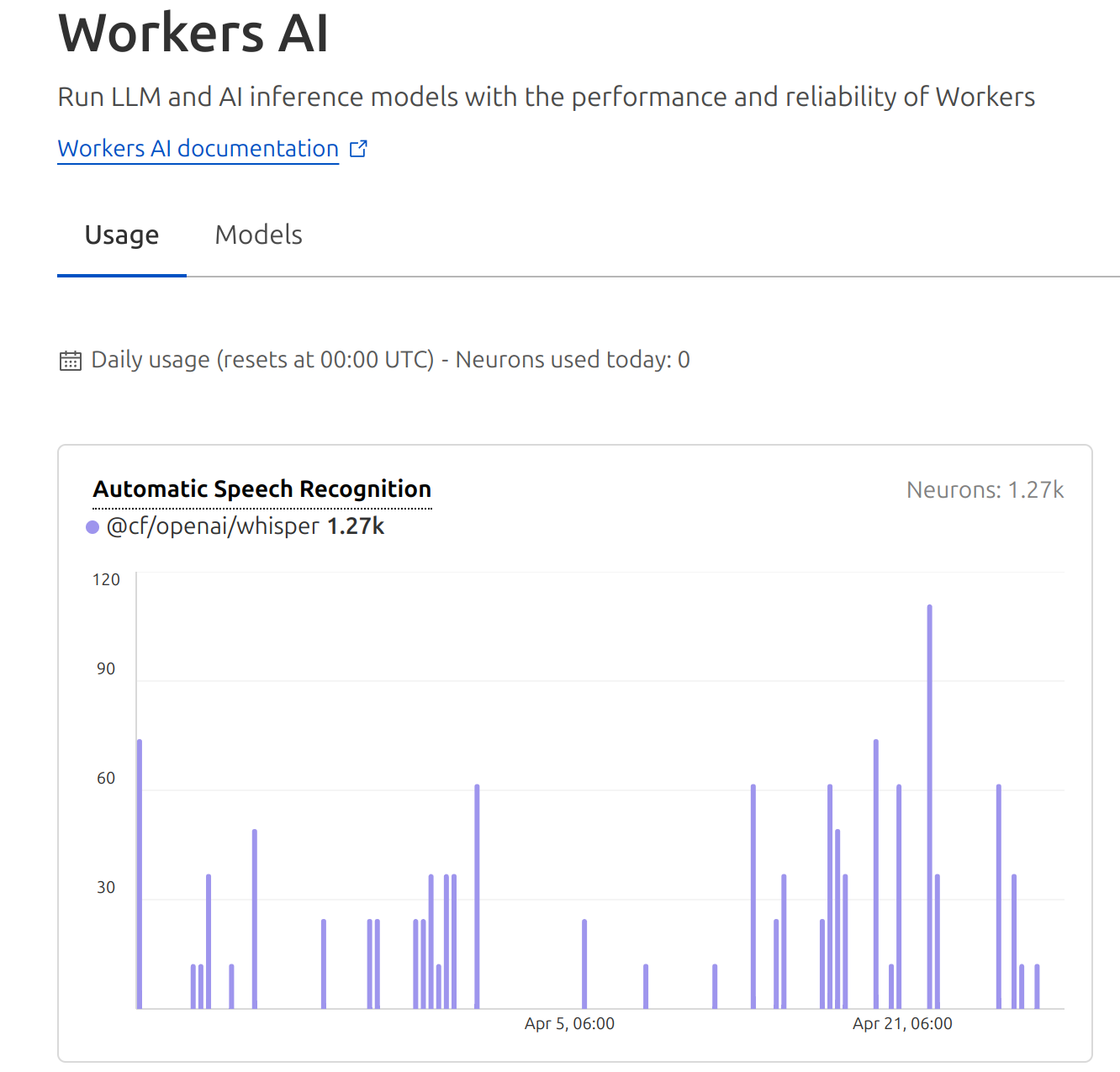

`@cf/openai/whisper`: is this a bug on the dashboard? Tbh I didn't even know this was being run anywhere, as I never really had the failover trigger....

I'm calling `this.env.AI.run('@cf/openai/whisper-large-v3-turbo`. I can't find any reference to the other one, yet I see it in my dashboard...
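One way the older model could appear without being called directly is a fallback path. Below is a hypothetical failover helper (not the poster's code) that tries candidates in order and logs each fallback, so dashboard entries for `@cf/openai/whisper` can be matched to failed turbo calls. Each candidate carries its own inputs because the two Whisper models may expect the audio encoded differently; check each model's input schema:

```typescript
// Hypothetical minimal typing for the Workers AI binding.
interface AiBinding {
  run(model: string, inputs: Record<string, unknown>): Promise<unknown>;
}

interface Candidate {
  model: string;
  inputs: Record<string, unknown>;
}

// Try each candidate in order; return the first success and which model served it.
async function runWithFailover(
  ai: AiBinding,
  candidates: Candidate[],
): Promise<{ model: string; result: unknown }> {
  let lastError: unknown = new Error("no candidates given");
  for (const { model, inputs } of candidates) {
    try {
      return { model, result: await ai.run(model, inputs) };
    } catch (err) {
      // Logging every fallback makes unexpected models in the dashboard traceable.
      console.warn(`${model} failed; trying next candidate`, err);
      lastError = err;
    }
  }
  throw lastError;
}
```

Returning the serving model alongside the result also lets you record it in your own logs.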

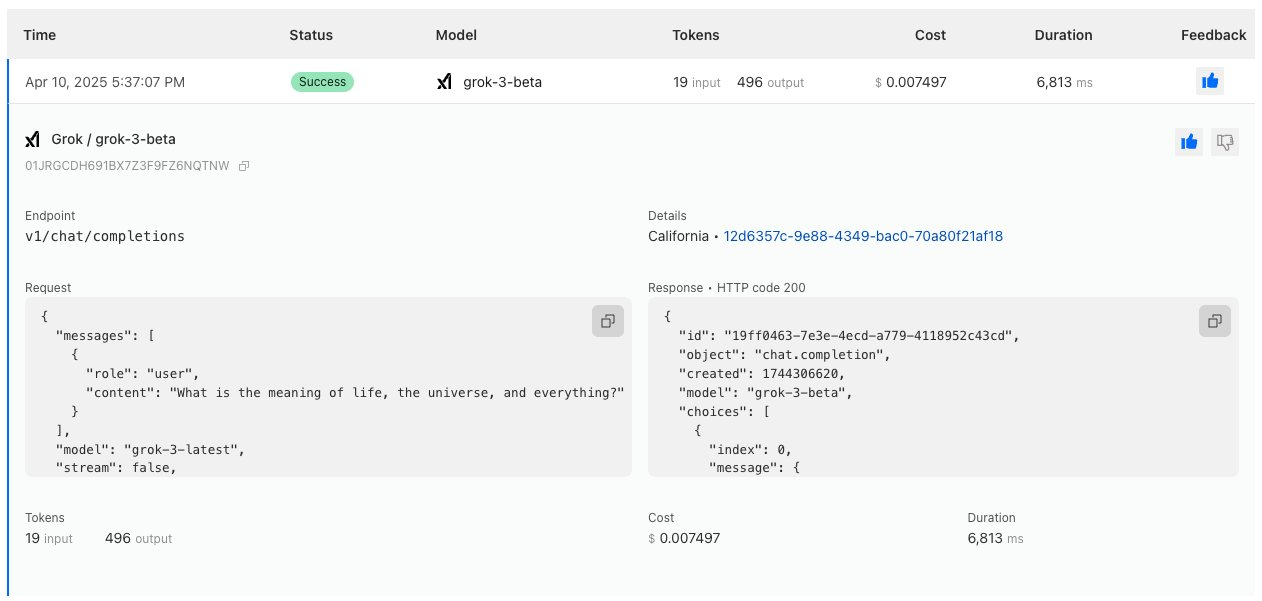

```markdown
## Metadata
- PDFFormatVersion=1.4
- Language=en-GB
- IsLinearized=false
- IsAcroFormPresent=false
- IsXFAPresent=false
- IsCollectionPresent=false
- IsSignaturesPresent=false
- Creator=Writer
- Producer=OpenOffice.org 2.4
- CreationDate=D:20090324113315-06'00'
```

```
InferenceUpstreamError [AiError]: 5023: JSON Schema mode is not supported with stream mode.
```

```ts
const aiResponse: any = await env.AI.run(
  "@cf/mistralai/mistral-small-3.1-24b-instruct",
  {
    messages: [
      { role: "system", content: "You're a helpful assistant." },
      // The user's prompt belongs in a "user" turn; "assistant" marks model output.
      { role: "user", content: prompt },
    ],
  },
);
```

```ts
const aiResponse: any = await env.AI.run(
  "@cf/mistralai/mistral-small-3.1-24b-instruct",
  { prompt },
);
```

```ts
const messages = [
  {
    role: "system",
    content: "You are a helpful assistant that can analyze images.",
  },
  {
    role: "user",
    content: [
      { type: "text", text: "What's in this image?" },
      // `datauri`: a data: URI string for the image, built elsewhere.
      { type: "image_url", image_url: { url: datauri } },
      // ... extra
    ],
  },
];

const aiResponse: any = await env.AI.run(
  "@cf/mistralai/mistral-small-3.1-24b-instruct",
  { messages },
);
```
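The 5023 error in this thread comes from requesting JSON Schema mode while streaming. A sketch of an input builder that keeps the two apart, assuming the `response_format: { type: "json_schema", json_schema }` shape from the Workers AI JSON Mode docs. The schema and helper name are hypothetical, and the model you call must support JSON mode:

```typescript
// Minimal JSON Schema typing for this sketch only.
type JsonSchema = Record<string, unknown>;

// Builds JSON-mode inputs. `stream` is forced to false because
// JSON Schema mode combined with streaming fails with error 5023.
function jsonModeInput(
  messages: { role: string; content: string }[],
  schema: JsonSchema,
): Record<string, unknown> {
  return {
    messages,
    response_format: { type: "json_schema", json_schema: schema },
    stream: false,
  };
}

// Hypothetical example schema.
const invoiceSchema: JsonSchema = {
  type: "object",
  properties: {
    title: { type: "string" },
    total: { type: "number" },
  },
  required: ["title", "total"],
};
```

If you need streaming for the same request, drop `response_format` and parse the text yourself instead.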