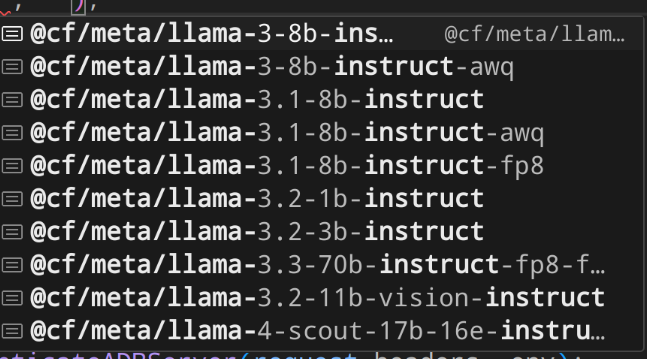

Is it possible to run HuggingFaceTB/SmolLM2-135M-Instruct on workersai?

{ image: string } · { usage: { prompt_tokens: number, completion_tokens: number, total_tokens: number } } · { usage: { tiles: number, steps: number } }

401 Unauthorized · whisper turbo large v3 · vars · wrangler.toml · WORKERS_AI_TOKEN = "gdhd" · workers ai · this · wrangler.toml · AUDIO_PROCESSOR

env.AI.run(…) · wrangler login · Capacity error, will retry: 3040: Capacity temporarily exceeded, please try again · ai-utils · AI.run
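The "Capacity error, will retry: 3040" message above points at transient capacity failures. A minimal retry sketch follows; the helper name `withCapacityRetry`, the check for "3040" in the error message, and the delay values are all assumptions for illustration, not something the thread or the Workers AI API prescribes.

```javascript
// Retry an async call when the error looks like a transient capacity failure.
// Assumption: the error message contains the code "3040", as in the log line above.
async function withCapacityRetry(fn, { retries = 3, baseDelayMs = 250 } = {}) {
  for (let attempt = 0; ; attempt++) {
    try {
      return await fn();
    } catch (err) {
      const transient = String(err && err.message).includes("3040");
      // Give up on non-capacity errors or once the retry budget is spent.
      if (!transient || attempt >= retries) throw err;
      // Exponential backoff: baseDelayMs, 2x, 4x, ...
      await new Promise((resolve) => setTimeout(resolve, baseDelayMs * 2 ** attempt));
    }
  }
}
```

It would wrap a call along the lines of `await withCapacityRetry(() => env.AI.run(model, inputs))`. Other errors, such as 8001: Invalid input, are rethrown immediately because retrying cannot fix them.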

Error: 8001: Invalid input when I try to call tools using @cf/meta/llama-4-scout-17b-16e-instruct. If I call the model without tools it works fine, but if I add the tools array it throws the error. Here is my tools array (the applyFilter JSON further down), called with:

await AI.run(model, { messages: messages, tools: tools });

With @cf/meta/llama-3.3-70b-instruct-fp8-fast instead of llama-4-scout the tool call works, but then the model responds incorrectly. Has there been a change in how tools are called between llama-3 and llama-4? Should I use Functions instead?

AiError: 3043: Internal server error on some calls -- has anyone successfully debugged those?

I switched to response_format, and as a result I get the response as JSON that can be easily parsed and then used to call my functions. It basically provides the same result, except that the model does not select the tool itself, but one could easily set a prop in the JSON for function selection. It still sucks that tool calling appears to be broken.

try {
  console.log(`[BYPASS] Attempt 3: Using AI binding with raw bytes`);
  const aiTranscription = await this.env.AI.run('@cf/openai/whisper-large-v3-turbo', {
    audio: [...audioBytes], // Convert Uint8Array to regular array
    language: 'en',
  });

try {
  console.log(`[BYPASS] Attempt 2: Using AI binding with base64`);
  const aiTranscription = await this.env.AI.run('@cf/openai/whisper-large-v3-turbo', {
    audio: base64Audio,
    language: 'en',
  });
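The two attempts above differ only in how the audio is encoded. As far as I know, @cf/openai/whisper-large-v3-turbo expects `audio` as a base64 string, while the older @cf/openai/whisper model takes an array of byte values; treat that as an assumption and check the model page. The sketch below shows one way to produce the base64 string from a Uint8Array; `bytesToBase64` is a hypothetical helper name, not part of any Cloudflare API.

```javascript
// Encode raw audio bytes as a base64 string.
// Works in chunks so String.fromCharCode is never called with a huge argument list.
function bytesToBase64(bytes) {
  let binary = "";
  const chunkSize = 0x8000;
  for (let i = 0; i < bytes.length; i += chunkSize) {
    binary += String.fromCharCode(...bytes.subarray(i, i + chunkSize));
  }
  // btoa is available in Workers and in recent Node.js versions.
  return btoa(binary);
}
```

The result would then be passed as `audio: bytesToBase64(audioBytes)` in the second attempt. If the base64 variant still fails, comparing the decoded length with the original byte count is a quick way to rule out an encoding problem.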

# Define the Durable Objects
[[durable_objects.bindings]]
name = "AUDIO_PROCESSOR"
class_name = "AudioProcessor"

# External DO binding for LLM worker
[[durable_objects.bindings]]
name = "LLM_PROCESSOR"
class_name = "LlmProcessor"
script_name = "llm"

# Add queue consumers with explicit handler functions
[[queues.consumers]]

[vars]

# Define the Durable Object class
[[migrations]]
tag = "v1"
new_classes = ["AudioProcessor"]

[[kv_namespaces]]

[[d1_databases]]

[[queues.consumers]]

[[queues.producers]]

[ai]
binding = "AI"

[observability]
enabled = true
head_sampling_rate = 1

export class AudioProcessor {

  constructor(state, env) {
    this.state = state;
    this.env = env;
  }
}

import { runWithTools } from '@cloudflare/ai-utils';

const model = '@cf/meta/llama-3.3-70b-instruct-fp8-fast';

const response = await runWithTools(
  env.AI,
  model,
  {
    messages: chatmessages,
    tools: function_tools,
    max_tokens: 8192,
    temperature: 0.6,
  },
  { strictValidation: true, maxRecursiveToolRuns: 1, verbose: true, streamFinalResponse: true }
);

[

  {
    "name": "applyFilter",
    "description": "Function that applies the filter...",
    "parameters": {
      "type": "object",
      "properties": {
        "query": {
          "type": "object",
          "description": "The query object..."
        },
        "filterDesc": {
          "type": "string",
          "description": "Description, for the user, of the filter..."
        }
      },
      "required": ["query", "filterDesc"]
    }
  }
]

Error: 5006: Error: oneOf at '/' not met, 0 matches: required properties at '/' are 'prompt', Type mismatch of '/messages/0/content', 'array' not in 'string', Type mismatch of '/messages/1/content', 'array' not in 'string', required properties at '/functions/0' are 'name,code'

const API_BASE_URL = "https://api.cloudflare.com/client/v4/accounts/{myID}/ai/run/"

const API_AUTH_TOKEN = "{myTOKEN}" //process.env.API_AUTH_TOKEN;
const model = "@cf/meta/llama-2-7b-chat-int8"

// Request headers: bearer token plus a JSON content type for the body.
const headers = {
  'Authorization': `Bearer ${API_AUTH_TOKEN}`,
  'Content-Type': 'application/json',
}

if (!API_BASE_URL || !API_AUTH_TOKEN) {
  throw new Error('API credential is wrong or not configured from Github Action')
}

const inputs = [
  {'role': 'system', 'content': systemPrompt},
  {'role': 'user', 'content': userPrompt},
]

// The REST endpoint expects the chat history under the `messages` key.
const payload = {
  messages: inputs
}

try {
  console.log("Requesting to LLM...")
  // No `mode: 'no-cors'` here: it would make the response opaque (status 0)
  // and strip the Authorization header from the request.
  const response = await fetch(`${API_BASE_URL}${model}`, {
    method: 'POST',
    headers: headers,
    body: JSON.stringify(payload),
  });
  if (!response.ok) {
    throw new Error(`Error request from LLM: ${response.status}`)
  }
  console.log("Requesting completed. Waiting for output...")
  const output = await response.json(); // output
  console.log(output)
}
catch (error) {
  console.log("API Error", error);
  throw error;
}
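
Coming back to the response_format workaround mentioned earlier in the thread: the idea of putting a prop in the JSON for function selection could look roughly like this. It is only a sketch. The schema shape follows what I understand Workers AI JSON mode to accept (`type: "json_schema"` with a `json_schema` object), and the `tool` / `arguments` property names, the `handlers` table, and `dispatch` are all made up for illustration.

```javascript
// Hypothetical response_format: the model must answer with a tool name and its arguments.
const toolChoiceFormat = {
  type: "json_schema",
  json_schema: {
    type: "object",
    properties: {
      tool: { type: "string", enum: ["applyFilter"] },
      arguments: { type: "object" },
    },
    required: ["tool", "arguments"],
  },
};

// Hypothetical local handlers, keyed by tool name.
const handlers = {
  applyFilter: ({ query, filterDesc }) => `applied: ${filterDesc}`,
};

// Parse the model's JSON reply and call the handler it selected.
function dispatch(reply, table = handlers) {
  const parsed = typeof reply === "string" ? JSON.parse(reply) : reply;
  // Own-property check so names like "toString" are not treated as tools.
  if (!Object.prototype.hasOwnProperty.call(table, parsed.tool)) {
    throw new Error(`Unknown tool: ${parsed.tool}`);
  }
  return table[parsed.tool](parsed.arguments ?? {});
}
```

The format object would be passed as `response_format: toolChoiceFormat` next to `messages`, and the model's reply handed to `dispatch`. Unlike real tool calling, the model never sees the tool descriptions unless they are also put into the system prompt.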