Welcome to the official Cloudflare Developers server. Here you can ask for help and stay updated with the latest news

83,498Members

View on DiscordResources

Similar Threads

Was this page helpful?

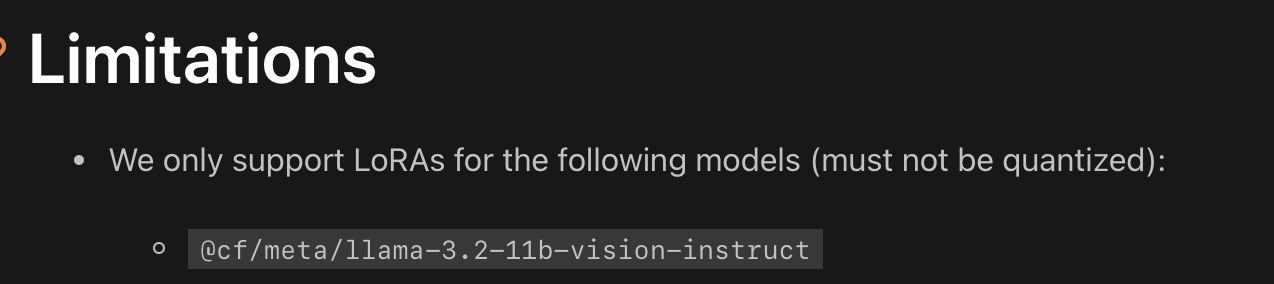

/ai/run/@cf/meta/llama-3.2-11b-vision-instruct

Creating new finetune "my-finetune-name" for model "@cf/meta/llama-3.2-11b-vision-instruct".

Assets uploaded, finetune "my-finetune-name" is ready to use.

Some kind of warning or error would have been nice though.

Non-Commercial Use Only. You may only access, use, Distribute, or create Derivatives of the FLUX.1 [dev] Model or Derivatives for Non-Commercial Purposes. If you want to use a FLUX.1 [dev] Model or a Derivative for any purpose that is not expressly authorized under this License, such as for a commercial activity, you must request a license from Company, which Company may grant to you in Company’s sole discretion and which additional use may be subject to a fee, royalty or other revenue share. Please see www.bfl.ai if you would like a commercial license.

Capacity temporarily exceeded, please try again.

@cf/meta/llama-3.1-8b-instruct-fp8-fast

@cf/meta/llama-3.2-3b-instruct

message: "AiError: 5025: This model doesn't suuport JSON Schema."

If the docs are wrong, how can I determine which models support JSON Schema? Otherwise, what could be the problem?

json_object

json_schema
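For anyone comparing the two `response_format` types mentioned above, here is a minimal sketch of how the input for each might be built. The `buildJsonModeInput` helper and the sample schema are hypothetical names used only for illustration, and the `response_format` shape is an assumption based on the Workers AI JSON Mode documentation, so verify it against the current docs.

```typescript
// Hypothetical helper: builds the input object that would be passed as the
// second argument to env.AI.run(). Nothing here calls the API.
type JsonSchema = Record<string, unknown>;

interface JsonModeInput {
  messages: { role: "system" | "user"; content: string }[];
  response_format:
    | { type: "json_object" }
    | { type: "json_schema"; json_schema: JsonSchema };
}

function buildJsonModeInput(prompt: string, schema?: JsonSchema): JsonModeInput {
  return {
    messages: [{ role: "user", content: prompt }],
    // With a schema, ask for schema-constrained output; without one,
    // ask only for "some valid JSON object".
    response_format: schema
      ? { type: "json_schema", json_schema: schema }
      : { type: "json_object" },
  };
}

// Illustrative schema (an assumption, not taken from the thread).
const countrySchema: JsonSchema = {
  type: "object",
  properties: {
    name: { type: "string" },
    capital: { type: "string" },
  },
  required: ["name", "capital"],
};

const withSchema = buildJsonModeInput("Tell me about India.", countrySchema);
const withoutSchema = buildJsonModeInput("Tell me about India.");
```

If a model rejects the `json_schema` variant with error 5025, sending the `json_object` variant to the same model is one way to check whether only the schema form is unsupported. Which models accept which type is not verified here.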

@cf/meta/llama-4-scout-17b-16e-instruct) with some tools / function calling. With or without the compatibility mode (https://developers.cloudflare.com/workers-ai/configuration/open-ai-compatibility/), I get an answer with no tool results and no AI message either. I am using an official Java client library... I would like to know whether I should keep pushing (I have tried strict tools) and whether this is expected.
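To narrow down a case like this, it can help to check what the assistant message actually contains before blaming the client library. The sketch below assumes the OpenAI-style chat completion shape used by the compatibility endpoint. The `classifyReply` helper, the `get_weather` tool, and the request body are hypothetical and shown in TypeScript rather than Java.

```typescript
// Only the fields of an OpenAI-style assistant message that this sketch inspects.
interface AssistantMessage {
  content?: string | null;
  tool_calls?: { function: { name: string; arguments: string } }[];
}

type ReplyKind = "tool_calls" | "content" | "empty";

// Report whether the model asked for a tool, answered in text, or returned nothing usable.
function classifyReply(message: AssistantMessage): ReplyKind {
  if (message.tool_calls && message.tool_calls.length > 0) return "tool_calls";
  if (typeof message.content === "string" && message.content.trim() !== "") return "content";
  return "empty";
}

// Hypothetical request body in the OpenAI tools format (not sent anywhere here).
const requestBody = {
  model: "@cf/meta/llama-4-scout-17b-16e-instruct",
  messages: [{ role: "user", content: "What is the weather in Lisbon?" }],
  tools: [
    {
      type: "function",
      function: {
        name: "get_weather", // hypothetical tool name
        description: "Look up the current weather for a city",
        parameters: {
          type: "object",
          properties: { city: { type: "string" } },
          required: ["city"],
        },
      },
    },
  ],
};
```

An `"empty"` result on the raw response would suggest the problem is on the model or endpoint side rather than in how the client deserializes the reply.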


{ temperature: 5, top_p: 1, seed: Math.floor(Math.random() * 9999999999) + 1 } to attempt to encourage different results every run... but no luck

FAIL tests/index.test.ts [ tests/index.test.ts ]
SyntaxError: Unexpected token ':'
❯ Users/advany/Documents/GitHub/generator-agent/node_modules/ajv/lib/definition_schema.js?mf_vitest_no_cjs_esm_shim:3:18
❯ Users/advany/Documents/GitHub/generator-agent/node_modules/ajv/lib/keyword.js?mf_vitest_no_cjs_esm_shim:5:24
❯ Users/advany/Documents/GitHub/generator-agent/node_modules/ajv/lib/ajv.js?mf_vitest_no_cjs_esm_shim:29:21

resolve: {

  alias: {
    ajv: 'ajv/dist/ajv.min.js',
  },
},
optimizeDeps: {
  include: ['ajv'],
},

"errors": [{

  "message": "AiError: AiError: Lora not compatible with model. (c8afa8e1-48d6-42d4-871d-b04bce9b4c67)",
  "code": 3030
}]

const aiResponse = await env.AI.run(

  '@cf/meta/llama-3.1-8b-instruct',
  {
    messages: [
      {
        role: 'system',
        content: 'You are a helpful assistant that transforms text into different tones and styles.'
      },
      {
        role: 'user',
        content: fullPrompt
      }
    ],
    max_tokens: 2048
  },
  {
    gateway: {
      skipCache: false, // Enable caching (default behavior)
      cacheTtl: 86400 // Cache for 24 hours (24 * 60 * 60 seconds)
    }
  }
);

@cf/meta/llama-4-scout-17b-16e-instruct

{ temperature: 5, top_p: 1, seed: Math.floor(Math.random() * 9999999999) + 1 }

BGE-M3 batch request failed after all retry attempts

details: {
  "attempts": 4,
  "error": "10000: Authentication error",
  "batchSize": 1
}
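Since the log above shows four attempts spent on an authentication error, here is a rough sketch of how a retry loop might decide whether another attempt is worthwhile. It assumes error strings follow the `code: message` pattern quoted in these threads. The helper names and the set of non-retryable codes are assumptions for illustration, not documented Workers AI behavior.

```typescript
// Pull the first numeric code out of messages such as
// "AiError: 5025: ..." or "10000: Authentication error".
function extractErrorCode(message: string): number | null {
  const match = message.match(/(?:^|:\s*)(\d{4,5}):/);
  return match ? Number(match[1]) : null;
}

// Assumption: these codes describe problems that the same request will hit
// again (bad credentials, unsupported feature, incompatible LoRA).
const NON_RETRYABLE_CODES = new Set<number>([10000, 5025, 3030]);

// Retry everything except errors whose code is known to be permanent.
function shouldRetry(message: string): boolean {
  const code = extractErrorCode(message);
  return code === null || !NON_RETRYABLE_CODES.has(code);
}
```

With this check, `"10000: Authentication error"` would fail on the first attempt instead of the fourth, while `"Capacity temporarily exceeded, please try again."` would still be retried.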