I don't have one because I don't know the answer to their question, but telling them to use Appscript is not the answer.

image_url

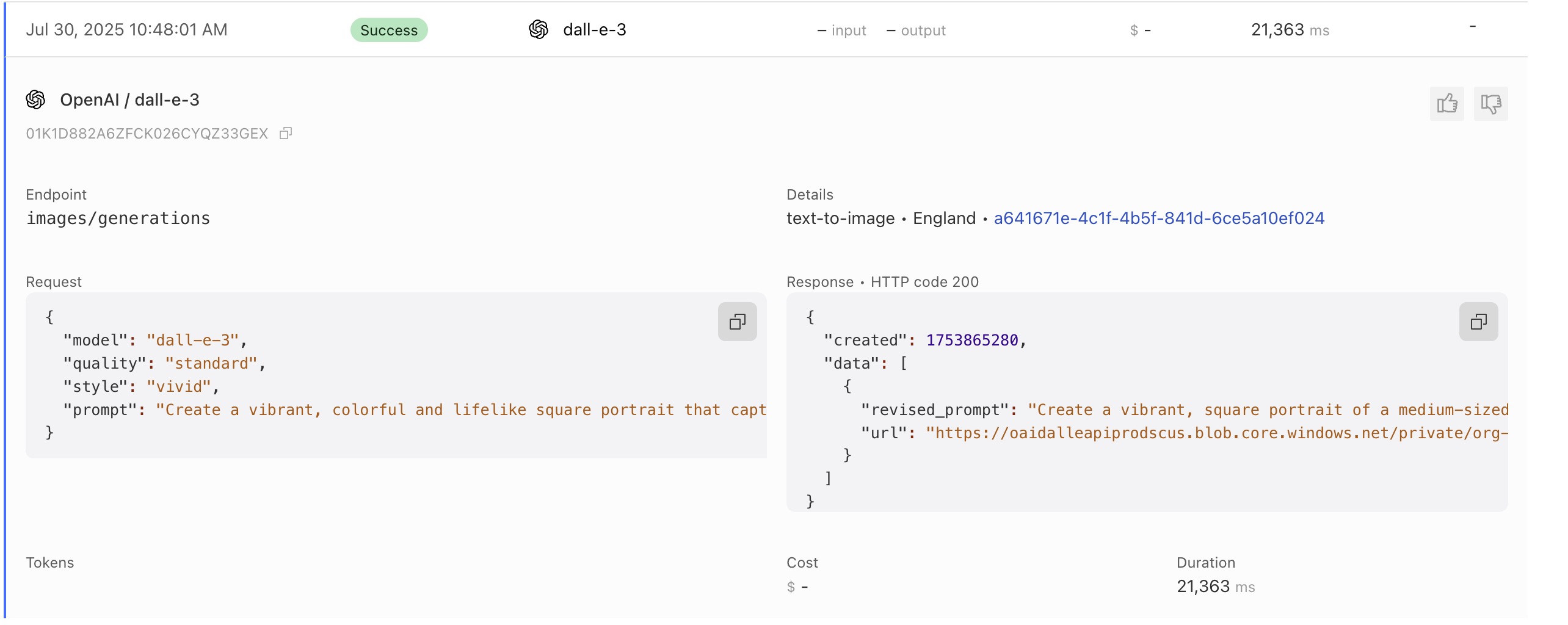

openAI/dall-e-3

thinking_budget

google-ai-studio

gemini-flash-2.5

thinking_budget

curl --location --request OPTIONS 'https://your-gateway/openrouter/v1/models'

welcome-and-rules. It creates confusion for people trying to help you and doesn't get your issue or question solved any faster.

7000

Error: The requested Durable Object is temporarily disabled, please contact us for details

7002: Not Found

Patch Gateway Log

/anthropic/v1/messages

asn and ip to the metadata key I cannot actually negatively filter them

Metadata Value <does not equal> Some IP then it negatively matches all the ASNs because they do not have the value of the IP

{
  "model": "gpt-4o-mini-transcribe",
  "response_format": "text",
  "file": {}
}

{
  "error": {
    "message": "Audio file processing failed",
    "type": "server_error",
    "param": null,
    "code": null
  }
}

< HTTP/2 500

< date: Tue, 12 Aug 2025 08:49:47 GMT
< content-type: application/json
< content-length: 101
< server: cloudflare
< nel: {"report_to":"cf-nel","success_fraction":0.0,"max_age":604800}
< vary: Accept-Encoding
< cf-cache-status: DYNAMIC
< strict-transport-security: max-age=15552000; includeSubDomains; preload
< x-content-type-options: nosniff
< cf-ray: 96deb8af99b71d6d-SIN
< alt-svc: h3=":443"; ma=86400

{"success":false,"result":[],"messages":[],"error":[{"code":2002,"message":"Internal server error"}]}

import OpenAI from 'openai';

// `apiKey` is assumed to be defined elsewhere; {account_id} and {gateway_id} are placeholders.
const client = new OpenAI({
  apiKey: apiKey,
  baseURL: 'https://gateway.ai.cloudflare.com/v1/{account_id}/{gateway_id}/compat',
  defaultHeaders: {
    // Sent in addition to the SDK's own Authorization header.
    'cf-aig-authorization': `Bearer ${apiKey}`,
  },
});

// Chat completion routed through the gateway's compat endpoint.
const response = await client.chat.completions.create({
  model: 'cerebras/gpt-oss-120b',
  messages: [{ role: 'user', content: 'What is cloudflare?' }],
});
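
Going back to the metadata filter complaint quoted earlier (asn and ip stored as metadata, then filtered with Metadata Value <does not equal> Some IP): a small model may help show why that filter appears to match everything. This is only one reading of the poster's description, not AI Gateway's actual filter implementation. The log shape, both helper functions, and the sample values are hypothetical.

```javascript
// Hypothetical log records: each carries an `asn` and an `ip` metadata entry.
const logs = [
  { id: 1, metadata: { asn: "13335", ip: "203.0.113.7" } },
  { id: 2, metadata: { asn: "13335", ip: "198.51.100.2" } },
];

// Value-only "does not equal": true if ANY metadata value differs from the target.
// The asn value never equals an IP, so every log matches.
function matchesValueNotEqual(log, target) {
  return Object.values(log.metadata).some((value) => value !== target);
}

// Key-scoped "does not equal": only the value under the named key is compared.
function matchesKeyNotEqual(log, key, target) {
  return log.metadata[key] !== target;
}

const excludedIp = "203.0.113.7";

const valueOnly = logs
  .filter((log) => matchesValueNotEqual(log, excludedIp))
  .map((log) => log.id);

const keyScoped = logs
  .filter((log) => matchesKeyNotEqual(log, "ip", excludedIp))
  .map((log) => log.id);

console.log(valueOnly); // [ 1, 2 ]
console.log(keyScoped); // [ 2 ]
```

In this model the value-only comparison keeps log 1 even though its ip is the excluded one, because its asn entry also counts as "not equal". Scoping the comparison to the ip key is what would make the negative filter behave as the poster expected.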