Browser Rendering API – Active Sessions Unusable

I’m seeing a consistent issue where sessions marked as active are actually unhealthy and unusable. It's been around 7 hours since this issue started.

🧪 I ran a health-check script across 30 active sessions (the Browser Rendering logs don't show these 30 sessions; they can only be seen through the puppeteer.limits API):

- Healthy: 0

- Unhealthy: 30

- All failed with:

Unable to connect to existing session [ID]... TypeError: Cannot read properties of null (reading 'accept')
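For anyone who wants to reproduce the check, here is a minimal sketch of what such a health-check could look like. It is not the original script. It assumes the session IDs have already been collected (for example from puppeteer.limits), and it takes the connect call as a parameter so it can run outside a Worker. The names `checkSessions`, `BrowserLike`, and `HealthReport` are made up for this illustration.

```typescript
// Hypothetical minimal shape of a connected browser; only what the check needs.
interface BrowserLike {
  version(): Promise<string>;
  disconnect(): void | Promise<void>;
}

// Injected connect function, e.g. (id) => puppeteer.connect(env.MYBROWSER, id),
// where MYBROWSER is an assumed binding name.
type Connect = (sessionId: string) => Promise<BrowserLike>;

interface HealthReport {
  healthy: string[];
  unhealthy: { sessionId: string; error: string }[];
}

// Try to attach to every session and record which ones respond.
async function checkSessions(
  sessionIds: string[],
  connect: Connect,
): Promise<HealthReport> {
  const report: HealthReport = { healthy: [], unhealthy: [] };
  for (const sessionId of sessionIds) {
    try {
      const browser = await connect(sessionId);
      await browser.version(); // cheap round trip to prove the session answers
      await browser.disconnect(); // detach without closing the session
      report.healthy.push(sessionId);
    } catch (err) {
      const error = err instanceof Error ? err.message : String(err);
      report.unhealthy.push({ sessionId, error });
    }
  }
  return report;
}
```

Inside a Worker this could be wired up as `checkSessions(ids, (id) => puppeteer.connect(env.MYBROWSER, id))`. A session whose connect call throws, as in the error above, ends up in `unhealthy` together with its message.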

💡 It looks like:

- Sessions aren’t properly cleaned up

- They're consuming limits but can’t be reused.

Happy to share logs or the script if needed.

8 Replies

Since yesterday, I’ve been encountering the following error:

Error: Unable to create new browser: code: 429: message: Too many browsers already running

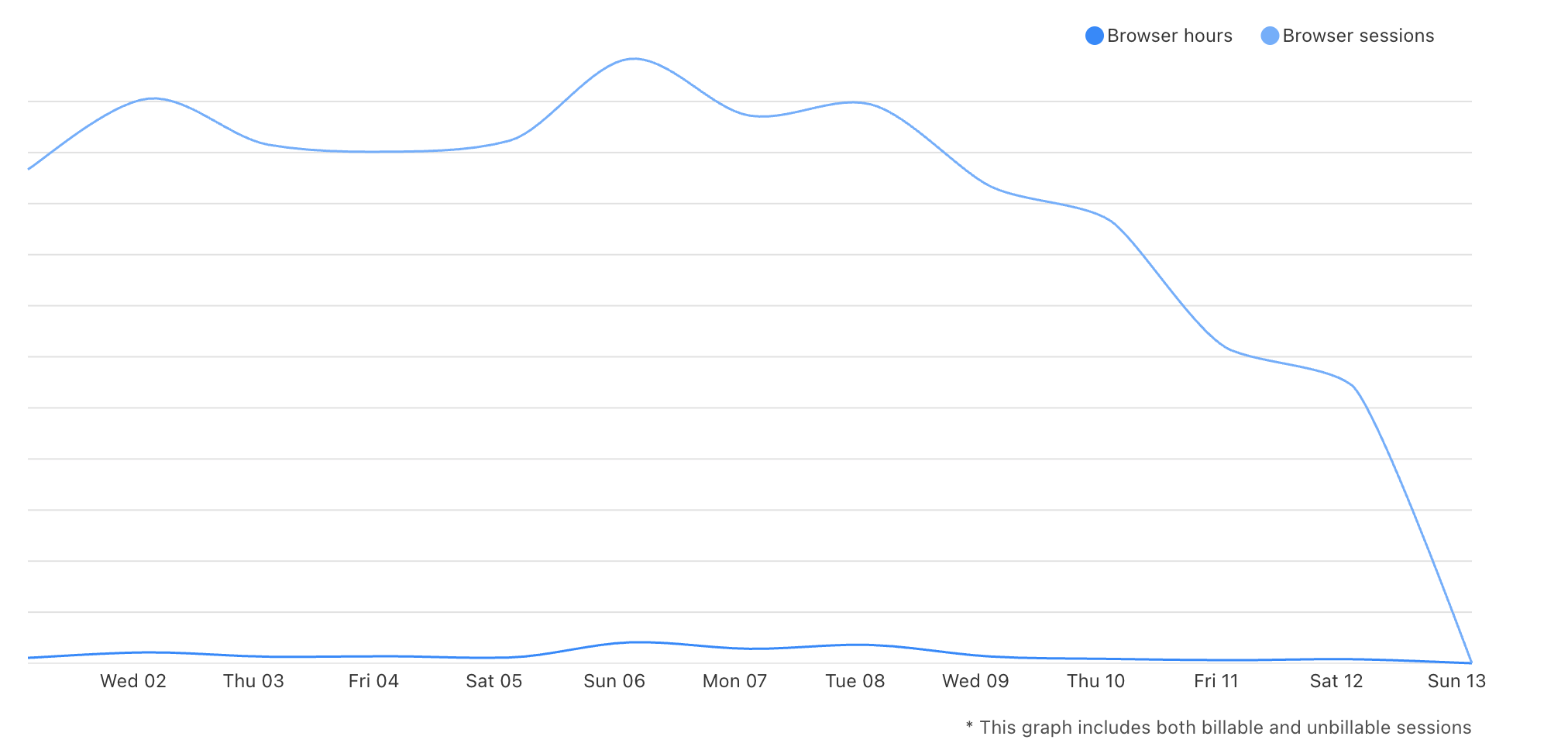

I checked the CF dashboard and can see that the browser sessions dropped!

This issue is affecting all users and all requests.

Any help?

cc: @Kathy | Browser Rendering PM

thanks for the ping @Vero .

@Himanshu @Nayef are you seeing this issue still?

@Kathy | Browser Rendering PM can you take a look at this ticket, 01648851?

link

https://www.support.cloudflare.com/support/s/case/500Nv00000PyYYKIA3/issue-with-puppeteer-rate-limit-error-cloudflare-browser-rendering

🙏

for some reason I'm not able to open that ticket. the issue should have been resolved on the 13th though. has it been resolved from your end?

Can you access it by ticket number 01648851?

Anyway, I'll try to write it down here as well.

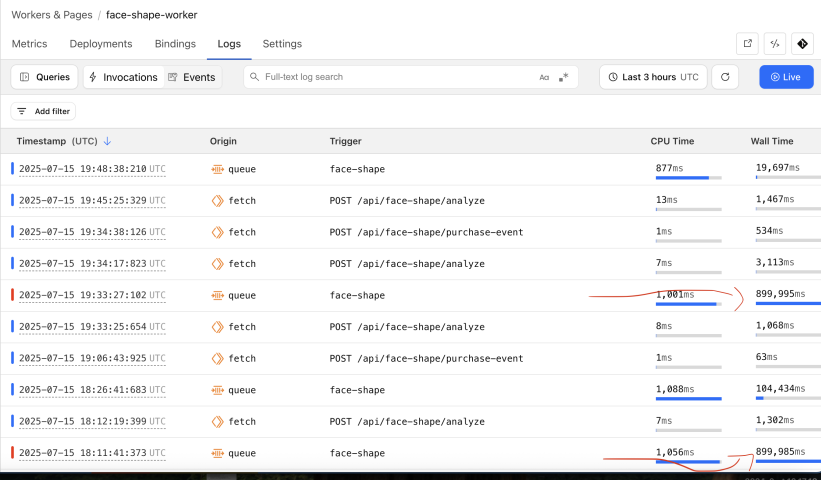

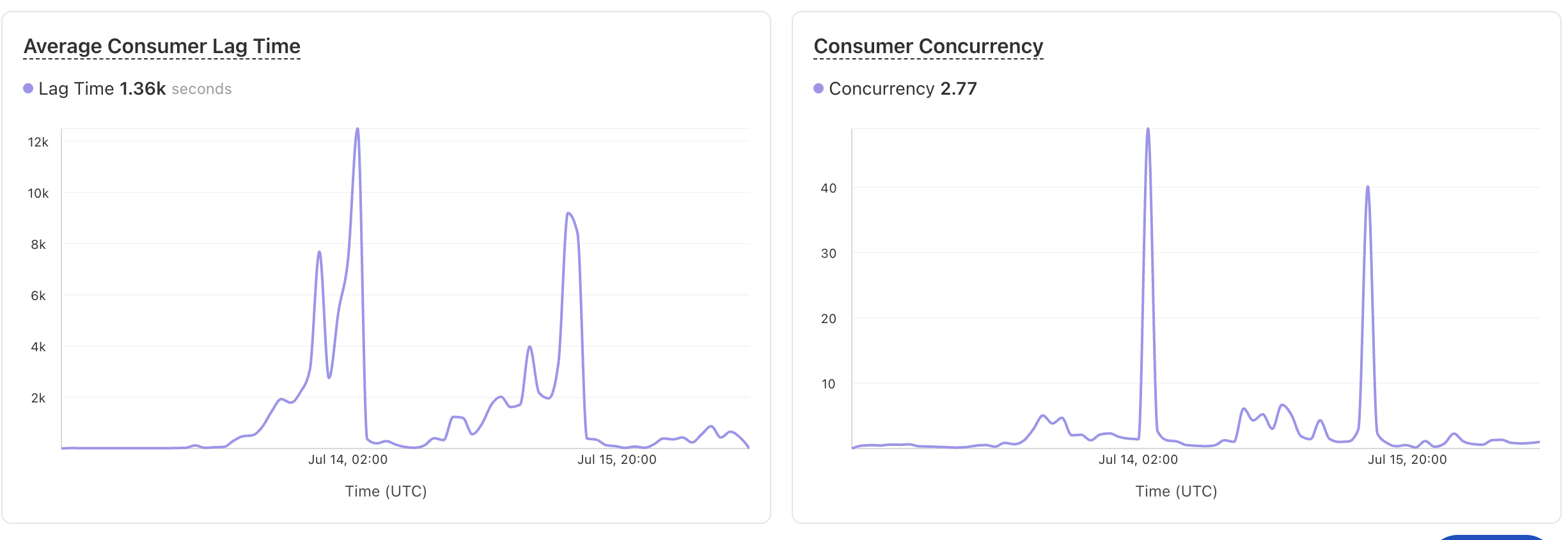

After further investigation into the queue that triggers the worker responsible for generating PDF files via browser rendering, I noticed some unexpected behavior:

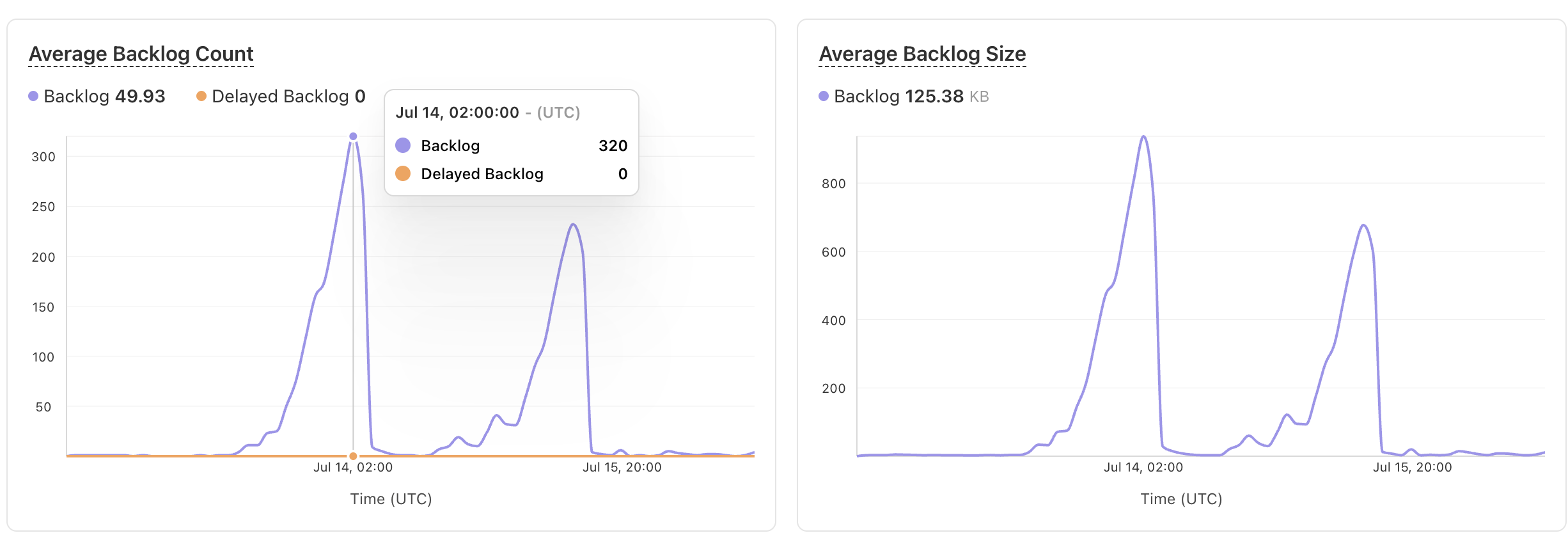

The queue appears to accumulate messages without immediately triggering the consumer worker. Instead of processing each message as it arrives, the queue waits until it collects hundreds of messages before invoking the consumer.

As a result, the browser rendering engine attempts to handle too many requests at once. This leads to hitting the browser rendering limit after processing the first ~10 messages, and the remaining messages fail with a 429.
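Independently of why the queue delivers large batches, the consumer can cap how many messages it renders at once. Below is a minimal sketch, assuming a message object with `body`, `ack()`, and `retry({ delaySeconds })` like the ones Cloudflare Queues passes to a consumer. `processBatch`, `maxInFlight`, and `retryDelaySeconds` are names invented for this example, and the 30-second default delay is arbitrary.

```typescript
// Minimal shape of a queue message; mirrors ack()/retry() on Queues messages.
interface QueueMessageLike<T> {
  body: T;
  ack(): void;
  retry(options?: { delaySeconds?: number }): void;
}

// Process a batch with at most `maxInFlight` handlers running concurrently.
// Failed messages (for example a 429 from the browser binding) are retried
// later instead of failing the whole batch.
async function processBatch<T>(
  messages: readonly QueueMessageLike<T>[],
  handle: (body: T) => Promise<void>,
  maxInFlight: number,
  retryDelaySeconds = 30, // arbitrary default, tune as needed
): Promise<void> {
  let next = 0; // index of the next unclaimed message

  async function worker(): Promise<void> {
    while (next < messages.length) {
      const message = messages[next++]; // claim before awaiting
      try {
        await handle(message.body);
        message.ack();
      } catch {
        message.retry({ delaySeconds: retryDelaySeconds });
      }
    }
  }

  const workerCount = Math.min(maxInFlight, messages.length);
  await Promise.all(Array.from({ length: workerCount }, () => worker()));
}
```

In a consumer this could be called as `await processBatch(batch.messages, renderPdf, 2)`, where `renderPdf` is a hypothetical handler and the limit is chosen below the account's concurrent browser limit. The consumer's batch size and concurrency settings in the Wrangler configuration also bound how many messages reach the browser at the same time.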

My main questions are:

Why does the queue retain messages without dispatching them to the consumer as they arrive?

Why does it release all messages simultaneously, rather than delivering each one as it arrives, given that we set Delivery delay = 0?

Additionally, I observed that the consumer worker invocation remains open until it reaches the maximum timeout of 15 minutes, even though PDF generation takes only 30 to 60 seconds and we call message.ack() to acknowledge the message. (This happens for some requests, not all.) This means the worker continues running unnecessarily until it hits the timeout and gets forcefully terminated. (Refer to the second image attached.)
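The thread does not establish why the invocation stays open. One thing that may be worth ruling out, purely as an assumption, is a browser that is still open when the handler returns. The sketch below always closes the browser before the caller acknowledges the message. `withBrowser` and `ClosableBrowser` are hypothetical names, and the launch function is passed in so the helper does not depend on any particular binding.

```typescript
// Hypothetical minimal shape: anything with an async close().
interface ClosableBrowser {
  close(): Promise<void>;
}

// Run `work` with a freshly launched browser and close it afterwards,
// whether `work` resolves or throws.
async function withBrowser<B extends ClosableBrowser, R>(
  launch: () => Promise<B>,
  work: (browser: B) => Promise<R>,
): Promise<R> {
  const browser = await launch();
  try {
    return await work(browser);
  } finally {
    await browser.close(); // release the session before returning
  }
}
```

A consumer could then do `await withBrowser(() => puppeteer.launch(env.MYBROWSER), generatePdf)` followed by `message.ack()`, where `MYBROWSER` and `generatePdf` are assumed names. If the invocation still stays open with this in place, the cause is likely elsewhere.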

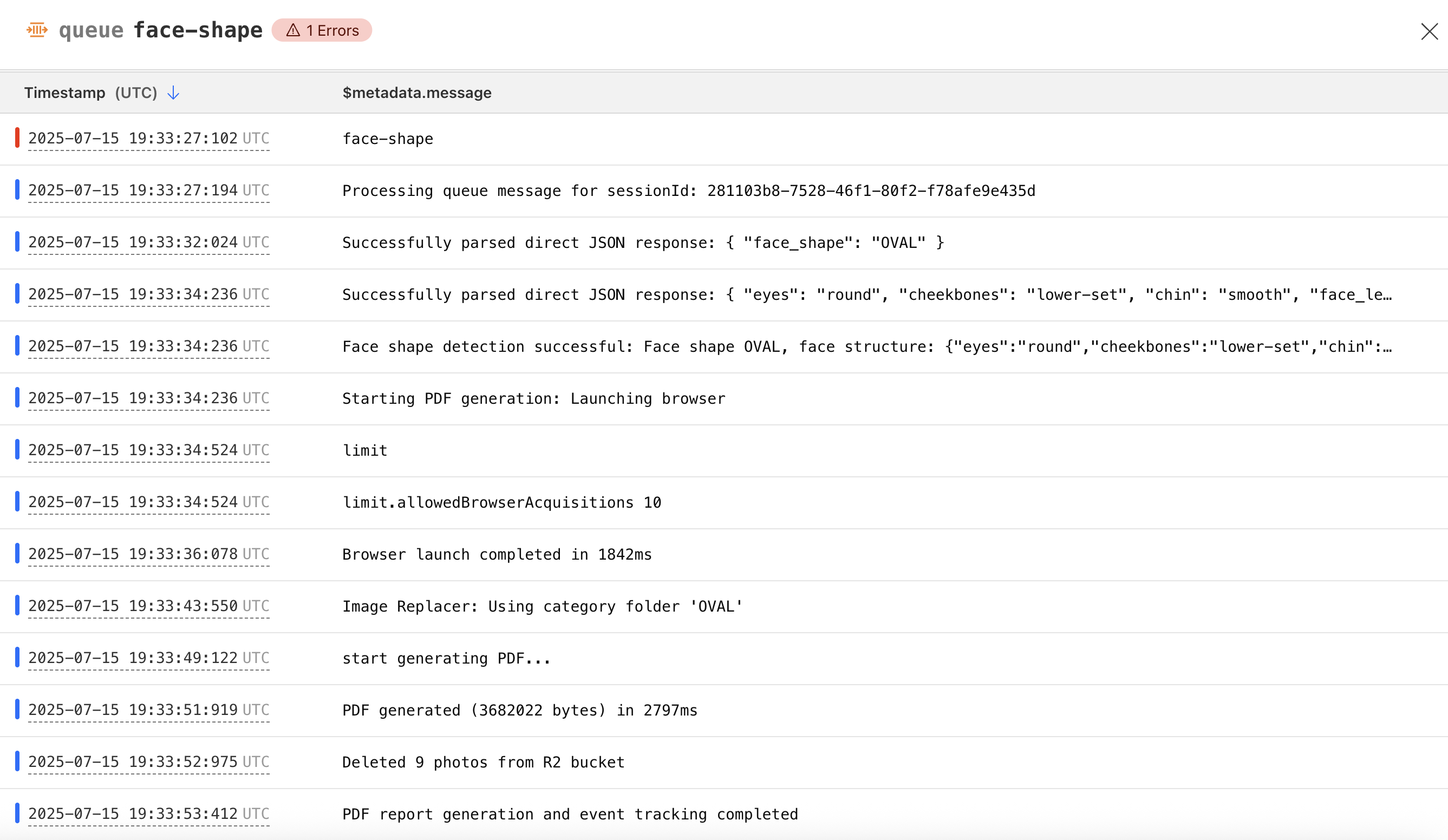

Please check the image below. It shows that all PDF files are successfully generated within 30 seconds. However, the worker continues to keep the request open for the full 15-minute timeout period. (This happened for some requests only.)

Notably, the final log entry, "PDF report generation and event tracking completed", is emitted immediately before the message.ack() line in the code. This confirms that the acknowledgment is triggered right after the final log, yet the request still remains open.

Look at the message count that the queue accumulates before it releases all messages at once.

Here is the concurrency graph of the queue.

Thanks @Kathy | Browser Rendering PM, can you take a look at my response above?

@Walshy 🙏