Duplicates uploaded to an album via the browser are stored on the filesystem anyway

Running on: UNRAID / DOCKER

Version: 1.135.3

DB: Postgres

Hi folks,

So I deployed a second copy of Immich and migrated several libraries into it (my Google Photos had better metadata, and I wanted to start with those files first). I used several methods to populate the second instance, including:

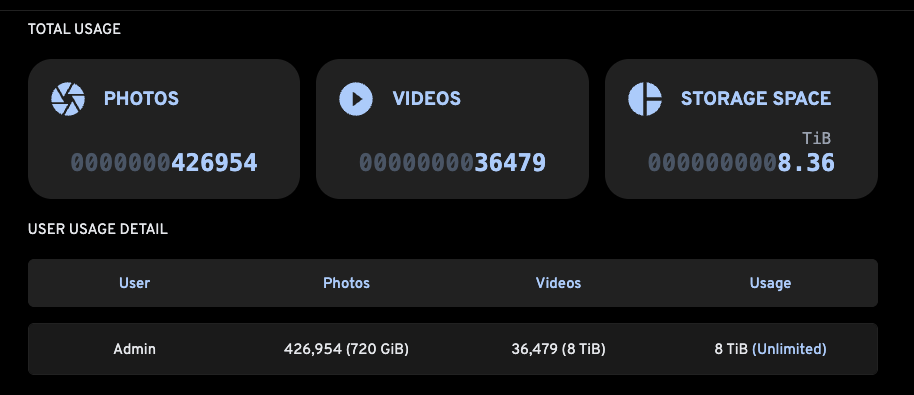

- Immich CLI

- Immich Go (which uploaded the content but failed to create most albums because their names contained a colon `:`)

- Uploading via the browser DIRECTLY into an album

Judging by the storage in use (up from 5 TB to 7 TB), Immich may be keeping the photos I uploaded via the browser that were ALREADY stored on the server: it detected the duplicate and added the existing on-server copy to the album, but the uploaded copy seems to have been kept as well. The extra ~2 TB is approximately what I would expect from those rejected duplicates.

Is it possible that Immich is failing to remove those duplicates? I know that when uploading through the browser, the file has to be fully uploaded before the checksum can be computed. Has anyone experienced this kind of behavior? Any idea how to solve it?
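One way to check whether the extra space really is byte-identical copies is to hash every file on disk and group the files by checksum. Below is a rough Python sketch, not an Immich tool. It assumes the duplicate check is checksum-based (SHA-1, as far as I understand Immich), and the default path `/mnt/user/immich/library` is hypothetical, so point it at your own upload location. It reads every file, so on several TB it will take a while.

```python
import hashlib
import os
import sys
from collections import defaultdict


def sha1_of_file(path, chunk_size=1024 * 1024):
    """Return the hex SHA-1 digest of a file, reading it in chunks to keep memory low."""
    digest = hashlib.sha1()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_duplicate_files(root):
    """Group regular files under `root` by SHA-1; keep only groups with 2+ paths."""
    by_checksum = defaultdict(list)
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            # Skip symlinks so the same file is not counted twice.
            if os.path.isfile(path) and not os.path.islink(path):
                by_checksum[sha1_of_file(path)].append(path)
    return {d: sorted(p) for d, p in by_checksum.items() if len(p) > 1}


def wasted_bytes(duplicates):
    """Bytes that would be freed by keeping only one copy in each duplicate group."""
    return sum(os.path.getsize(p[0]) * (len(p) - 1) for p in duplicates.values())


if __name__ == "__main__":
    # Hypothetical default path: replace with your own Immich upload/library folder.
    root = sys.argv[1] if len(sys.argv) > 1 else "/mnt/user/immich/library"
    dupes = find_duplicate_files(root)
    for digest, paths in dupes.items():
        print(digest)
        for p in paths:
            print("   ", p)
    print(f"{len(dupes)} duplicate groups, ~{wasted_bytes(dupes) / 1e12:.2f} TB redundant")
```

If rejected browser uploads are being left behind, they should show up as groups with two or more paths. An empty result would suggest the extra space is coming from somewhere else (for example thumbnails or transcoded videos).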

Thanks in advance.