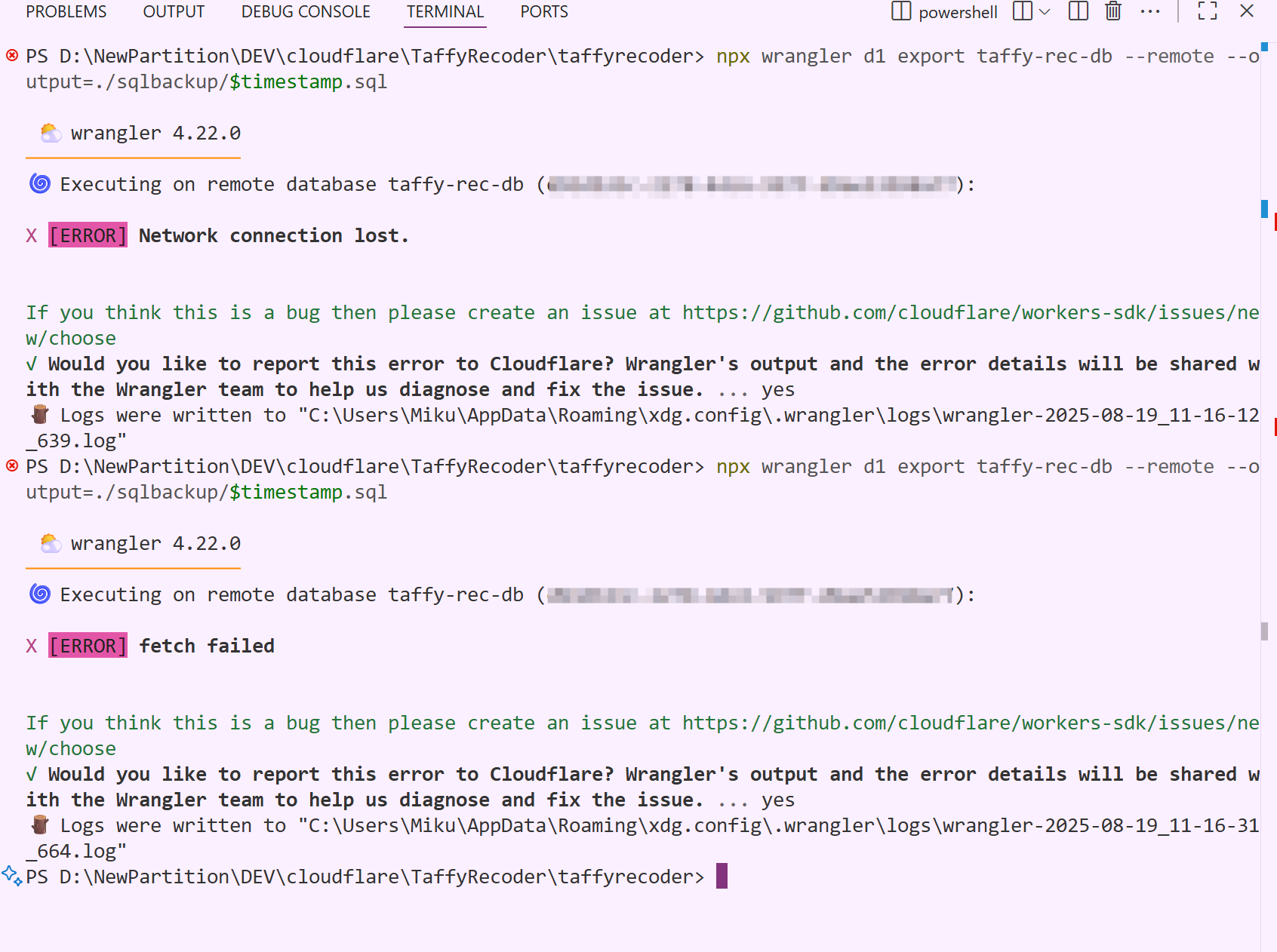

We are having tons of D1 issues this morning. Anyone else?

bind()

D1_ERROR

Home to Wrangler, the CLI for Cloudflare Workers® - cloudflare/workers-sdk

├── packages/
│   ├── worker/
│   └── db/
└── apps/
    ├── app1/
    └── app2/