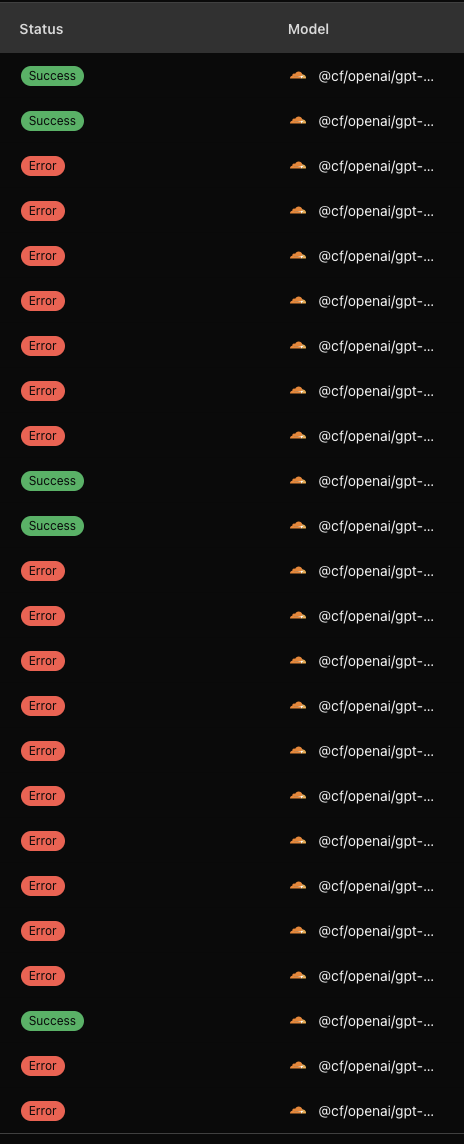

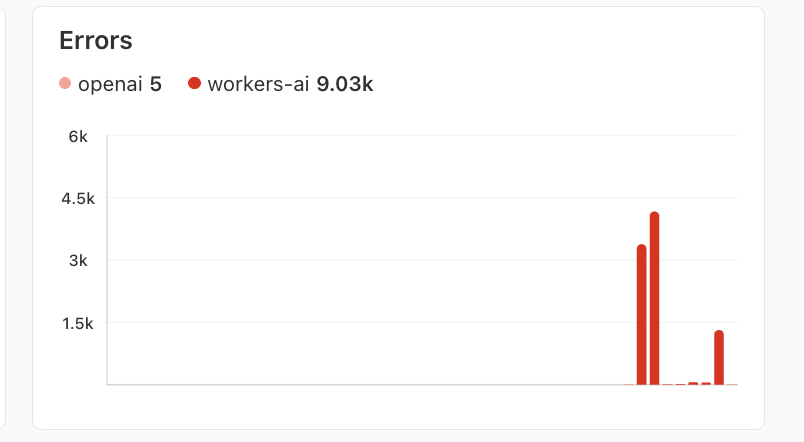

hey all, i'm getting 400s when using `@cf/openai/gpt-oss-120b`. is this a known thing? using the com

hey all, i'm getting 400s when using

should I be using responses instead? thanks.

@cf/openai/gpt-oss-120b. is this a known thing? using the completions API:should I be using responses instead? thanks.