AI models can run in a variety of inference engines or backends: vLLM, Python, and now our very own inference engine, Infire.

error executing callback "..." Error: Attempting to read .name on ScheduleJobAgent before it was set. The name can be set by explicitly calling .setName(name) on the stub, or by using routePartyKitRequest(). This is a known issue and will be fixed soon. Follow https://github.com/cloudflare/workerd/issues/2240 for more updates.

I put setName everywhere in the codebase to fix it, but with no success. Do you have any idea how to temporarily resolve it until the major updates come?

[...] `payload` and I could resolve the error. Although the error was resolved and no more exceptions were thrown, the batch job status showed `invalid parameters` when I checked it. So I decided to suspend my work on batch processing until a stable version is announced.

    const response = args.env.AI.run(
      '@cf/openai/gpt-oss-120b' as any,
      {
        requests: [input],
      },
      { queueRequest: true, gateway: { id: GATEWAY_ID } }
    );

`input` of type `Array` worked, but I wasn't able to run it when `input` was of type `string` (OpenAI-compatible JSON format).

`gpt-oss-20b` (`f55b85c8a963663b11036975203c63c0`)
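One possible workaround for the `string` vs. `Array` issue reported above is to normalize every input to the array form before queuing it. The sketch below is only an illustration: `ChatMessage`, `normalizeInput`, and `buildBatchPayload` are hypothetical names, and it assumes that a string input is plain prompt text and that the array form is a list of role/content messages, which the thread does not confirm.

```typescript
// Hypothetical message shape; the real model schema may differ.
type ChatMessage = { role: "system" | "user" | "assistant"; content: string };

// Hypothetical helper: accept a plain string or a message array and
// always return the array form, which the comment above reports as working.
function normalizeInput(input: string | ChatMessage[]): ChatMessage[] {
  if (typeof input === "string") {
    // Assumption: a bare string is treated as a single user message.
    return [{ role: "user", content: input }];
  }
  return input;
}

// Build a payload in the same `{ requests: [...] }` shape used in the snippet above.
function buildBatchPayload(
  inputs: Array<string | ChatMessage[]>
): { requests: ChatMessage[][] } {
  return { requests: inputs.map(normalizeInput) };
}
```

The result of `buildBatchPayload([input])` could then be passed as the second argument of the `AI.run` call shown earlier. Whether that resolves the `invalid parameters` status is untested here.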

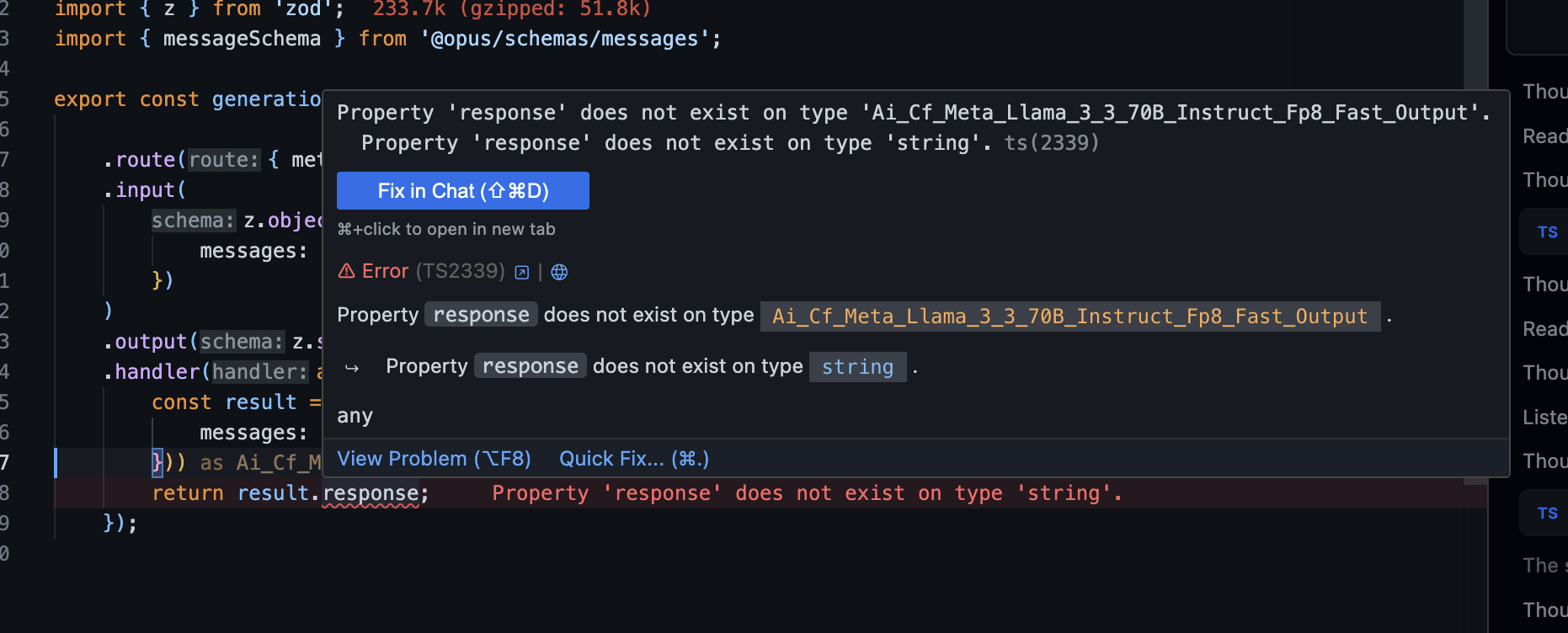

Wanted to try out a model and the response type for it is completely broken.

Daily usage (resets at 00:00 UTC) - Neurons used today: 32.48k/10k

You have exceeded the Neuron limit of 10,000 for today. Upgrade your plan

All limits reset daily at 00:00 UTC. If you exceed any one of the above limits, further operations will fail with an error.

User: sum of 2 + 2? -> Model: the answer is 4 -> User: now multiply the result by 2

At the last user input, the AI model should have the history of messages and know what the result [...] `token_input` and exponential cost
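The cost concern in the comment above can be illustrated with a small sketch. It assumes the model is stateless, so the client resends the whole message history on every user turn. `Turn`, `roughTokens`, and `cumulativeInputTokens` are hypothetical names, and counting words is only a crude stand-in for a real tokenizer.

```typescript
type Turn = { role: "user" | "assistant"; content: string };

// Crude stand-in for a tokenizer: counts whitespace-separated words.
function roughTokens(text: string): number {
  return text.trim().split(/\s+/).filter(Boolean).length;
}

// Total input tokens sent over a conversation when each user turn
// resends the entire history accumulated so far.
function cumulativeInputTokens(turns: Turn[]): number {
  let total = 0;
  let historySize = 0;
  for (const turn of turns) {
    historySize += roughTokens(turn.content);
    if (turn.role === "user") {
      // A request goes out at each user turn and carries the full history.
      total += historySize;
    }
  }
  return total;
}

// The conversation from the comment above.
const conversation: Turn[] = [
  { role: "user", content: "sum of 2 + 2?" },
  { role: "assistant", content: "the answer is 4" },
  { role: "user", content: "now multiply the result by 2" },
];

console.log(cumulativeInputTokens(conversation));
```

Under these assumptions, and with turns of similar length, the cumulative input grows roughly quadratically with the number of turns rather than exponentially, because each request repeats everything that came before it.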

`AiError: AiError: EngineCore encountered an issue. See stack trace (above) for the root cause. (50d9f590-dc24-4cf3-92b4-313011575443)`