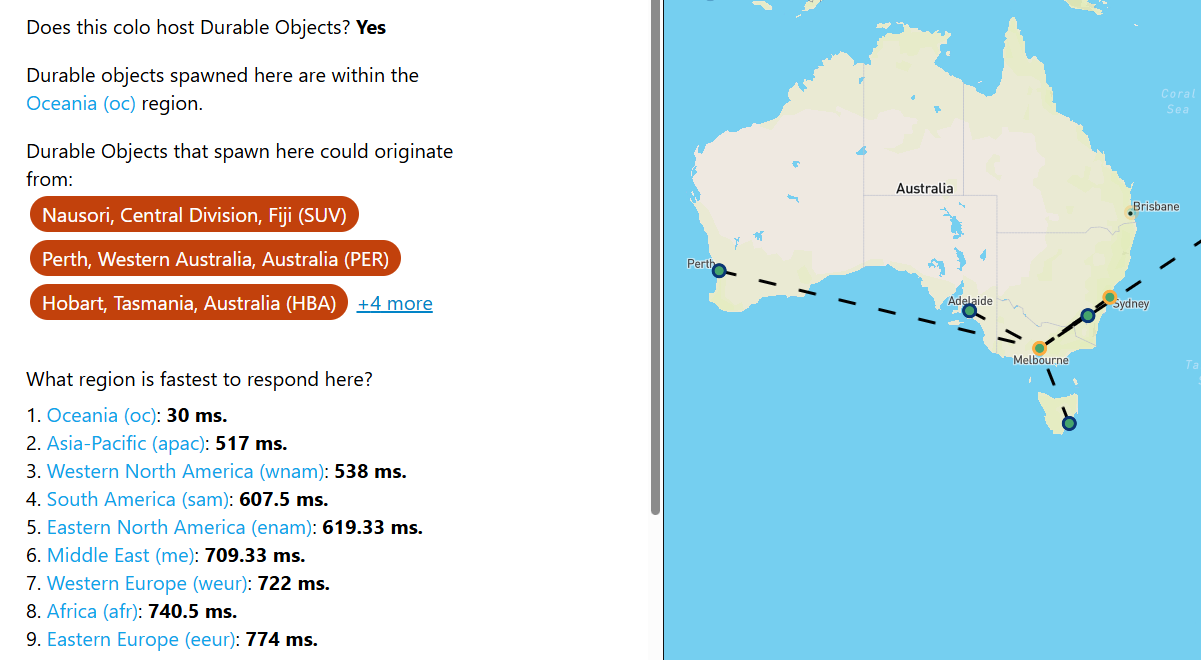

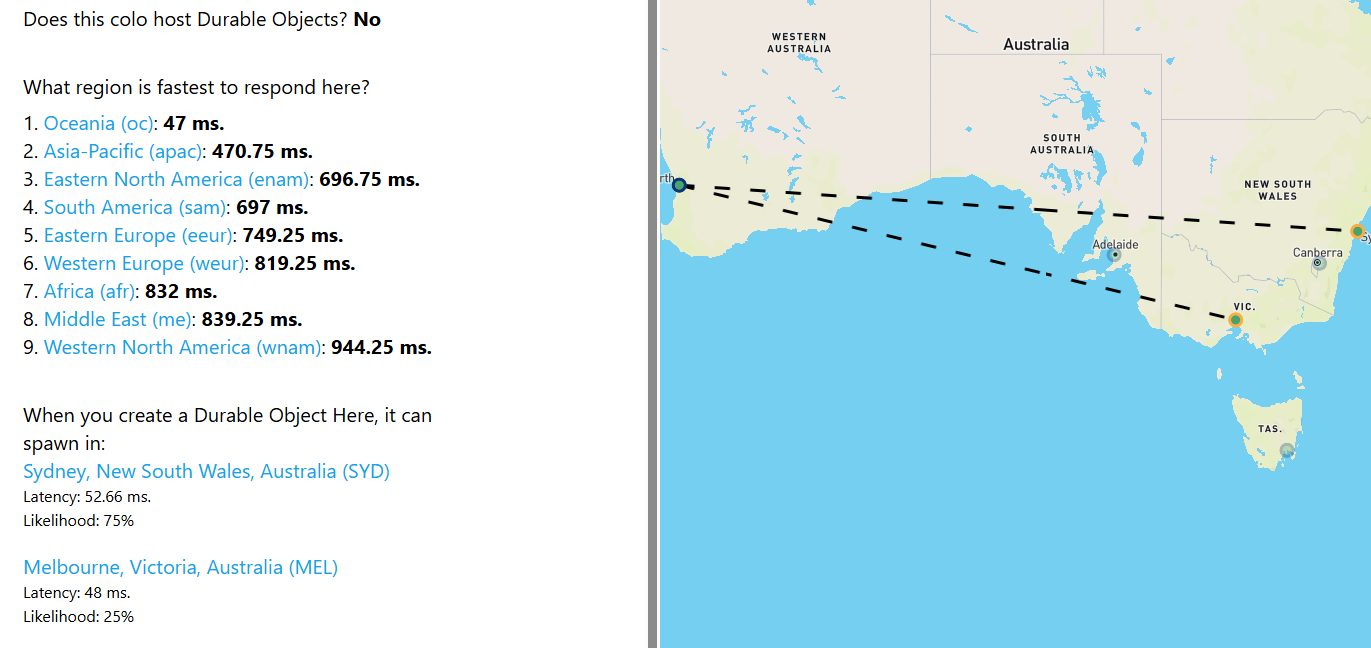

Yeah, the DO is in Mel but the request is being routed through Perth

hopefully someone has seen me complaining and is fixing this as we speak

It should be disposed automatically. Unsure about the max or idle timeout. Good idea to have a wrapper on the calling side that does retries with backoff
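
Rough sketch of what that calling-side wrapper could look like, assuming exponential backoff with jitter. `withRetry`, `backoffDelayMs`, `RetryOptions` and the default numbers are all made up for illustration; none of them come from the Durable Objects API, and the right limits depend on the timeouts we're still unsure about.

```typescript
// Hypothetical retry helper; names and defaults are illustrative assumptions.
interface RetryOptions {
  maxAttempts: number; // total tries, including the first one
  baseDelayMs: number; // upper bound on the delay after the first failure
  maxDelayMs: number;  // cap on any single delay
}

// Upper bound on the wait after failed attempt number `attempt` (0-based):
// doubles each time, capped at maxDelayMs.
function backoffDelayMs(attempt: number, opts: RetryOptions): number {
  return Math.min(opts.maxDelayMs, opts.baseDelayMs * Math.pow(2, attempt));
}

// Calls `fn` until it resolves or maxAttempts is reached, sleeping a random
// fraction of the backoff bound between tries ("full jitter").
async function withRetry<T>(
  fn: () => Promise<T>,
  opts: RetryOptions = { maxAttempts: 4, baseDelayMs: 100, maxDelayMs: 2000 },
): Promise<T> {
  let lastError: unknown;
  for (let attempt = 0; attempt < opts.maxAttempts; attempt++) {
    try {
      return await fn();
    } catch (err) {
      lastError = err;
      if (attempt === opts.maxAttempts - 1) break; // out of attempts
      const delay = Math.random() * backoffDelayMs(attempt, opts);
      await new Promise<void>((resolve) => setTimeout(resolve, delay));
    }
  }
  throw lastError;
}
```

On the calling side this would be something like `await withRetry(() => stub.fetch(request))`, where `stub` is whatever Durable Object stub the caller already holds. It retries on any thrown error, so it probably only makes sense for requests that are safe to repeat.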