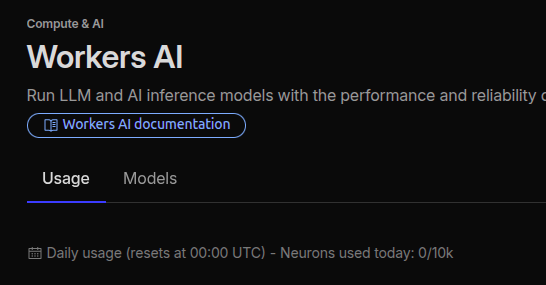

Hey @James, @carter and others. Apologies for the slow progress here; I understand and share the frustration.

We are absolutely working on fixing these issues, and we hope to have an update soon. We've gotten a little backed up on some work here, but should be getting to it shortly. To give you an idea of the kinds of things we've been working on:

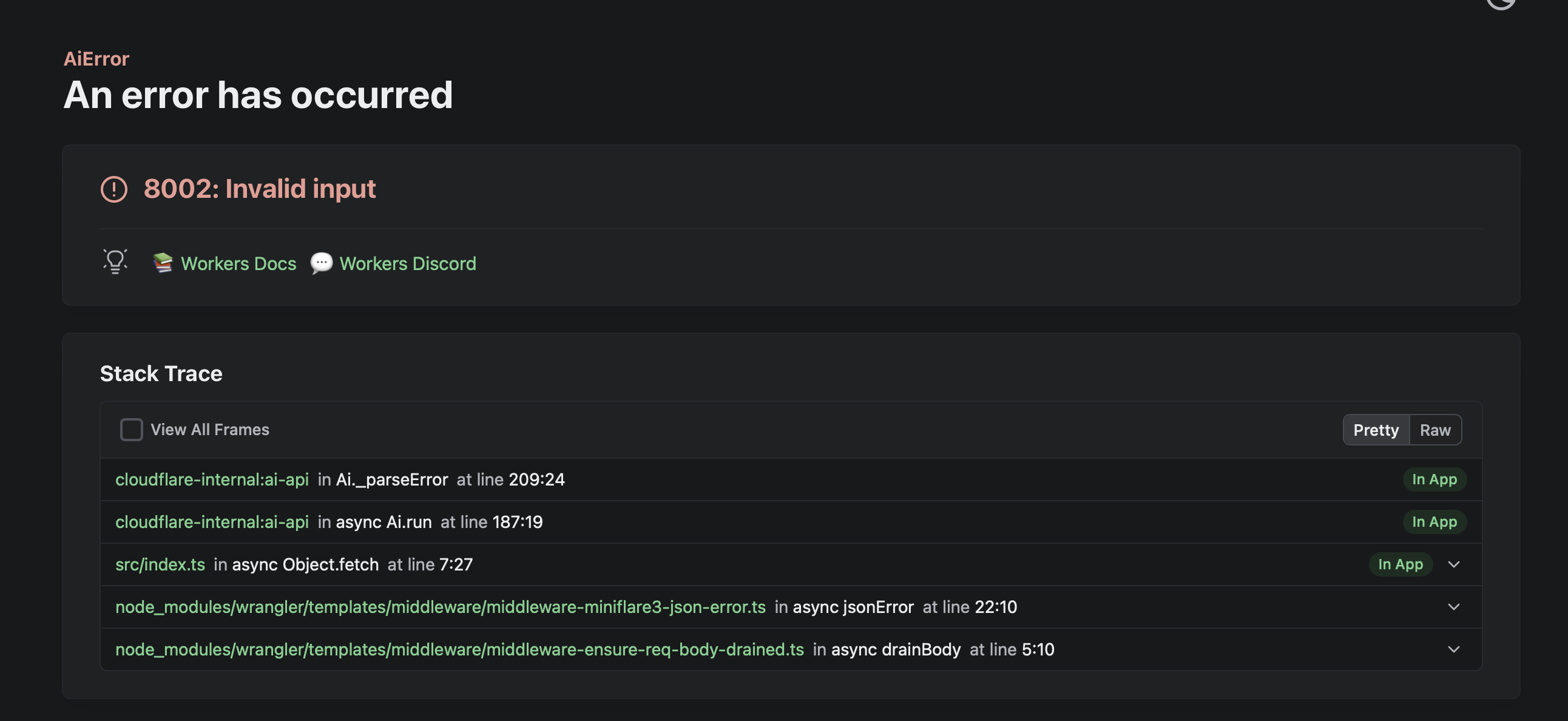

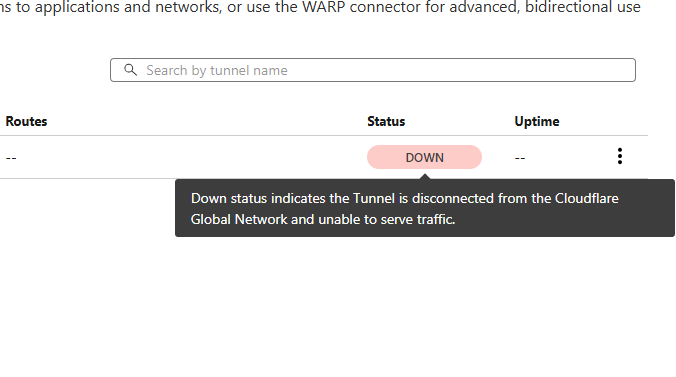

- Improved platform stability and performance: we've recently rolled out a lot of "invisible" changes to make the platform more stable under things like high load and to return successful responses more consistently. People will still occasionally see 3040 errors due to limited capacity, but these should be much less frequent than before.

- For the gpt-oss models, we've been waiting for some vLLM changes to land before pushing out more updates. In hindsight we shouldn't have let ourselves be blocked on this, and we're working to address it by seeing if we can build this ourselves internally. That's going to bring streaming, chat/completions support, and support for async requests, along with a number of bug fixes.

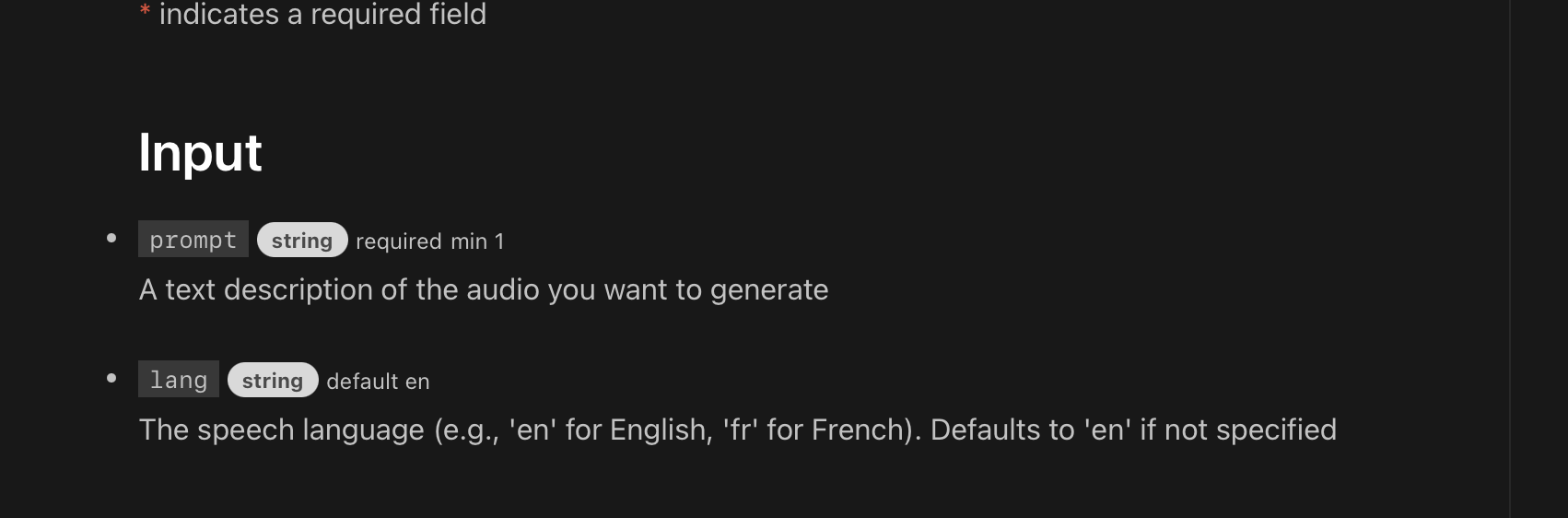

- Support for realtime audio models like deepgram/flux, and websocket support for those.

On the DX front -- all the feedback that gets shared here and on GitHub is absolutely heard internally. For example, @Kevin and I, both core members of the Workers AI team, check this regularly.
