Efficiently syncing large data sets from different websites

Hello everyone,

I’ve been reading the documentation and would love to get your thoughts on how to efficiently sync a large amount of data between our database and a third-party website using the Firecrawl API.

For example, the Parliament website publishes records of recent meetings between MPs. There are a few hundred thousand meetings that I’ve already crawled and stored in our database using a custom CSS crawler. Now that I’m migrating to Firecrawl, I don’t need to crawl all of them again—I just need to check the main list for recent meetings and sync any that we’ve missed....
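One way to frame the incremental sync described above, whichever crawler fetches the list page, is to compare the URLs on the main list against the URLs already stored and fetch only the difference. The following is a minimal sketch under that assumption; `find_missing_urls` and the idea that trailing slashes can be ignored are illustrative choices, not something taken from the Firecrawl documentation.

```python
from typing import Iterable, List, Set


def find_missing_urls(listed_urls: Iterable[str], known_urls: Set[str]) -> List[str]:
    """Return listed URLs that are not stored yet, in list order, without duplicates.

    Assumption: trailing slashes are not significant, and `known_urls`
    was stored with the same normalization.
    """
    seen: Set[str] = set()
    missing: List[str] = []
    for url in listed_urls:
        normalized = url.rstrip("/")
        if normalized in known_urls or normalized in seen:
            continue
        seen.add(normalized)
        missing.append(normalized)
    return missing
```

Only the URLs returned by this function would then need to be scraped, so the few hundred thousand existing records are never fetched again.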

Can we use extract to get information from a website while logged in?

Hello,

I would like to use /extract after logging in to the website. Is there a way to do that? I could share secrets (email/password, cookies, ...) or whatever else is needed.

Is it possible? Or is it planned to be?...

Latest version of Firecrawl Supabase error.

I'm running the latest version of Firecrawl. Calling the /extract endpoint creates a job, but trying to retrieve that job results in the following error:

```
{
  "success": false,...
```

Reduce length of crawl results to 5 MB instead of 10 MB

Hello, while trying to fetch crawl results from Firecrawl with `curl --request GET --url https://api.firecrawl.dev/v1/crawl/{id}`, my calling code limits me to fetching 5 MB at most. Is there a way to ask Firecrawl to limit the size of the results to less than 10 MB? Or to limit the number of results returned?...

429 Rate Limit on mapURL

Can anyone tell me what the rate limit is for this action? The docs don't say.

https://docs.firecrawl.dev/rate-limits#rate-limits

I'm getting a 429 error even when I space out my calls by ~5 seconds (so only max 12 per minute.)...
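Regardless of what the actual limit is, a common client-side way to cope with 429 responses is to retry with exponential backoff, honoring a `Retry-After` value when the server provides one. The sketch below is generic and makes no claim about Firecrawl's limits or headers; `request` is a hypothetical callable that wraps whatever HTTP call you make and returns the status code plus an optional retry delay.

```python
import time
from typing import Callable, Optional, Tuple


def call_with_backoff(
    request: Callable[[], Tuple[int, Optional[float]]],
    max_attempts: int = 5,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Call `request` until it stops returning 429 or attempts run out.

    `request` (hypothetical) returns (status_code, retry_after_seconds);
    retry_after_seconds is None when no Retry-After value was sent.
    Returns the last status code seen.
    """
    status = 429
    for attempt in range(max_attempts):
        status, retry_after = request()
        if status != 429:
            return status
        if attempt == max_attempts - 1:
            break  # no point sleeping after the final attempt
        # Prefer the server's hint; otherwise wait 1s, 2s, 4s, ...
        delay = retry_after if retry_after is not None else base_delay * (2 ** attempt)
        sleep(delay)
    return status
```

The `sleep` parameter is injectable so the retry logic can be tested without actually waiting.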

local Search

Hello. I'm trying the search endpoint on a local deployment, but I get "No search found" and several errors in the logs:

api-1 | 2025-02-08 10:43:57 warn [:]: You're bypassing authentication {}

api-1 | 2025-02-08 10:43:57 warn [:]: You're bypassing authentication {}

api-1 | 2025-02-08 10:45:20 warn [:]: You're bypassing authentication {}...

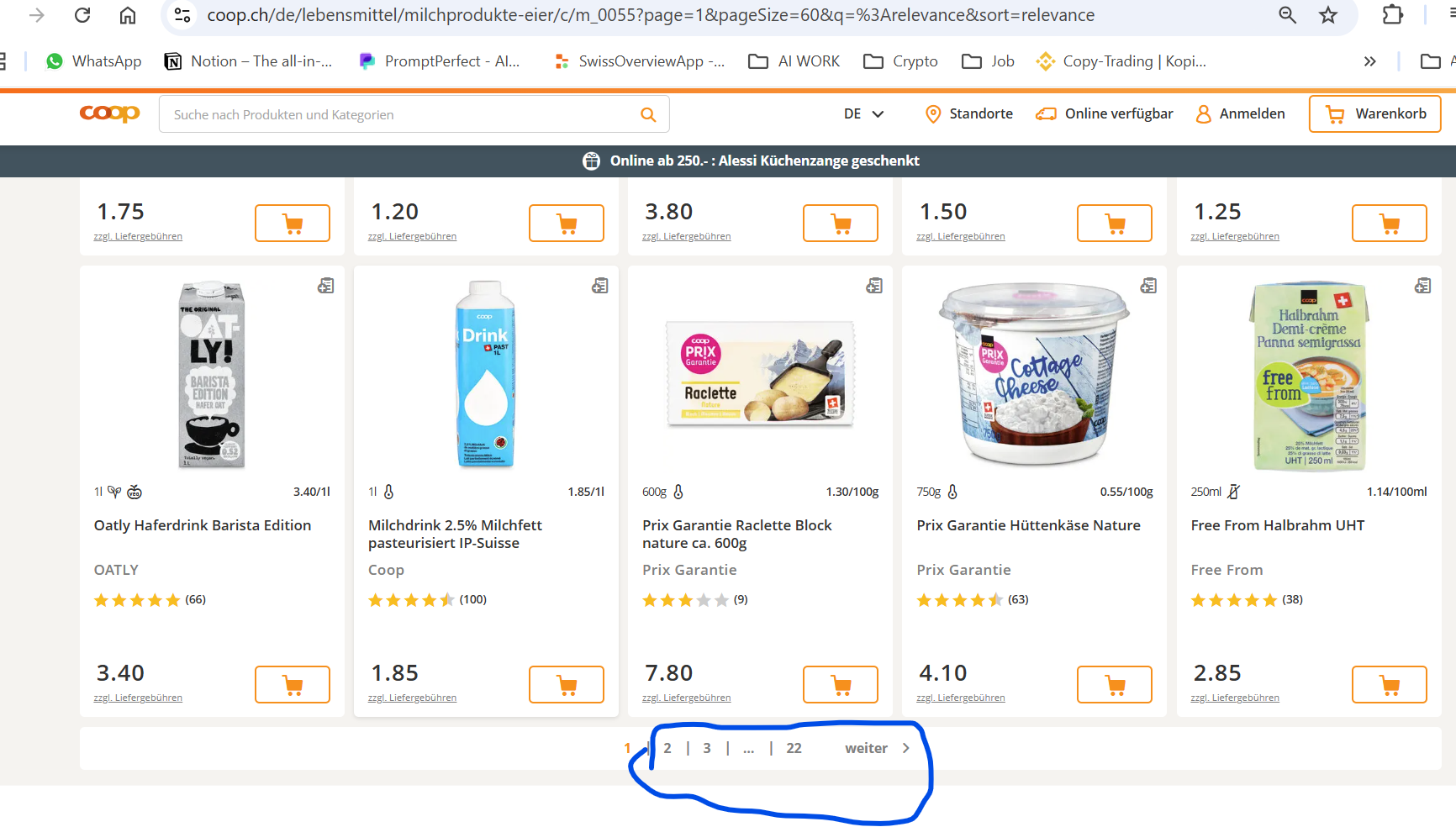

Need help: next page extraction

Hi

Unfortunately, I am not able to extract product information of the subsequent pages, just for the initial page. My assumption: all subsequent pages have the same URL? But who am I - I have no clue and no DEV experience 🙂 !

...

search endpoint

Hey, is the /search endpoint available in the self-hosted (Docker) image? Where can I find a better description of what is supported if I run it locally?

llms.txt generator - crawl single page instead of full domain

I've tried the llms.txt generator (https://llmstxt.firecrawl.dev/) to convert these docs (https://developer.wordpress.org/block-editor) and subpages to llms.txt but it is crawling the full subdomain https://developer.wordpress.org

Any way to only crawl single pages or subpages of the requested URL?...

Issues with the map endpoint

I'm having issues getting the map endpoint to return page counts close to the number I know exist for most domains. I know there are limits in the alpha around smart results, but it seems to significantly undercount even sitemaps. For example, I've been testing it on bard.edu, which I know contains over 9000 pages. The sitemap contains 4000 pages, but when I use the map endpoint as follows I only get about 2000 pages. Is this expected behavior? I'm seeing similar results across all domains I...
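To make a comparison like the one above reproducible, it can help to count the sitemap entries yourself and diff them against whatever the map endpoint returned. This is a small stdlib-only sketch; `sitemap_urls` and `missing_from_map` are hypothetical helper names, and fetching the sitemap and the map results is assumed to happen elsewhere.

```python
import xml.etree.ElementTree as ET
from typing import List, Set

# Namespace used by the sitemaps.org 0.9 schema.
SITEMAP_NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"


def sitemap_urls(xml_text: str) -> List[str]:
    """Return every <loc> value in a sitemap document.

    Note: for a sitemap index file, these are child sitemap URLs,
    which would each need to be fetched and parsed in turn.
    """
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.iter(f"{SITEMAP_NS}loc") if loc.text]


def missing_from_map(sitemap: List[str], mapped: List[str]) -> Set[str]:
    """URLs present in the sitemap but absent from the map results."""
    return set(sitemap) - set(mapped)
```

Listing the missing URLs, instead of only comparing totals, usually shows whether a whole section of the site or a nested sitemap is being skipped.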

Need Help: Scrape Actions doesn't seem to work / apply

I'm trying to use scroll actions to navigate through a website (Kickstarter), but they aren't working at all. I confirmed this by taking a screenshot, which shows that the page remains at the top even after multiple scroll-down actions. To rule out a site-specific issue, I tested other websites, but the problem persists.

Clicking and text input actions also don't work. However, I do receive an error when a clickable element isn't found, which suggests that my syntax is correct and that the checks are being applied.

Additionally, the example actions from the documentation don't work out of the box (did the website change?): https://docs.firecrawl.dev/launch-week#example...

i need help

{

"markdown": "# Verifica tu identidad\n\nMantén presionado el botón para confirmar que no eres un robot.",

"metadata": {

"url": "https://www.bodegaaurrera.com.mx/blocked?url=L2luaWNpbw==&uuid=2f3bf6aa-e1a6-11ef-b180-7f8d379c02a3&vid=&g=b",

"title": "Verify Your Identity",...

Errors with Scrape this Morning

I'm using scrape in n8n and also tried it at firecrawl.dev this morning, and it's timing out. Any ideas?

API issue

Hi mods and support,

I'm trying to extract data from a site. I used the code recommended by the documentation:

```python
...
```

402 payment required error despite having tons of credits left

We're getting intermittent 402 errors saying we have insufficient credits even though our dashboard shows tons of credits left on our paid plan. All our invoices are paid. Anyone else had this error?

Scrape forbidden: http://deseretfamilydentistry.com

Could you please investigate why this site cannot be scraped? Thank you!

Question about new extract service and the scrape service

The original scrape service can also perform LLM extraction (via the extract field), which is very similar to the newly launched extract service. What is the difference between the two? I would appreciate a detailed explanation of their uses, differences, and pricing. Thanks!

Extract embedded links

I want to extract all the embedded URLs from a website using Firecrawl. However, some URLs on the site only appear after dynamic interactions. Is there a solution for this issue?

Including URLs to Background Images in markdown

The markdown generated from the HTML does a good job of including <img> tags, but as you know, on a lot of websites a very high percentage of images are actually rendered using a <div> block with a CSS background style. Has anyone had any luck getting Firecrawl to extract those into the markdown?
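If the markdown output does not carry these images, one possible workaround is to post-process the page's HTML yourself and pull the `url(...)` values out of inline `background` / `background-image` styles. The sketch below assumes you already have the HTML as a string; `background_image_urls` is a hypothetical helper, it only captures the first `url(...)` per declaration, and it will not see backgrounds defined in external stylesheets.

```python
import html
import re
from typing import List
from urllib.parse import urljoin

# Matches `background: ... url(x)` and `background-image: url('x')`.
_BG_URL = re.compile(
    r"background(?:-image)?\s*:[^;\"'}]*?url\(\s*(['\"]?)(.*?)\1\s*\)",
    re.IGNORECASE,
)


def background_image_urls(html_text: str, base_url: str = "") -> List[str]:
    """Return absolute URLs of CSS background images found in the given HTML."""
    text = html.unescape(html_text)  # turn &quot; etc. back into plain quotes
    urls: List[str] = []
    for match in _BG_URL.finditer(text):
        raw = match.group(2).strip()
        if raw and not raw.startswith("data:"):  # skip inlined data URIs
            urls.append(urljoin(base_url, raw))
    return urls
```

The resulting list could then be appended to the markdown as ordinary image links if they are needed downstream.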