New build endless "Pending" state

Skip build on GitHub commit

Model initialization failed: CUDA driver initialization failed, you might not have a CUDA gpu.

Getting occasional OOM errors in serverless

ComfyUI + custom models & nodes

Bug when creating endpoints

16 GB GPU availability almost always low

Endpoint-specific API key for Runpod serverless endpoints

generation-config vllm


How do I add the --generation-config vllm parameter when using Quick Deploy? I want to be able to set custom top_k, top_p, and temperature values in my requests instead of being stuck with the model defaults.
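Independently of the launch flag, sampling settings can usually be sent per request. The sketch below uses only the Python standard library and assumes the Quick Deploy endpoint runs RunPod's vLLM worker, which, as far as I know, reads a `sampling_params` object from `input`. The endpoint ID and API key are placeholders, `build_runsync_request` and `send` are hypothetical helper names, and the field names should be checked against the worker version you deploy:

```python
import json
import urllib.request

RUNPOD_API_BASE = "https://api.runpod.ai/v2"  # serverless API base URL


def build_runsync_request(endpoint_id: str, api_key: str, prompt: str,
                          temperature: float = 0.7, top_p: float = 0.9,
                          top_k: int = 40) -> urllib.request.Request:
    """Build a /runsync request carrying per-request sampling settings.

    Assumption: the vLLM worker reads these from input["sampling_params"].
    """
    payload = {
        "input": {
            "prompt": prompt,
            "sampling_params": {
                "temperature": temperature,
                "top_p": top_p,
                "top_k": top_k,
            },
        }
    }
    return urllib.request.Request(
        url=f"{RUNPOD_API_BASE}/{endpoint_id}/runsync",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 120.0) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

Calling `send(build_runsync_request("your-endpoint-id", "your-api-key", "Hello"))` would block until the job finishes, which suits short generations. Values passed this way are generally meant to take precedence over the model's default generation config, but it is worth confirming that on the deployed worker.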

Thanks!

New UI New Issue again lol

ComfyUI looks for checkpoint files in /workspace instead of /runpod-volume
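On serverless workers a network volume is mounted at /runpod-volume, while on Pods it is mounted at /workspace, so paths written for one break on the other. One common workaround is to symlink the two at container start. This is a minimal sketch with a hypothetical helper name, assuming the image does not already contain a /workspace directory (if it does, the function leaves it untouched). ComfyUI's own extra_model_paths.yaml mechanism is an alternative that avoids the symlink:

```python
import os
from pathlib import Path


def link_volume_to_workspace(volume: str = "/runpod-volume",
                             workspace: str = "/workspace") -> bool:
    """Make `workspace` a symlink to the serverless network-volume mount.

    Returns True if a symlink was created, False if nothing was done
    (no volume attached, or `workspace` already exists as a path or link).
    """
    volume_path = Path(volume)
    workspace_path = Path(workspace)
    if not volume_path.is_dir():
        return False  # no network volume attached to this worker
    if workspace_path.exists() or workspace_path.is_symlink():
        return False  # never clobber an existing directory or link
    os.symlink(volume_path, workspace_path, target_is_directory=True)
    return True
```

Running this before ComfyUI starts (for example, from the worker's entrypoint) lets paths under /workspace resolve to the network volume.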

Unhealthy worker state in serverless endpoint: remote error: tls: bad record MAC

Job Dispatching Issue - Jobs Not Sent to Running Workers

Stuck at initializing

So serverless death

How to bake model checkpoints into Docker images for ComfyUI

Is it possible to set serverless endpoints to run for more than 24 hours?

...executionTimeout to a value higher than 24 hours when creating the endpoints. However, the jobs still exit exactly at the 24-hour mark. Is it possible to increase this limit, and if so, how?

New load balancer serverless endpoint type questions

Mounting network storage on a ComfyUI serverless endpoint

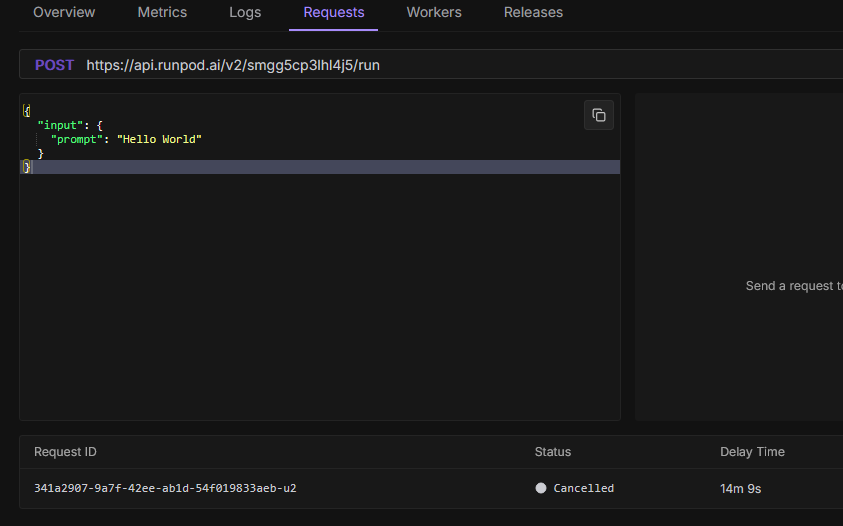

Testing the default "hello world" POST request: no response after 10 minutes

openai/gpt-oss-20b

runpod/worker-v1-vllm:v2.8.0gptoss-cuda12.8.1
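When a test request hangs, submitting it asynchronously and polling its status shows where it stalls: a job that never leaves IN_QUEUE suggests no worker picked it up, while one stuck in IN_PROGRESS points at the worker itself. This stdlib-only sketch assumes RunPod's /run and /status/{id} routes and the status names listed in the code. The helper names, endpoint ID, API key, and the {"prompt": ...} input shape are hypothetical placeholders. The fetch, sleep, and clock functions are injectable so the logic can be exercised without a network:

```python
import json
import time
import urllib.request
from typing import Callable, List, Optional, Tuple

API_BASE = "https://api.runpod.ai/v2"  # RunPod serverless API base URL
# Job states after which polling stops (check RunPod's docs for the full list).
TERMINAL_STATES = {"COMPLETED", "FAILED", "CANCELLED", "TIMED_OUT"}


def http_json(url: str, api_key: str, payload: Optional[dict] = None) -> dict:
    """POST `payload` as JSON, or GET when payload is None; decode the reply."""
    data = None if payload is None else json.dumps(payload).encode("utf-8")
    request = urllib.request.Request(
        url,
        data=data,
        method="GET" if payload is None else "POST",
        headers={"Authorization": f"Bearer {api_key}",
                 "Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))


def run_and_wait(endpoint_id: str, api_key: str, job_input: dict,
                 deadline_s: float = 600.0, poll_s: float = 5.0,
                 fetch: Callable[..., dict] = http_json,
                 sleep: Callable[[float], None] = time.sleep,
                 clock: Callable[[], float] = time.monotonic,
                 ) -> Tuple[dict, List[Optional[str]]]:
    """Submit a job via /run, then poll /status/{id} until done or deadline.

    Returns the last status payload plus every status seen along the way.
    """
    job = fetch(f"{API_BASE}/{endpoint_id}/run", api_key, {"input": job_input})
    job_id = job["id"]
    start = clock()
    seen: List[Optional[str]] = []
    while True:
        status = fetch(f"{API_BASE}/{endpoint_id}/status/{job_id}", api_key)
        seen.append(status.get("status"))
        if status.get("status") in TERMINAL_STATES:
            return status, seen
        if clock() - start >= deadline_s:
            return status, seen  # gave up; the last state shows where it stalled
        sleep(poll_s)
```

For example, `run_and_wait("your-endpoint-id", "your-api-key", {"prompt": "hello world"})` polls for up to ten minutes, the same window as in the report above, and returns the status history alongside the final payload.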