How I run ipynb on VS Code

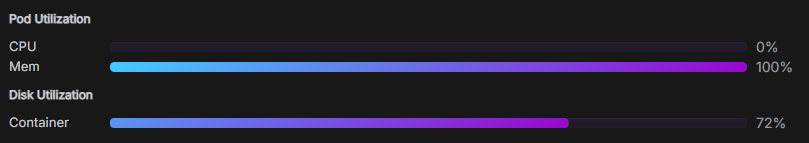

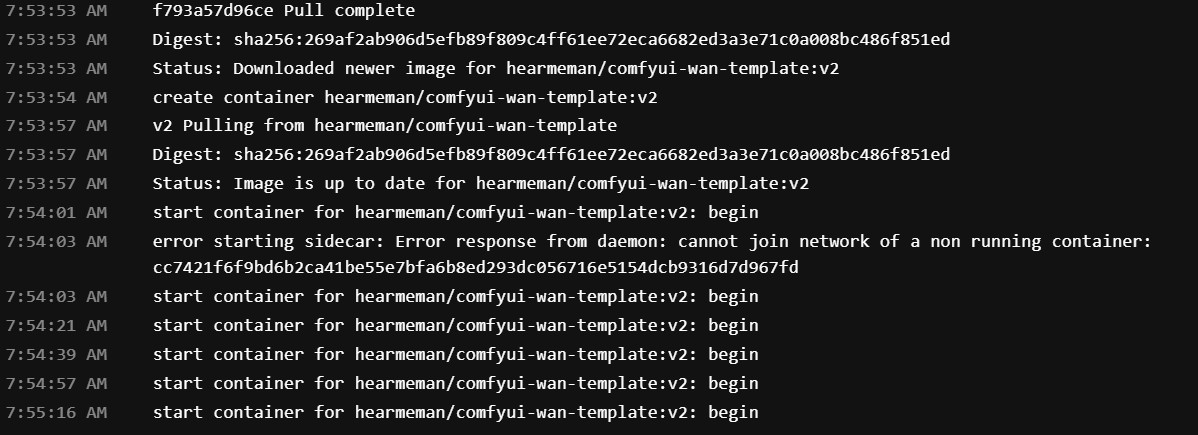

The pod is crashing while rendering a video in Hunyuan

Connect to Pod via Cloudflare

Auto-downloading straight to local

Is it possible to run a gRPC service on a pod?

Help me understand a productive workflow

I am ssh into one pod and my drives are on a different pod. I only have one pod. UG

(__ \ ( \ | |...

Unable to SSH into a pod via open TCP port

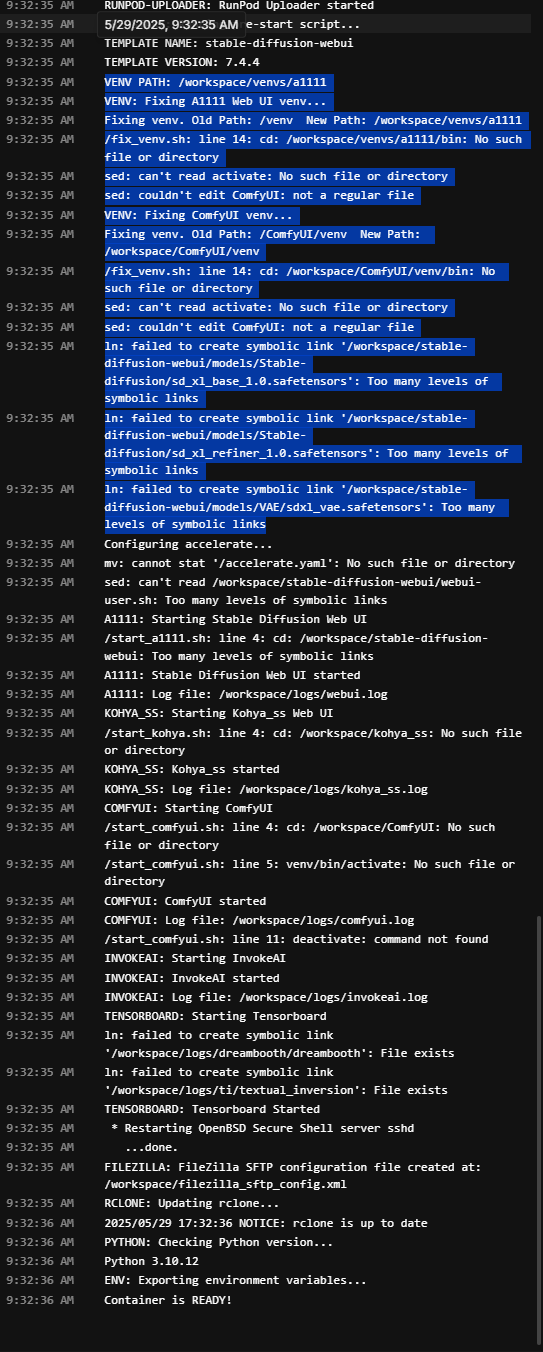

Unable to start templates

Use OneDrive or Google Drive as workspace

Stable Diffusion won't start with network storage.

Ubuntu archive 403

Network peering between VPC and RunPod network

Whitelist IP for AWS RDS Connection

Is it just me, or is the JupyterLab connect button no longer passing the token?

Error loading ASGI app. Could not import module "main".

Ultimate Stable Diffusion WebUI pod weirdness

Multi-Instance GPU Support on RunPod

nvidia-smi - but it didn’t work. Instead, it threw an ‘insufficient permissions’ error.

This appears to be due to how virtualisation is implemented, providing a lack of hardware-level control within a pod....B200

RunPod API questions

gpuTypes query -> passing to the POST https://rest.runpod.io/v1/pods request body the GPU count from my local UI (not more than the maxGpuCountCommunityCloud I parsed for the specific GPU type) and the GPU type ID...