Hi, if you have already trained you can skip that part. Please watch this video: https://www.youtube.com/watch?v=kIyqAdd_i10

YouTube: SECourses - Software Engineering Courses

Our Discord: https://discord.gg/HbqgGaZVmr. This is the video where you will learn how to use Google Colab for Stable Diffusion. If I have been of assistance to you and you would like to show your support for my work, please consider becoming a patron on https://www.patreon.com/SECourses

You can't do DreamBooth, but you can do LoRA in the DreamBooth extension.

Also, you can do textual inversion.

Very weird. And there is no error message, right?

I’m creating a second model, so I don’t know why the indentation is an issue since I know it works and never changed it

(I followed your video to a T and I’m not sure where the difference is from the text)

@Furkan Gözükara SECourses I have 2400 regularization images. What should the class images per instance be?

Copy-paste that part from the original link; that should fix it.

I suggest 50 per instance.

How many training images do you have?

10

OK, I think you should still set 50.

So it will use 500 class images (10 training images x 50 per instance).

perfect
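
A quick sanity check of the numbers in this exchange, as a minimal sketch. The function name `total_class_images` is made up for illustration and is not part of the DreamBooth extension; it only assumes that the total is training images multiplied by class images per instance, as described above.

```python
def total_class_images(training_images: int, class_images_per_instance: int) -> int:
    """Class (regularization) images used = training images x class images per instance."""
    return training_images * class_images_per_instance


training_images = 10   # number of training images, from the chat
per_instance = 50      # suggested class images per instance, from the chat
available = 2400       # regularization images on disk, from the chat

needed = total_class_images(training_images, per_instance)
print(f"needed={needed}, available={available}, enough={needed <= available}")
```

With 2400 regularization images available, the 500 needed here are well covered.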

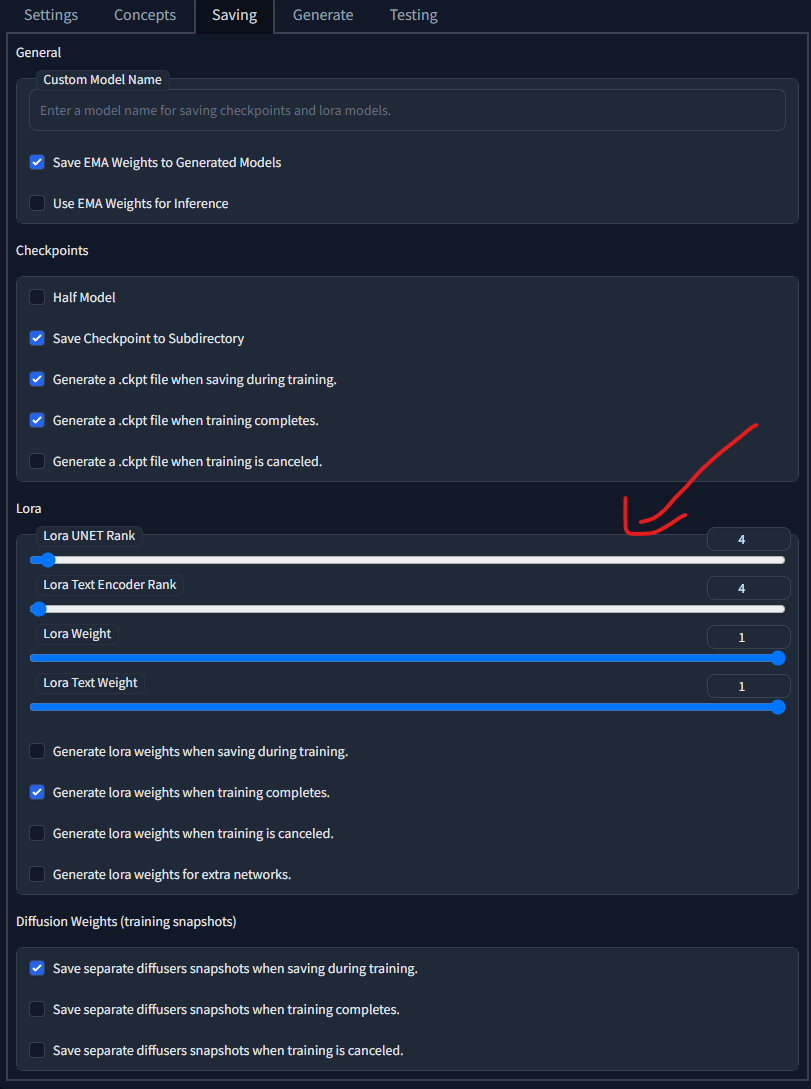

Finally before I start training

I am using LoRA, so I need the best settings for these sliders.

Unfortunately I have yet to run experiments for them,

but Kohya uses 128 and 128,

I believe.

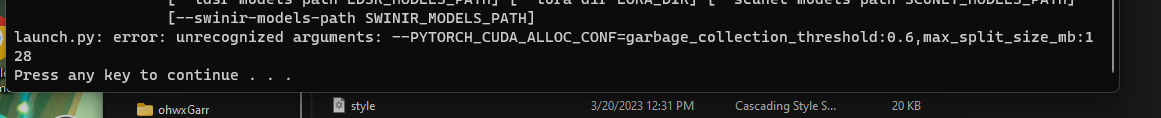

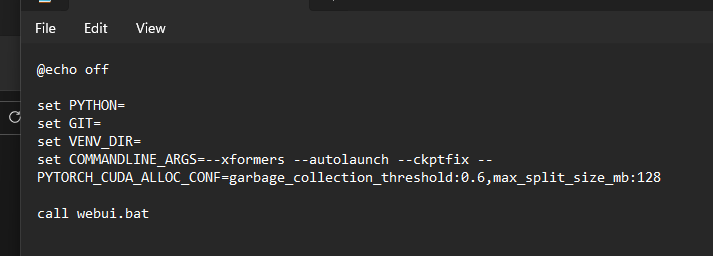

Another tip for lower VRAM, under command line arguments:

I assume the PYTORCH_CUDA_ALLOC_CONF setting needs to be added before starting SD and not while it is running XD

Yes. This is for people having low-VRAM problems.
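
To illustrate the "before starting SD" point, here is a minimal Python sketch. The exact values recommended in this chat are only in the screenshot, so the numbers below are placeholders, and the helper `build_alloc_conf` is hypothetical. The sketch assumes PyTorch's documented `option:value,option:value` format for `PYTORCH_CUDA_ALLOC_CONF`; the variable has to be in the environment before PyTorch initializes CUDA, which is why it goes in the launch script and not in the running UI.

```python
import os


def build_alloc_conf(options):
    """Join allocator options into the 'name:value,name:value' form PyTorch expects."""
    return ",".join(f"{name}:{value}" for name, value in options.items())


# Placeholder values for illustration only; tune them for your own GPU.
example_options = {
    "garbage_collection_threshold": 0.6,
    "max_split_size_mb": 128,
}

# Must be set before torch initializes CUDA (i.e. before the web UI starts).
os.environ["PYTORCH_CUDA_ALLOC_CONF"] = build_alloc_conf(example_options)
print(os.environ["PYTORCH_CUDA_ALLOC_CONF"])
```

In a Windows batch launcher the equivalent would be a `set PYTORCH_CUDA_ALLOC_CONF=...` line placed before the line that starts the UI.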

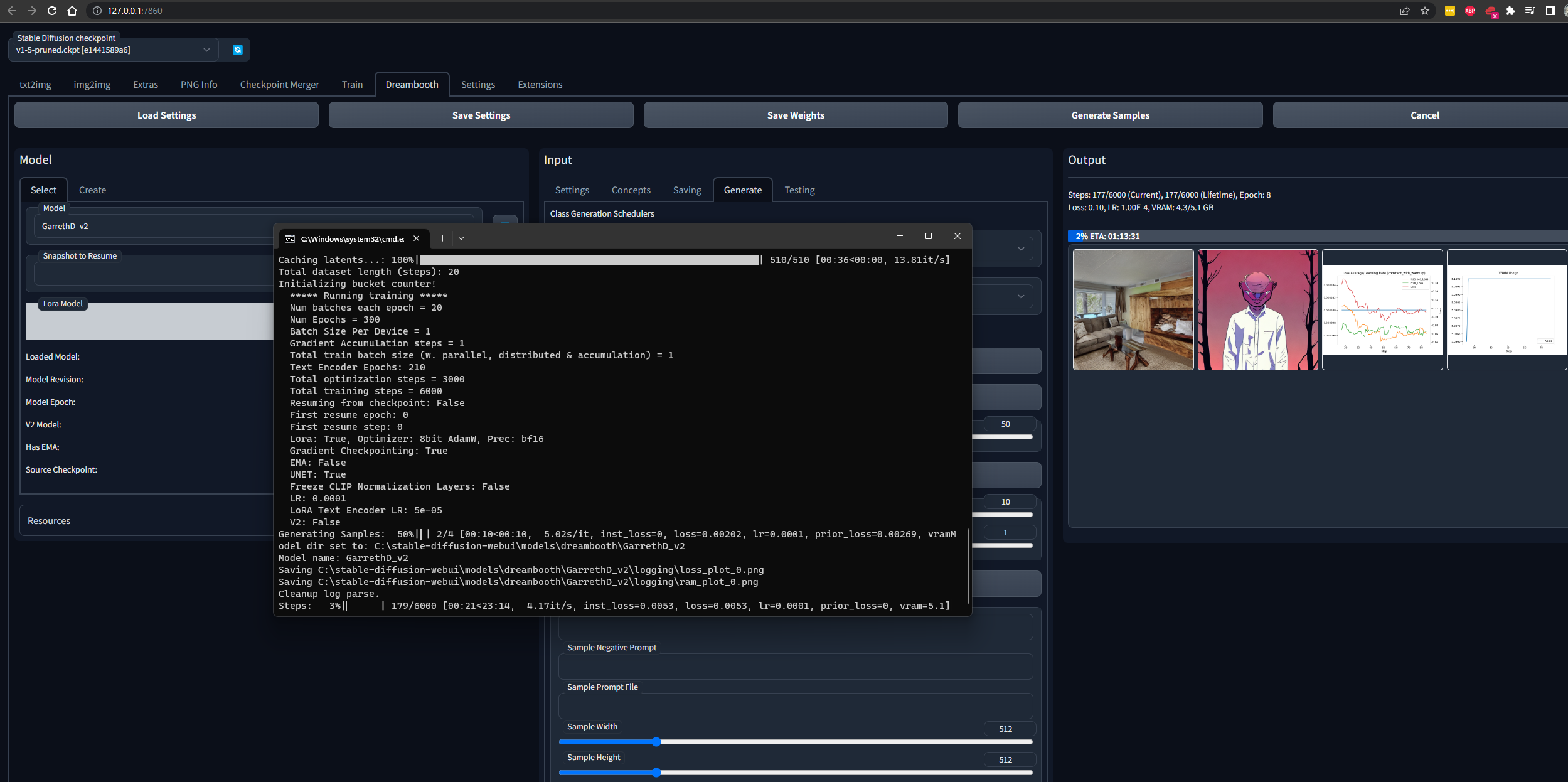

I am now following your guide to the letter, including the images. I will train styles later.

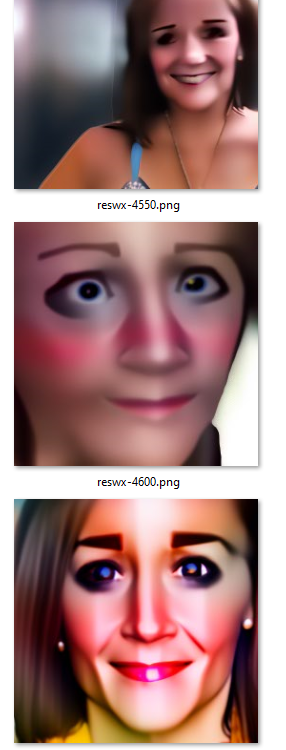

These images that are being sampled do not seem right

I am expecting images of me or is this not the case?

The face and hair look kinda like me... Am I being hopeful? XD

I am getting really cool, accurate-ish looking images.

great

I added Spanish subtitles. Can anyone who knows Spanish test them? https://www.youtube.com/watch?v=m-UVVY_syP0

YouTube: SECourses - Software Engineering Courses

RunPod: https://bit.ly/RunPodIO. Discord: https://bit.ly/SECoursesDiscord. Get your own #Midjourney level model for free via Style Teaching. If I have been of assistance to you and you would like to show your support for my work, please consider becoming a patron on https://www.patreon.com/SECourses

Now translating to French.

I let my embed run all night and it is still a monster! This is tough.

Could be overtraining. You are following this video, right? https://www.youtube.com/watch?v=dNOpWt-epdQ

YouTube: SECourses - Software Engineering Courses

Our Discord: https://discord.gg/HbqgGaZVmr. Grand Master tutorial for Textual Inversion / Text Embeddings. If I have been of assistance to you and you would like to show your support for my work, please consider becoming a patron on https://www.patreon.com/SECourses

@Furkan Gözükara SECourses The command you gave does not seem to work.

Which one?

Also, with LoRA my face does not look nearly as good as the images you generate. I will now try normal DreamBooth without LoRA and then try the XYZ picking.

You need to put `set` in front of it.

DreamBooth is better than LoRA.

I could not find an embedding video from you! Hooray I am saved. Thank you!

Hi, I'm having issues setting up xformers with the A5000 GPU in SD DreamBooth.

Whenever I click Generate Class Images I get:

Exception generating concepts: No operator found for memory_efficient_attention_forward with inputs:
  query : shape=(1, 2, 1, 40) (torch.float32)
  key : shape=(1, 2, 1, 40) (torch.float32)
  value : shape=(1, 2, 1, 40) (torch.float32)
  attn_bias :
  p : 0.0
flshattF is not supported because:
  xFormers wasn't build with CUDA support
  dtype=torch.float32 (supported: {torch.bfloat16, torch.float16})
tritonflashattF is not supported because:
  xFormers wasn't build with CUDA support
  dtype=torch.float32 (supported: {torch.bfloat16, torch.float16})
  triton is not available
  requires A100 GPU
cutlassF is not supported because:
  xFormers wasn't build with CUDA support
smallkF is not supported because:
  xFormers wasn't build with CUDA support
  max(query.shape[-1] != value.shape[-1]) > 32
  unsupported embed per head: 40

I've set everything up based on your YouTube tutorial here: https://www.youtube.com/watch?v=Bdl-jWR3Ukc&t=158s

Generate the class images with txt2img instead.

It is much faster and better.
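
For anyone hitting the same "xFormers wasn't build with CUDA support" message, a small diagnostic sketch may help narrow it down. This is only an assumption about what is worth checking (whether `torch` and `xformers` are installed in the active environment and whether that `torch` build sees CUDA); the function name `describe_environment` is hypothetical and the script does not fix anything by itself.

```python
import importlib.util


def describe_environment():
    """Report whether torch/xformers are importable and whether torch sees CUDA."""
    report = {
        "torch_installed": importlib.util.find_spec("torch") is not None,
        "xformers_installed": importlib.util.find_spec("xformers") is not None,
        "cuda_available": None,      # stays None when torch is not installed
        "torch_cuda_version": None,  # None also means a CPU-only torch build
    }
    if report["torch_installed"]:
        import torch

        report["cuda_available"] = torch.cuda.is_available()
        report["torch_cuda_version"] = torch.version.cuda
    return report


report = describe_environment()
for name, value in report.items():
    print(f"{name}: {value}")
```

Run it with the same Python environment the web UI uses. If xformers is installed, `python -m xformers.info` should additionally list which attention operators that build supports.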

With or without the --

I think like this. Can you test it?