I think there's a performance issue. Do you want me to file a formal ticket on GitHub or just describe it briefly here?

Uh oh. You can send it here, then I’ll add it to GH and credit you there

Or whichever way you prefer

I need to add formalizing contributions to Flareutils to my ever-growing todo list…

Basically the cacheTTL you use for the fronting cache is the same as the cacheTTL for KV. This means that every time you read from KV it's a cold read whereas KV takes great pains to make sure that you never see cold reads for frequently accessed keys.

The latest version of the PR isn't building, but I'm working on docs providing guidance:

https://vlovich-kv-advanced-guide.cloudflare-docs-7ou.pages.dev/workers/learning/advanced-kv-guide/#avoid-hand-rolling-cache-in-front-of-workers-kv

I think a good change to BetterKV that follows the recommendation while cutting costs is:

- Set the cache TTL in the fronting cache to ~40-50 seconds (regardless of the cacheTTL the user is requesting from KV).

- Have 1% of cache hits do a KV.get anyway in a wait until (+ update your cache with the updated value & extend the 40-50 second deadline out further).
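
A minimal sketch of the two bullets above, not BetterKV's actual code. The `KVLike` interface, the `FrontedKV` class, and the `decide` helper are hypothetical names, and a `Map` stands in for the Cache API so the example is self-contained. The 45-second default sits in the suggested 40-50 second range.

```typescript
// Hypothetical stand-in types; not BetterKV's or Workers' real interfaces.
interface KVLike {
  get(key: string, options: { cacheTtl: number }): Promise<string | null>;
}

interface Entry {
  value: string;
  expiresAt: number; // epoch milliseconds
}

type Decision = "miss" | "hit" | "hit-and-refresh";

// Pure decision step: serve from the fronting cache, and on a small
// fraction of hits also schedule a background KV read.
function decide(
  entry: Entry | undefined,
  nowMs: number,
  rand: number,
  sampleRate: number,
): Decision {
  if (!entry || entry.expiresAt <= nowMs) return "miss";
  return rand < sampleRate ? "hit-and-refresh" : "hit";
}

class FrontedKV {
  private cache = new Map<string, Entry>(); // stand-in for the Cache API
  private kv: KVLike;
  private waitUntil: (p: Promise<unknown>) => void;
  private frontTtlSec: number;
  private sampleRate: number;

  constructor(
    kv: KVLike,
    waitUntil: (p: Promise<unknown>) => void,
    frontTtlSec = 45, // short, independent of the cacheTtl passed to KV
    sampleRate = 0.01, // 1% of hits refresh in the background
  ) {
    this.kv = kv;
    this.waitUntil = waitUntil;
    this.frontTtlSec = frontTtlSec;
    this.sampleRate = sampleRate;
  }

  async get(key: string, kvCacheTtl: number): Promise<string | null> {
    const entry = this.cache.get(key);
    const decision = decide(entry, Date.now(), Math.random(), this.sampleRate);
    if (entry === undefined || decision === "miss") {
      return this.refresh(key, kvCacheTtl);
    }
    if (decision === "hit-and-refresh") {
      // Sampled hit: refresh in the background, still answer from cache.
      this.waitUntil(this.refresh(key, kvCacheTtl));
    }
    return entry.value;
  }

  // Read through to KV and push the fronting-cache deadline out again.
  private async refresh(key: string, kvCacheTtl: number): Promise<string | null> {
    const value = await this.kv.get(key, { cacheTtl: kvCacheTtl });
    if (value === null) {
      this.cache.delete(key);
    } else {
      const expiresAt = Date.now() + this.frontTtlSec * 1000;
      this.cache.set(key, { value, expiresAt });
    }
    return value;
  }
}
```

In a Worker, `waitUntil` would presumably be `ctx.waitUntil.bind(ctx)`, so the sampled refresh finishes after the response has been returned.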

This guide provides best practices for optimizing Workers KV latency as well as covering advanced tricks that our customers sometimes employ for their …

Also, please add a note saying that the fronting cache is incompatible with future KV improvements that will allow reducing the refreshTTL below 60s.

This change would achieve both goals.

Note that this change also saves you more money. Because you're sampling at 1%, you will have a small number of requests that end up going through to KV & updating the value you have cached. The current behavior has a stampeding herd every 1 minute where ALL requests suddenly go to KV.

You can make the sampling threshold and the fronting cache duration tunable but cap the fronting cache duration at 50-55s at most. I would also make sure to call out in the docs that customers should set long cacheTTL as described in that document.
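
One way the tunable-but-capped settings could look, as a sketch only. `FrontingOptions`, `resolveOptions`, and the option names are hypothetical and not part of BetterKV. The 55-second cap comes from the message above, while the 45-second and 1% defaults and the 1-second floor are assumptions.

```typescript
// Hypothetical option shape; not an existing BetterKV API.
interface FrontingOptions {
  frontTtlSec?: number; // how long the fronting cache may serve a value
  sampleRate?: number; // fraction of cache hits that also read from KV
}

const MAX_FRONT_TTL_SEC = 55; // upper bound suggested in the discussion
const MIN_FRONT_TTL_SEC = 1; // assumption: reject zero or negative TTLs

function resolveOptions(opts: FrontingOptions = {}): Required<FrontingOptions> {
  // Clamp the fronting TTL so it can never reach KV's 60s refresh window.
  const frontTtlSec = Math.min(
    Math.max(opts.frontTtlSec ?? 45, MIN_FRONT_TTL_SEC),
    MAX_FRONT_TTL_SEC,
  );
  // A sample rate is a probability, so keep it within [0, 1].
  const sampleRate = Math.min(Math.max(opts.sampleRate ?? 0.01, 0), 1);
  return { frontTtlSec, sampleRate };
}
```

With this shape, a caller asking for a 300-second fronting TTL would silently get 55 seconds. Throwing an error instead would be an equally reasonable design choice.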

And ultimately my goal is for there to be no pricing difference of putting the Cache API in front, but we're still a ways away from realizing that vision. We're working on making KV faster than the cache API first.

My goal is to win the bet & get Sid (TL for pages) to shave his head bald if we hit certain performance milestones

(We have a grafana panel tracking that)

If this happens, Sid is obligated to come to our next meetup bald

Very good point. Skye is correct that performance is not the #1 priority for BetterKV, pricing is, but I do also definitely see your point. I’ll probably add your suggestions as a performance mode that people can enable

It's also a net cost saving. Would you be open to making it the default, with the user needing to turn it off? Even if you don't want to do the probabilistic piece, can you shorten the fronting cache's TTL so that it's under the cacheTTL you give KV? Otherwise, BetterKV is not really different than cache+R2.

I can't wait for this

Qq, what’s your username on Discord, so I can credit you for the changes?

vlovich

Oh that's my github username

beelzabub#0369 on Discord

Wait

No, I meant GH

I guess I’m just brain-tired from the heat…

But yeah, being more likely to refresh the value as you approach the expiry time is a significant cost-saving measure. Counter-intuitive but true. It's how KV has to operate under the hood for similar reasons. We technically use a bounded exponentially increasing algorithm: after 5s there's an infinitesimally small probability of a refresh, which doubles with every passing second up to 100% at something like 45s or 50s, at which point every read triggers a background refresh. That way only a small subset of requests end up triggering a refresh while the rest just always serve from the cache without any stampeding herd. We have other tricks we're layering in as well, but they're not available externally yet.
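
A rough sketch of a refresh probability with the shape described above: zero before 5 seconds, then doubling every second until it reaches 100%. This is not KV's internal implementation. The function names are hypothetical, and the 45-second saturation point is an assumption taken from the "something like 45s or 50s" in the message.

```typescript
// Probability that a read of a value cached `ageSec` seconds ago triggers
// a background refresh. Doubles every second and saturates at `fullSec`.
function refreshProbability(ageSec: number, startSec = 5, fullSec = 45): number {
  if (ageSec < startSec) return 0; // too fresh: never refresh
  // 2^(age - full) is 1 at fullSec and halves for each second before it.
  return Math.min(1, Math.pow(2, ageSec - fullSec));
}

// `rand` is injectable so the decision can be tested deterministically.
function shouldRefresh(ageSec: number, rand: number = Math.random()): boolean {
  return rand < refreshProbability(ageSec);
}
```

With these constants the probability at 5 seconds is 2^-40 (roughly 9e-13), at 44 seconds it is 50%, and from 45 seconds on every read refreshes.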

How old is this writeup? I did not even know that putting a cache in front of KV was a thing at some point, and since I started using it (~2 years ago), I always assumed KV is parallel to Cache. In other words, since KV is already at the edge, what's the point of a cache in front of a cache?

To reduce billing since KV cache hits are still billed but the cache api is free

Now that you have exposed the cache status of reads, this becomes a lot easier to implement - previously when I thought about a probabilistic update, using the time the read took as an indicator for hit/miss was rather messy, so thanks for implementing

(one small issue I noticed with the docs PR, the ToC seems to cut off at some point)

The latter

Hi.

Is there any problem with publishing a Cloudflare KV id and preview_id on GitHub?

I have defined id and preview_id in wrangler.toml like this. Is it OK to upload it as is, where anyone can see it? Or should I put them in .dev.vars?

Everything in wrangler.toml

@zegevlier Thank you.

Is it OK to write things like an API key provided by Cloudflare directly in wrangler.toml and make it public, not just the KV IDs?

I'm curious because GCP and AWS keep things like api_key secret.

No. API keys should never be made public. You should not be storing these in your wrangler.toml.

Thank you very much.

I wasn't sure how to tell which items should be secret, but I think your advice helped me understand a little better.

I checked the Cloudflare dashboard, and for API tokens it clearly says "Protect this key like a password!", so those should be kept secret.

I'll keep anything with this kind of warning secret, but if there is anything else I should be aware of, could you please let me know?

In general, IDs are safe to share; keys and secrets are not. (This might not always be true, but it's usually a good rule of thumb, and as far as I know it always holds for Cloudflare stuff.)

Thank you again and again for your kindness. I understand.

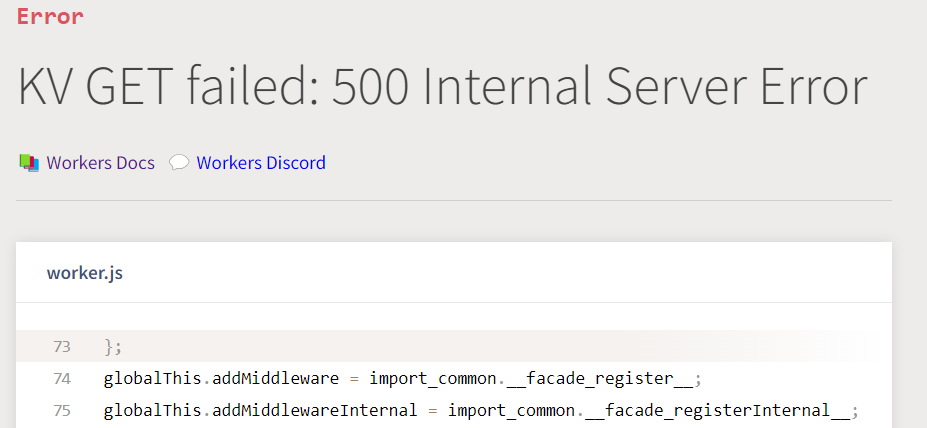

Guys, there is an issue in KV

it crashed my whole server almost 5 times today

Fixed now

Hi @bhadoo, can you share with me your affected account ID?