Oh, so an index is like a Hash Table, rather than a standard table… That makes sense.

`SELECT * FROM table LIMIT N`, `WHERE`, `HAVING`

`ORDER`, `LIMIT`, `LIMIT 10`

ORDER BY <some_date_column>

SQL LIKE

`rows_written`, `rows_read`

`rows_written`, `rows_read`, `total_rows_written` and `total_rows_read` in a "status" request?

back from pushing the limits over 500MB?

`.batch()`, `EXPLAIN` statement. The column name is messed up?

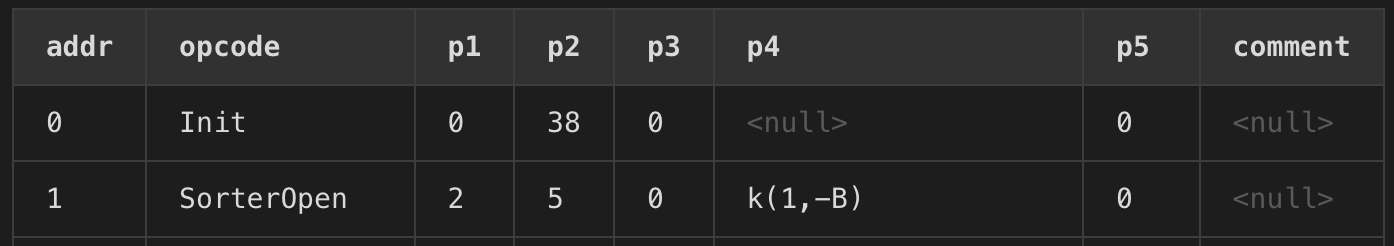

When the EXPLAIN keyword appears by itself it causes the statement to behave as a query that returns the sequence of virtual machine instructions it would have used to execute the command had the EXPLAIN keyword not been present. When the EXPLAIN QUERY PLAN phrase appears, the statement returns high-level information regarding the query plan that would have been used.
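To make the difference between the two forms concrete, here is a minimal sketch using Python's built-in `sqlite3` module against a local in-memory database. It is not D1 itself: the `orders` table, its columns, and the index name `idx_orders_ship_region` are made up for illustration, and the exact wording of the plan text can vary between SQLite versions.

```python
import sqlite3


def query_plan(conn, sql):
    """Return the human-readable 'detail' column of EXPLAIN QUERY PLAN."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql).fetchall()
    # Each row has four columns; the last one is the plan description.
    return [row[3] for row in rows]


# Hypothetical schema, local SQLite only (an assumption, not a D1 database).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, ship_region TEXT, order_date TEXT)"
)

sql = "SELECT * FROM orders WHERE ship_region = 'Western Europe'"

# Without an index the planner has to walk the whole table.
plan_without_index = query_plan(conn, sql)

# With an index on the filtered column it can seek to the matching rows.
conn.execute("CREATE INDEX idx_orders_ship_region ON orders (ship_region)")
plan_with_index = query_plan(conn, sql)

# Bare EXPLAIN returns the low-level virtual machine instructions instead.
bytecode = conn.execute("EXPLAIN " + sql).fetchall()

print(plan_without_index)  # a SCAN over the table
print(plan_with_index)     # a SEARCH using the index
print(len(bytecode), "VM instructions")
```

The plan text is usually the more useful of the two when checking whether a query will use an index; the bytecode listing is mostly of interest when debugging the engine itself.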

Many of these limits will increase during D1’s public alpha. Join the #d1-database channel in the Cloudflare Developer Discord to keep up to date with changes.

From the Docs

➜ wrangler d1 execute db-wnam --command "SELECT * FROM [Order] WHERE ShipRegion LIKE '%Western%' LIMIT 10" --json | jq '.[].meta.rows_read'

18 <-- we had to scan 18 rows to find 10 that matched our filter

`total_rows_written`, `total_rows_read`, `EXPLAIN`
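Why the rows read can be higher than the rows returned is easier to see with a toy model. The sketch below is plain Python, not D1 or SQLite code: `scan_with_limit` and the sample data are hypothetical, and it only models the simple case of a filter plus `LIMIT` with no `ORDER BY` and no usable index.

```python
def scan_with_limit(rows, predicate, limit):
    """Walk rows in storage order, stopping once `limit` matches are found.

    Returns (matches, rows_read). Every row inspected counts as read,
    whether or not it passes the filter.
    """
    matches = []
    rows_read = 0
    for row in rows:
        if len(matches) >= limit:
            break  # LIMIT satisfied, no need to read further
        rows_read += 1
        if predicate(row):
            matches.append(row)
    return matches, rows_read


# Hypothetical data: every third row is in a different region.
rows = [
    {"id": i, "ship_region": "North America" if i % 3 == 2 else "Western Europe"}
    for i in range(30)
]

# Rough equivalent of: WHERE ship_region LIKE '%Western%' LIMIT 10
matches, rows_read = scan_with_limit(
    rows, lambda r: "Western" in r["ship_region"], limit=10
)

print(len(matches), "rows returned,", rows_read, "rows read")
```

In this model the scan stops as soon as the tenth match is found, so the rows read depend on how densely the matches are spread through the table. Adding an `ORDER BY` on an unindexed column would generally force every row to be read before the limit can be applied.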