You could probably jury-rig an inefficient replication system with the Cache API.


Does `wrangler pages dev` work with a local D1 started up with something like `pnpx wrangler -c wrangler.toml d1 execute DB --local --file=migrations/001.sql`? I just want to make sure this is a supported use case for D1 at the moment, while it is in beta. Note: I already have a working D1 setup with Pages on Cloudflare; I'm interested in running it locally. So my question is simply whether this is already supported.

I ran `pnpx wrangler d1 execute db_name --local --file=./src/dump/dump.sql`, but it has been stuck on the same message for the past hour:

```
Mapping SQL input into an array of statements
```

```
Mapping SQL input into an array of statements
Request entity is too large [code: 7011]
```
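When a large dump hits the request-size error, one workaround to consider is splitting the file into smaller pieces and executing them one at a time. The TypeScript below is a minimal sketch of hypothetical helpers (not part of wrangler): `splitSqlStatements` naively splits on semicolons that fall outside single- or double-quoted literals, and `chunkStatements` groups statements into batches whose UTF-8 size stays under a `maxBytes` value you pick. It assumes the dump contains no `--` or `/* */` comments with semicolons and no `BEGIN … END` trigger bodies; the actual D1 size limit is not stated here, so check the current docs before choosing `maxBytes`.

```typescript
// Hypothetical helpers for breaking a large SQL dump into smaller batches.
// Assumptions: statements end with ';', string literals use '...' or "...",
// and a doubled quote ('') escapes a quote inside a literal.
// Comments and trigger bodies (BEGIN ... END;) are NOT handled.

function splitSqlStatements(sql: string): string[] {
  const statements: string[] = [];
  let current = "";
  let quote: string | null = null; // quote char of the literal we are inside, if any

  for (const ch of sql) {
    current += ch;
    if (quote !== null) {
      // Closing quote; a doubled quote ('') closes and immediately reopens.
      if (ch === quote) quote = null;
    } else if (ch === "'" || ch === '"') {
      quote = ch;
    } else if (ch === ";") {
      const trimmed = current.trim();
      if (trimmed !== ";") statements.push(trimmed); // skip empty statements
      current = "";
    }
  }
  const rest = current.trim();
  if (rest.length > 0) statements.push(rest); // trailing statement without ';'
  return statements;
}

// Count UTF-8 bytes without relying on TextEncoder.
function utf8Length(s: string): number {
  let n = 0;
  for (const ch of s) {
    const cp = ch.codePointAt(0)!;
    n += cp < 0x80 ? 1 : cp < 0x800 ? 2 : cp < 0x10000 ? 3 : 4;
  }
  return n;
}

// Group statements so each batch (joined with newlines) stays under maxBytes.
// A single statement larger than maxBytes still ends up alone in its own batch.
function chunkStatements(statements: string[], maxBytes: number): string[][] {
  const batches: string[][] = [];
  let batch: string[] = [];
  let size = 0;
  for (const stmt of statements) {
    const bytes = utf8Length(stmt) + 1; // +1 for the joining newline
    if (batch.length > 0 && size + bytes > maxBytes) {
      batches.push(batch);
      batch = [];
      size = 0;
    }
    batch.push(stmt);
    size += bytes;
  }
  if (batch.length > 0) batches.push(batch);
  return batches;
}
```

Each batch could then be written to its own `.sql` file and passed to `wrangler d1 execute <DB> --file=...` in turn. Splitting on a hand-rolled scanner keeps the sketch dependency-free, but a real SQL tokenizer would be safer for dumps with comments or triggers.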

```sh
CLOUDFLARE_ACCOUNT_ID=1234 CLOUDFLARE_API_TOKEN=4321 wrangler whoami

CLOUDFLARE_ACCOUNT_ID=1234 CLOUDFLARE_API_TOKEN=4321 wrangler d1 execute test-database --command="SELECT * FROM Customers"
```

Both commands pass credentials through the `CLOUDFLARE_ACCOUNT_ID` and `CLOUDFLARE_API_TOKEN` environment variables.
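The same two credentials can, as far as I understand the Cloudflare API, also be used to query D1 over HTTP without wrangler. The sketch below only builds the request; it assumes the endpoint is `POST /client/v4/accounts/{account_id}/d1/database/{database_id}/query` with a JSON body of `sql` and `params` and a Bearer token, so verify this against the current API reference. `buildD1QueryRequest` is a hypothetical helper, and `databaseId` is assumed to be the database UUID (the `database_id` in `wrangler.toml`).

```typescript
// Hypothetical helper: describe an HTTP request to what we assume is the
// D1 REST "query" endpoint. Nothing is sent here.
// accountId -> CLOUDFLARE_ACCOUNT_ID, apiToken -> CLOUDFLARE_API_TOKEN,
// databaseId -> the database UUID (assumed: `database_id` from wrangler.toml).
type D1QueryRequest = {
  url: string;
  init: { method: "POST"; headers: Record<string, string>; body: string };
};

function buildD1QueryRequest(
  accountId: string,
  apiToken: string,
  databaseId: string,
  sql: string,
  params: unknown[] = [],
): D1QueryRequest {
  const base = "https://api.cloudflare.com/client/v4";
  return {
    url: `${base}/accounts/${encodeURIComponent(accountId)}/d1/database/${encodeURIComponent(databaseId)}/query`,
    init: {
      method: "POST",
      headers: {
        Authorization: `Bearer ${apiToken}`,
        "Content-Type": "application/json",
      },
      // Bound values travel separately from the SQL text.
      body: JSON.stringify({ sql, params }),
    },
  };
}
```

The returned `url` and `init` can be handed directly to `fetch` in any runtime that provides it (for example Node 18+ or a Worker). Keeping the request construction separate from the network call makes it easy to inspect or log before sending.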

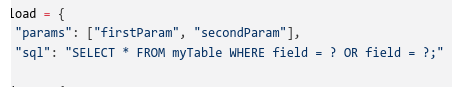

`wrangler d1 info <DBNAME>`

```sql
INSERT INTO test (id) VALUES (1), (2), (3), (4), (5)
```

```json
{
  "params": [1, 2, 3, 4, 5],
  "sql": "INSERT INTO test (id) VALUES (?), (?), (?), (?), (?)"
}
```
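The parameterized form above can be generated from a list of values rather than written by hand. The TypeScript below is a minimal sketch with a hypothetical `buildMultiRowInsert` helper for a single column: it emits one `(?)` placeholder per value and copies the values into `params`, producing exactly the `sql`/`params` pair shown. Table and column names are interpolated directly because identifiers cannot be bound as parameters, so only pass trusted names.

```typescript
// Hypothetical helper: build a single-column, multi-row INSERT with
// one `?` placeholder per value and a matching params array.
type Query = { sql: string; params: unknown[] };

function buildMultiRowInsert(table: string, column: string, values: unknown[]): Query {
  if (values.length === 0) {
    throw new Error("buildMultiRowInsert needs at least one value");
  }
  // Identifiers are interpolated (not bound), so they must come from trusted code.
  const placeholders = values.map(() => "(?)").join(", ");
  return {
    sql: `INSERT INTO ${table} (${column}) VALUES ${placeholders}`,
    params: values.slice(), // copy so later mutation of `values` does not leak in
  };
}
```

For very large inserts, SQLite (and possibly D1 on top of it) caps the number of bound parameters per statement, so splitting the values into several smaller inserts may be necessary; check the current D1 limits rather than assuming a number.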