
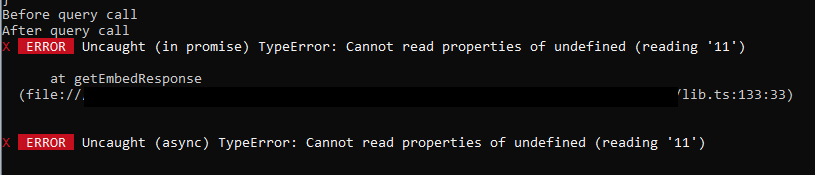

I have 1536-dimension OpenAI embeddings and I can't insert all 284 of them at once, but it works if I insert only the first 100 vectors or fewer. 101 doesn't work.

I looked up the limitations and didn't see anything relevant. Is this a bug?

Update: I implemented chunking and now all the requests fail (each has up to 90 vectors, so there are 4 of them). If I retry a few times, several of the 4 requests sometimes succeed, but never all of them, and of course that isn't reliable.
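For reference, the chunking step amounts to something like the TypeScript sketch below. `chunk` is a hypothetical helper, not part of any SDK, and the string IDs stand in for the real embedding records. With 284 records and a batch size of 90 it yields four batches, the last of which is much smaller than the others.

```typescript
// Split an array into consecutive batches of at most `size` items.
// The last batch holds whatever is left over.
function chunk<T>(items: readonly T[], size: number): T[][] {
  if (!Number.isInteger(size) || size < 1) {
    throw new RangeError(`batch size must be a positive integer, got ${size}`);
  }
  const batches: T[][] = [];
  for (let i = 0; i < items.length; i += size) {
    batches.push(items.slice(i, i + size));
  }
  return batches;
}

// 284 placeholder IDs standing in for the real embedding records.
const ids = Array.from({ length: 284 }, (_, i) => `doc-${i}`);
const batches = chunk(ids, 90);
console.log(batches.map((b) => b.length)); // [ 90, 90, 90, 14 ]
```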

Update 2: Still trying to triangulate the issue. As a test, I sliced each vector down to its first 3 dimensions, and then I can insert all of them at once, or in 4 batches, without a problem. Weird.
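One way to see what the 3-dimension test actually changes is to measure the serialized request body. This is a sketch under assumptions: the `{ id, values }` record shape is hypothetical, the values are deterministic fakes rather than real embeddings, and nothing here calls a real API or confirms which limit, if any, the service enforces. It only shows that the sliced payload is well over 100 times smaller, so a size-based limit would be consistent with these observations.

```typescript
// Hypothetical record shape; the real insert API may use other field names.
interface VectorRecord {
  id: string;
  values: number[];
}

// Deterministic stand-in for real embeddings, with components in [-0.05, 0.05].
function fakeEmbedding(seed: number, dims: number): number[] {
  return Array.from({ length: dims }, (_, d) => Math.sin(seed * 7919 + d) * 0.05);
}

// Size of the JSON body for a batch. IDs and numbers are ASCII here,
// so the string length equals the UTF-8 byte count.
function payloadBytes(records: VectorRecord[]): number {
  return JSON.stringify(records).length;
}

const full: VectorRecord[] = Array.from({ length: 284 }, (_, i) => ({
  id: `doc-${i}`,
  values: fakeEmbedding(i, 1536),
}));

// The Update 2 experiment: keep only the first 3 dimensions of each vector.
const sliced: VectorRecord[] = full.map((r) => ({ ...r, values: r.values.slice(0, 3) }));

console.log("284 records, 1536 dims:", payloadBytes(full), "bytes");
console.log("284 records, 3 dims:   ", payloadBytes(sliced), "bytes");
console.log("100 records, 1536 dims:", payloadBytes(full.slice(0, 100)), "bytes");
console.log("101 records, 1536 dims:", payloadBytes(full.slice(0, 101)), "bytes");
```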

Update 3: Batching with maxSize 10 (29 batches) worked: all 29 insert requests succeeded. Could there be a per-batch limit on metadata or on the index name?
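If the 10-record batches succeed because they are small in bytes rather than in count, a batcher that caps both might be sturdier than a fixed count. This is a sketch, not a known fix: the record shape (including the `metadata` field), `batchBySize`, `jsonBytes`, and the `maxCount`/`maxBytes` values are all hypothetical placeholders, not documented limits of any service.

```typescript
// Hypothetical record shape; adjust field names to the real insert API.
interface VectorRecord {
  id: string;
  values: number[];
  metadata?: Record<string, string | number | boolean>;
}

const encoder = new TextEncoder();

// UTF-8 byte length of a value's JSON serialization (metadata may be non-ASCII).
function jsonBytes(value: unknown): number {
  return encoder.encode(JSON.stringify(value)).length;
}

// Greedily pack records, in order, into batches that hold at most `maxCount`
// records and whose JSON array serialization is at most `maxBytes` bytes.
// A single record larger than `maxBytes` is placed in a batch of its own.
function batchBySize(
  records: readonly VectorRecord[],
  maxCount: number,
  maxBytes: number,
): VectorRecord[][] {
  const batches: VectorRecord[][] = [];
  let current: VectorRecord[] = [];
  let currentBytes = 2; // the enclosing "[" and "]"

  for (const record of records) {
    const size = jsonBytes(record);
    // A comma separates this record from the previous one in the same batch.
    const added = current.length === 0 ? size : size + 1;
    if (current.length > 0 && (current.length >= maxCount || currentBytes + added > maxBytes)) {
      batches.push(current);
      current = [];
      currentBytes = 2;
    }
    currentBytes += current.length === 0 ? size : size + 1;
    current.push(record);
  }
  if (current.length > 0) batches.push(current);
  return batches;
}

// Usage with fake 1536-dimension records; the limits below are placeholders.
const records: VectorRecord[] = Array.from({ length: 284 }, (_, i) => ({
  id: `doc-${i}`,
  values: Array.from({ length: 1536 }, (_, d) => Math.sin(i * 7919 + d) * 0.05),
  metadata: { source: `file-${i}.md` },
}));
const batches = batchBySize(records, 100, 1_000_000);
console.log(batches.map((b) => b.length));
```

Because the byte budget includes each record's metadata, a per-batch metadata size limit, if one exists, would be covered by the same cap. A record that alone exceeds `maxBytes` still goes out by itself, since it cannot be split.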
