
When the public release is ready, is there an expectation of an increase in throughput over the 400 messages per second per queue that has applied during the beta?

`max_batch_size` is set to `1`.

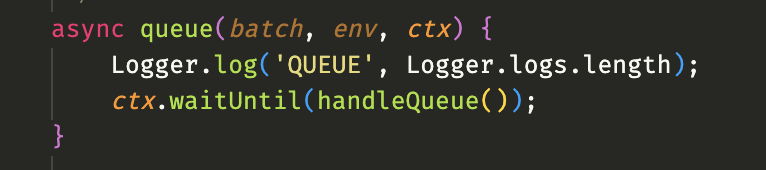

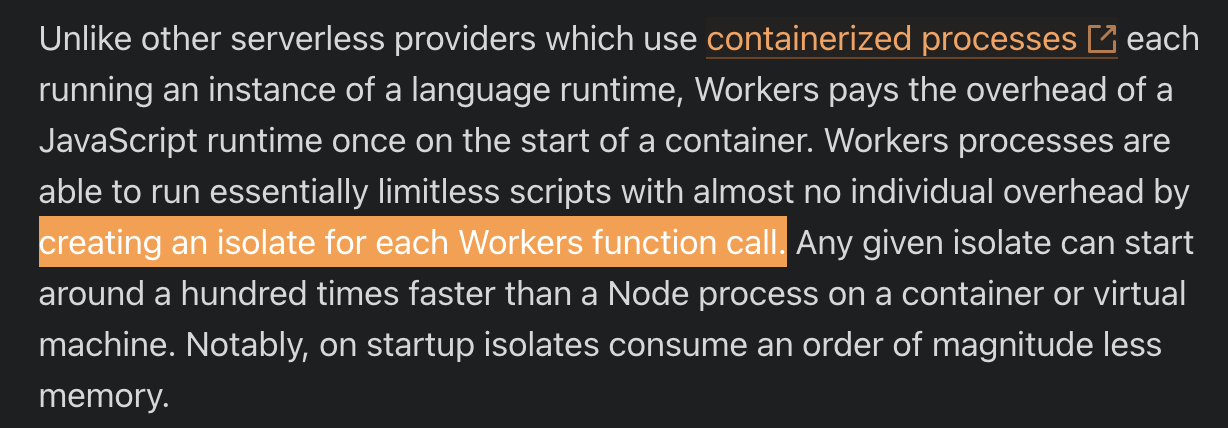

The `queue` function starts by logging the number of messages logged so far. The `Logger` is a simple ES6 module I wrote myself. If `queue` calls were isolated (by creating a V8 isolate for each call), the logged number of messages should always be zero. It appears instead that `queue` calls are being made within the same running environment.
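A likely explanation is that module-level state lives as long as the isolate that loaded the module, and the Workers runtime may reuse one isolate for many invocations. The sketch below simulates that with a module-scoped counter standing in for the `Logger`; its real implementation is not shown, so `loggedCount`, `log` and `handleQueueCall` are hypothetical names. Reuse is not guaranteed either way, so code should not rely on such state either persisting or being reset.

```typescript
// Hypothetical stand-in for the ES6 Logger module.
// Module-scoped variables are initialized once, when the module is first
// evaluated, and are then shared by every call running in the same isolate.
let loggedCount = 0;

export function log(message: string): void {
  loggedCount += 1;
  console.log(`[${loggedCount}] ${message}`);
}

export function getLoggedCount(): number {
  return loggedCount;
}

// Hypothetical handler body: reports how many messages were logged
// *before* this call, then logs one line per received message.
export function handleQueueCall(messages: string[]): number {
  const before = getLoggedCount();
  console.log(`messages logged so far: ${before}`);
  for (const m of messages) {
    log(m);
  }
  return before;
}

// Two calls against the same module instance (i.e. the same isolate):
// the second call sees the count left behind by the first.
const first = handleQueueCall(["a"]);
const second = handleQueueCall(["b"]);
console.log(first, second); // 0 1
```

If each call ran in a fresh isolate, `loggedCount` would start at `0` every time; a non-zero count is therefore a sign of isolate reuse, not of a bug in the `Logger`.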

What about a Worker that has both a `fetch()` and a `queue()` handler? The `fetch` handler serves a simple web page. I'm having a little trouble understanding what happens when the queue forces the Worker to scale. Are there any potential problems there?

The default content type for messages published to a queue is now `json`, from the existing `v8` default. This will improve compatibility with pull-based consumers when we release them.

2024-03-04
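In practice, `json` bodies have to survive a JSON round trip, whereas `v8` uses V8's structured-clone serialization, which also handles values such as `Map` and `Date`. Assuming the `json` content type behaves like `JSON.stringify`/`JSON.parse` (an assumption; the Queues documentation has the exact rules), this sketch shows which message bodies would lose information. `jsonRoundTrip` is a hypothetical helper.

```typescript
// Hypothetical helper: approximates what a JSON-based message encoding
// would hand to a consumer, assuming JSON.stringify/JSON.parse semantics.
function jsonRoundTrip<T>(body: T): unknown {
  return JSON.parse(JSON.stringify(body));
}

// Plain objects, arrays, strings, numbers and booleans survive unchanged.
const plain = { task_id: "t1", subtask_data: [1, 2, 3] };
const plainOut = jsonRoundTrip(plain);

// A Map has no enumerable own properties, so JSON encodes it as {}.
const withMap = { lookup: new Map([["k", 1]]) };
const mapOut = jsonRoundTrip(withMap) as { lookup: unknown };

// A Date is serialized via toJSON() and comes back as an ISO-8601 string.
const withDate = { at: new Date(0) };
const dateOut = jsonRoundTrip(withDate) as { at: unknown };

console.log(JSON.stringify(plainOut)); // {"task_id":"t1","subtask_data":[1,2,3]}
console.log(mapOut.lookup);            // {}
console.log(dateOut.at);               // 1970-01-01T00:00:00.000Z
```

Producers that currently send `Map`, `Date` or similar values would need to convert them to plain JSON-compatible data, or keep explicitly setting the `v8` content type.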

If each message has the shape `{ task_id, subtask_data }`, and all messages I pass to a single `sendBatch` call share the same `task_id`, can I rely on every batch the consumer receives containing only messages with that same `task_id`? Or will retries or max batch sizes mess with this?

`Invalid queue name: invalid queue name: STATE-CHANGES. Must match ^[a-z0-9]([a-z0-9-]{0,61}[a-z0-9])?$ [code: 11003]`
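On the `task_id` question: whatever the delivery guarantees turn out to be, a consumer that does not assume batches are homogeneous stays correct even if retries or `max_batch_size` split or mix the groups sent with `sendBatch`. A minimal sketch that groups one delivered batch by `task_id`; the `Message` interface here is a simplified, hypothetical stand-in for the runtime's message type, with only the fields this example uses.

```typescript
// Simplified, hypothetical message shapes: only the fields this sketch uses.
interface TaskBody {
  task_id: string;
  subtask_data: unknown;
}

interface Message<T> {
  id: string;
  body: T;
}

// Group one delivered batch by task_id instead of assuming the whole batch
// shares a single task_id. Order within each group follows delivery order.
function groupByTaskId(messages: Message<TaskBody>[]): Map<string, Message<TaskBody>[]> {
  const groups = new Map<string, Message<TaskBody>[]>();
  for (const msg of messages) {
    const key = msg.body.task_id;
    const group = groups.get(key);
    if (group) {
      group.push(msg);
    } else {
      groups.set(key, [msg]);
    }
  }
  return groups;
}

// Example: a batch in which a message for task "a" arrives
// alongside messages for task "b".
const batch: Message<TaskBody>[] = [
  { id: "1", body: { task_id: "b", subtask_data: 1 } },
  { id: "2", body: { task_id: "a", subtask_data: 2 } },
  { id: "3", body: { task_id: "b", subtask_data: 3 } },
];

const grouped = groupByTaskId(batch);
grouped.forEach((msgs, taskId) => {
  console.log(taskId, msgs.map((m) => m.id).join(","));
});
// b 1,3
// a 2
```

With `max_batch_size` set to `1` each batch holds a single message anyway, so grouping only matters once larger batches are enabled.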
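The error message spells out the rule: a queue name must match `^[a-z0-9]([a-z0-9-]{0,61}[a-z0-9])?$`, i.e. 1 to 63 lowercase letters, digits or hyphens, not starting or ending with a hyphen. `STATE-CHANGES` fails only because it is uppercase. A small pre-check using that exact pattern; `isValidQueueName` is a hypothetical helper, not part of any Cloudflare API.

```typescript
// Pattern copied from the error message: 1-63 characters of lowercase
// letters, digits and hyphens, with no leading or trailing hyphen.
const QUEUE_NAME_PATTERN = /^[a-z0-9]([a-z0-9-]{0,61}[a-z0-9])?$/;

// Hypothetical helper for checking a name before creating the queue.
function isValidQueueName(name: string): boolean {
  return QUEUE_NAME_PATTERN.test(name);
}

console.log(isValidQueueName("STATE-CHANGES"));               // false
console.log(isValidQueueName("STATE-CHANGES".toLowerCase())); // true
console.log(isValidQueueName("-state-changes"));              // false
```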