@Mana👽 can you DM me the matching .tilt file?

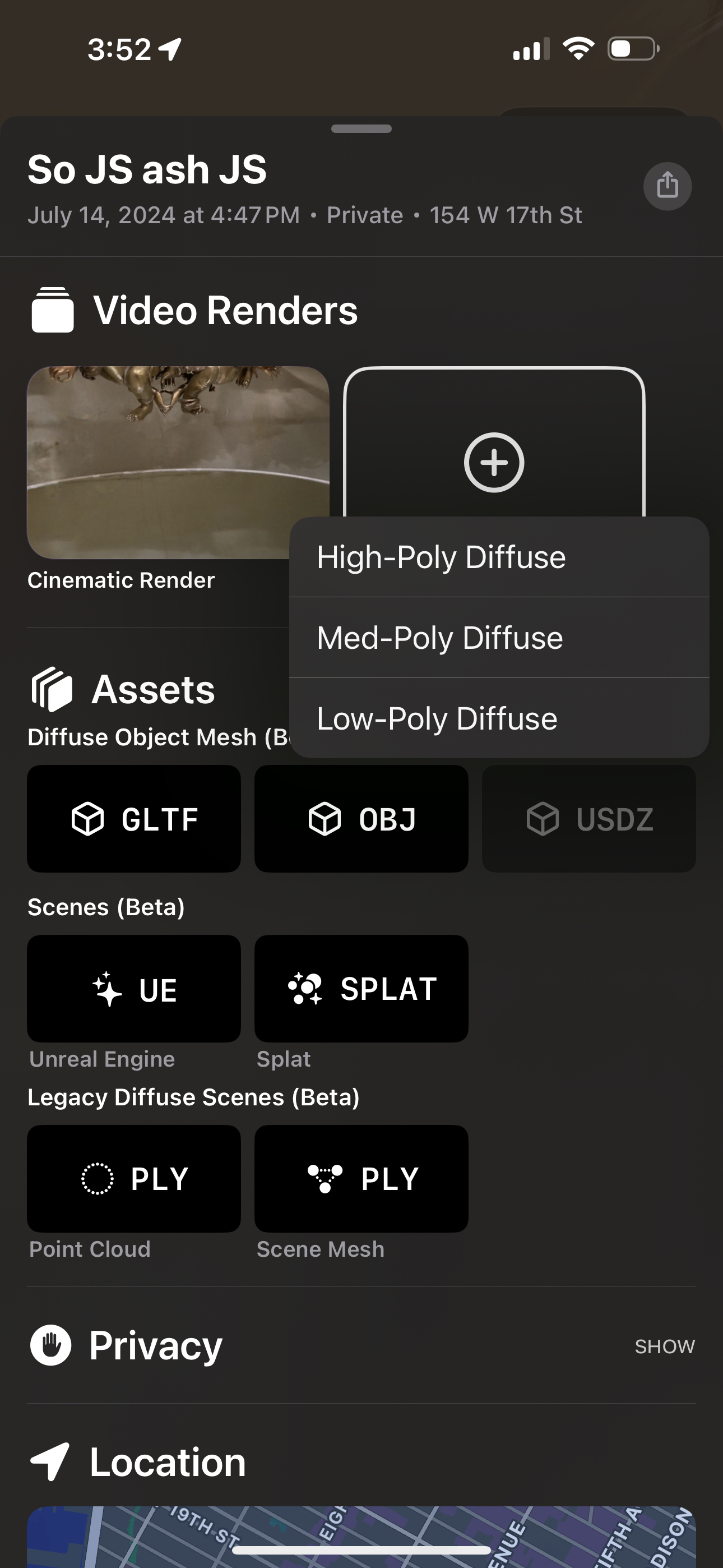

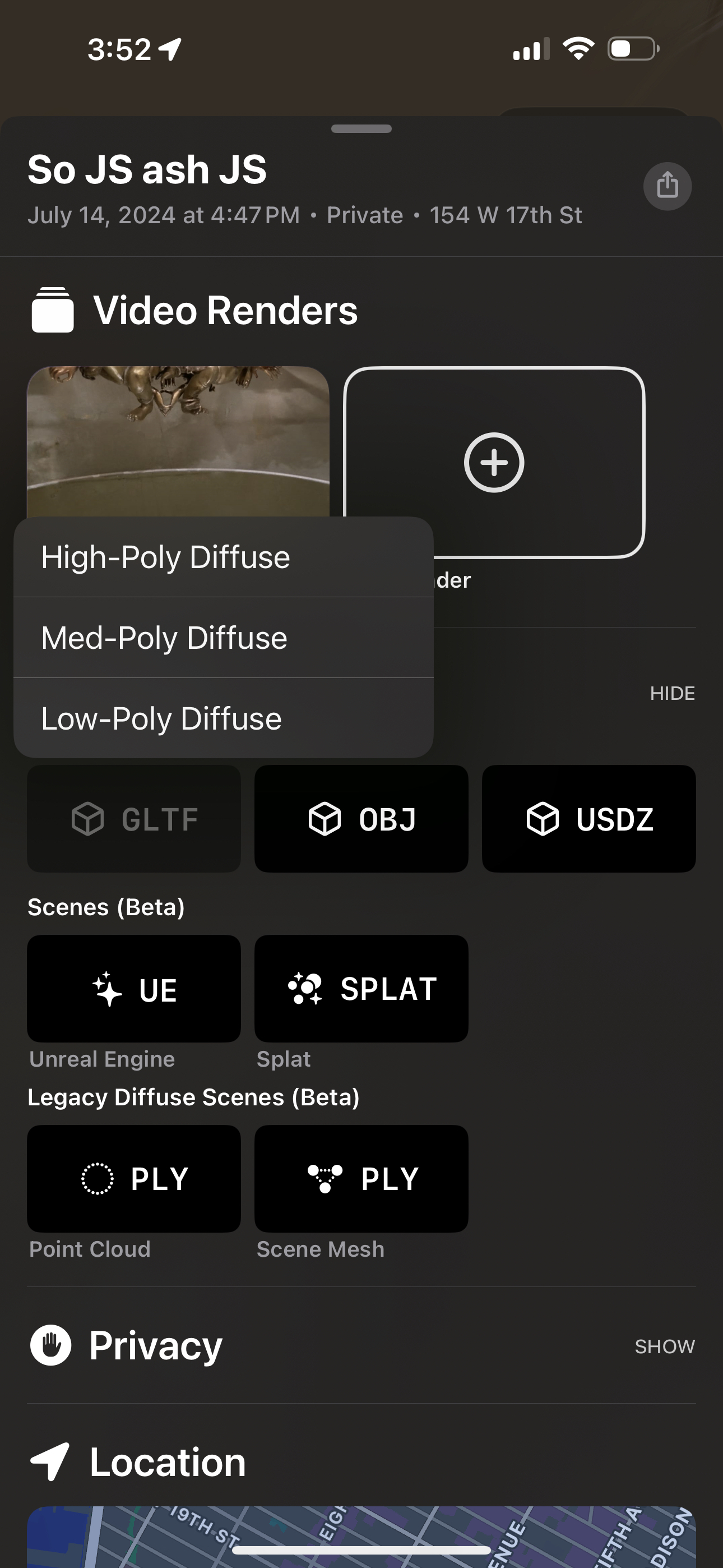

, and configure them to use the `<model-viewer>` 3D & AR web component

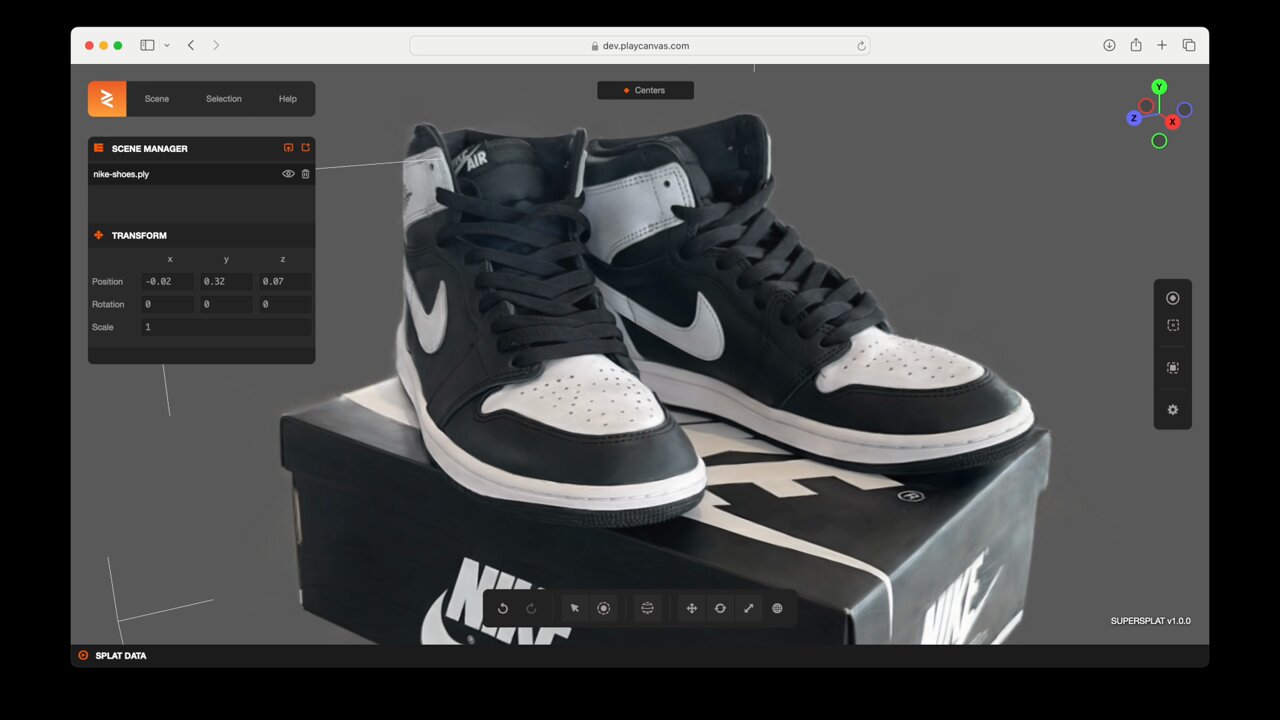

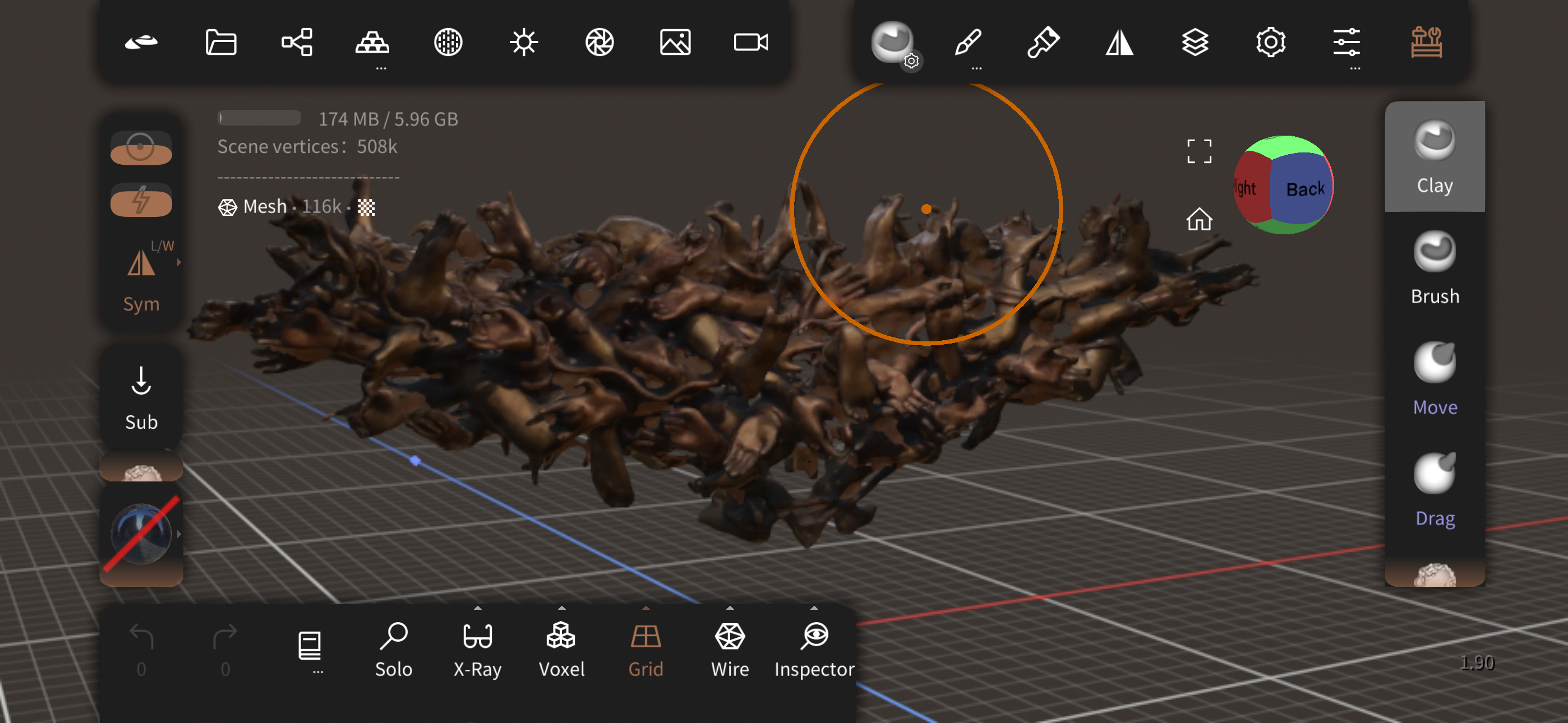

SuperSplat 1.0

8/8/24, 11:19 AM

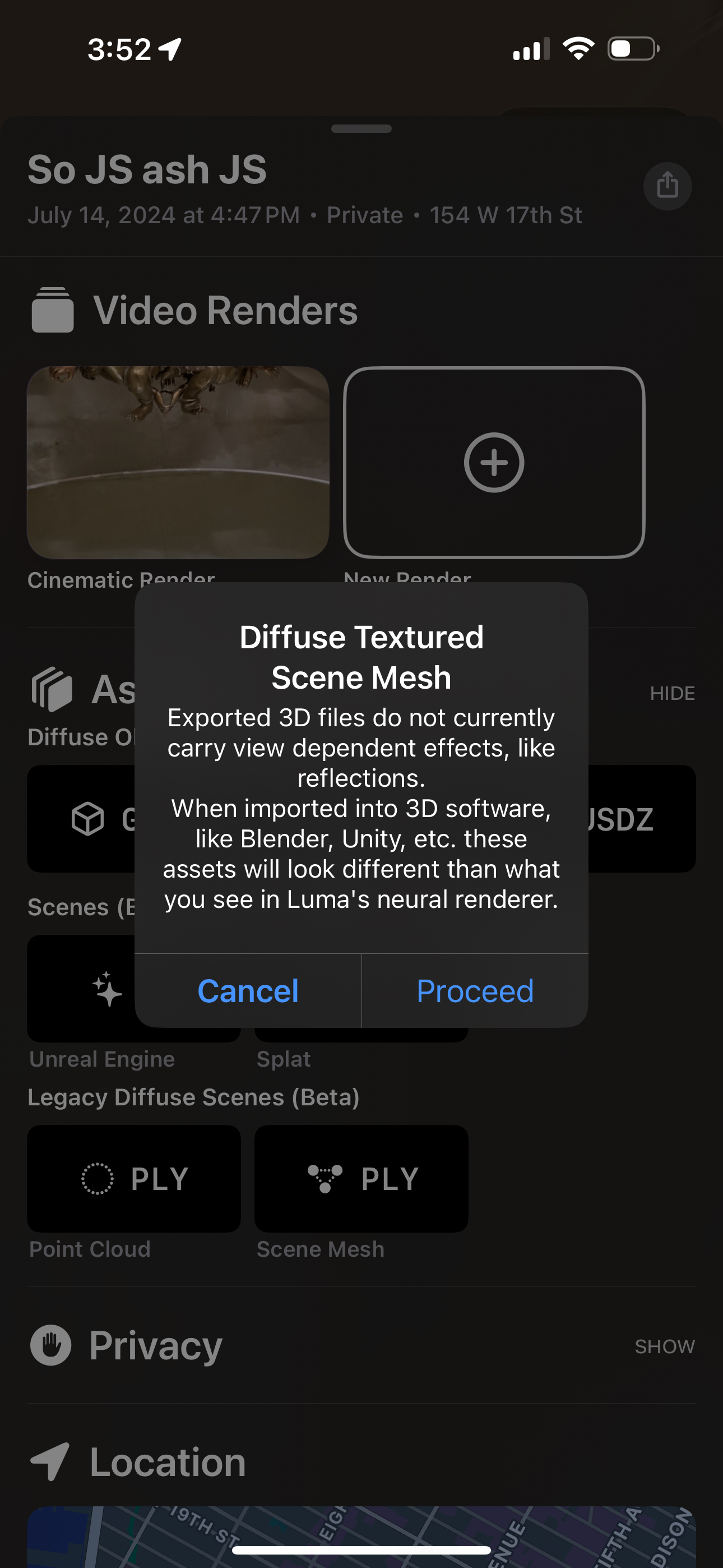

.tilt