Welcome to the official Cloudflare Developers server. Here you can ask for help and stay updated with the latest news.

I thought it was related

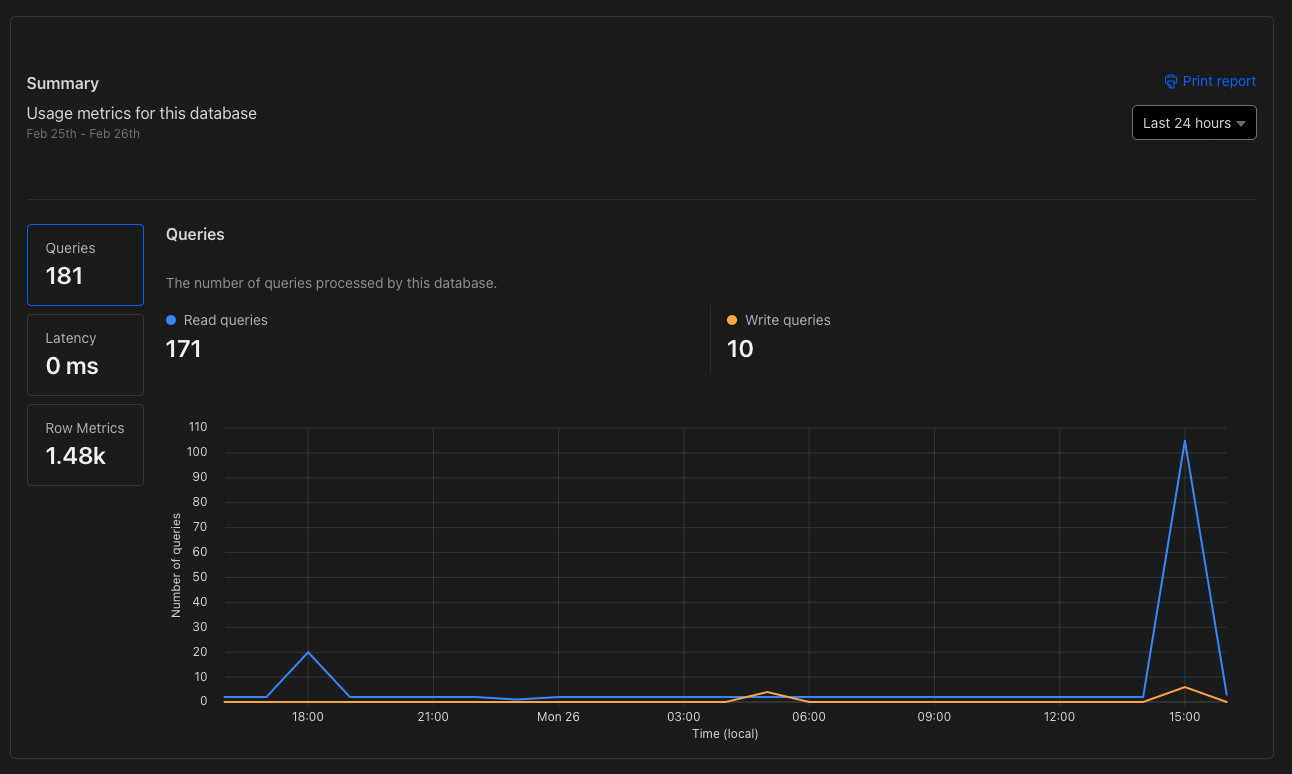

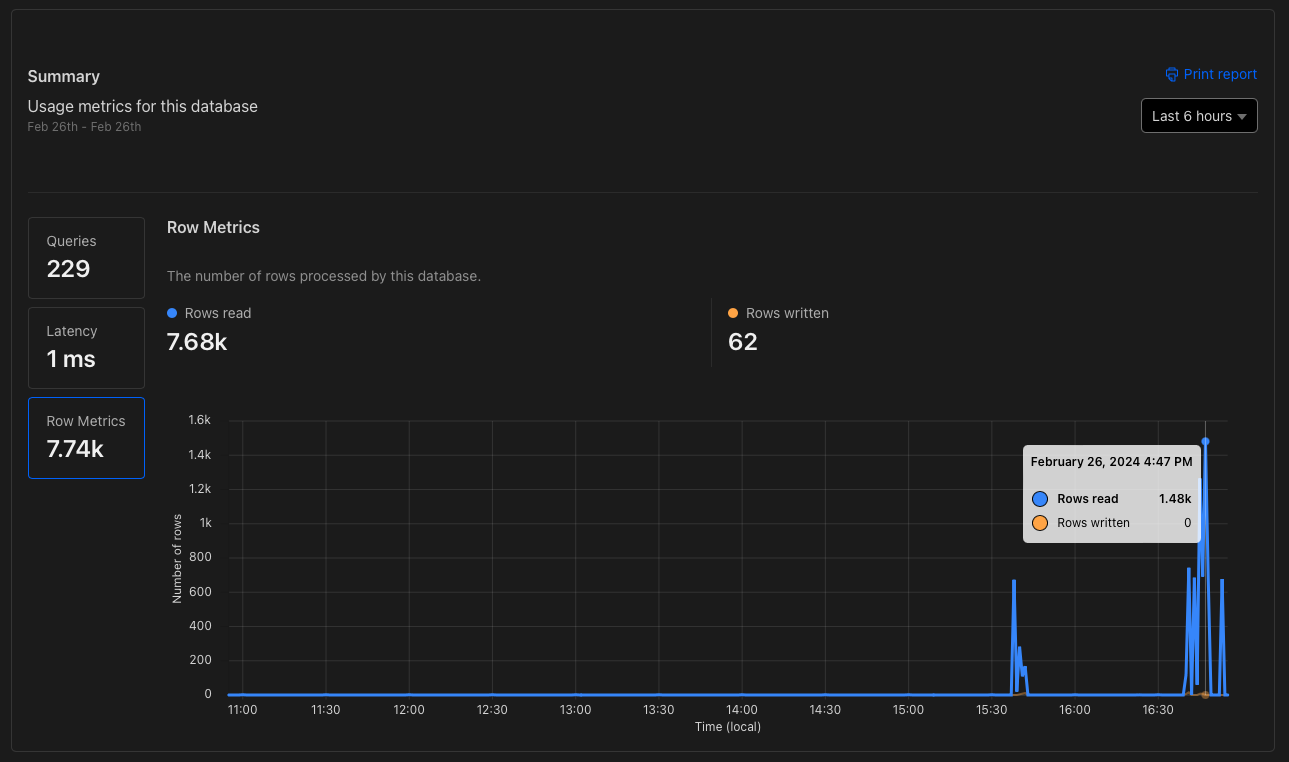

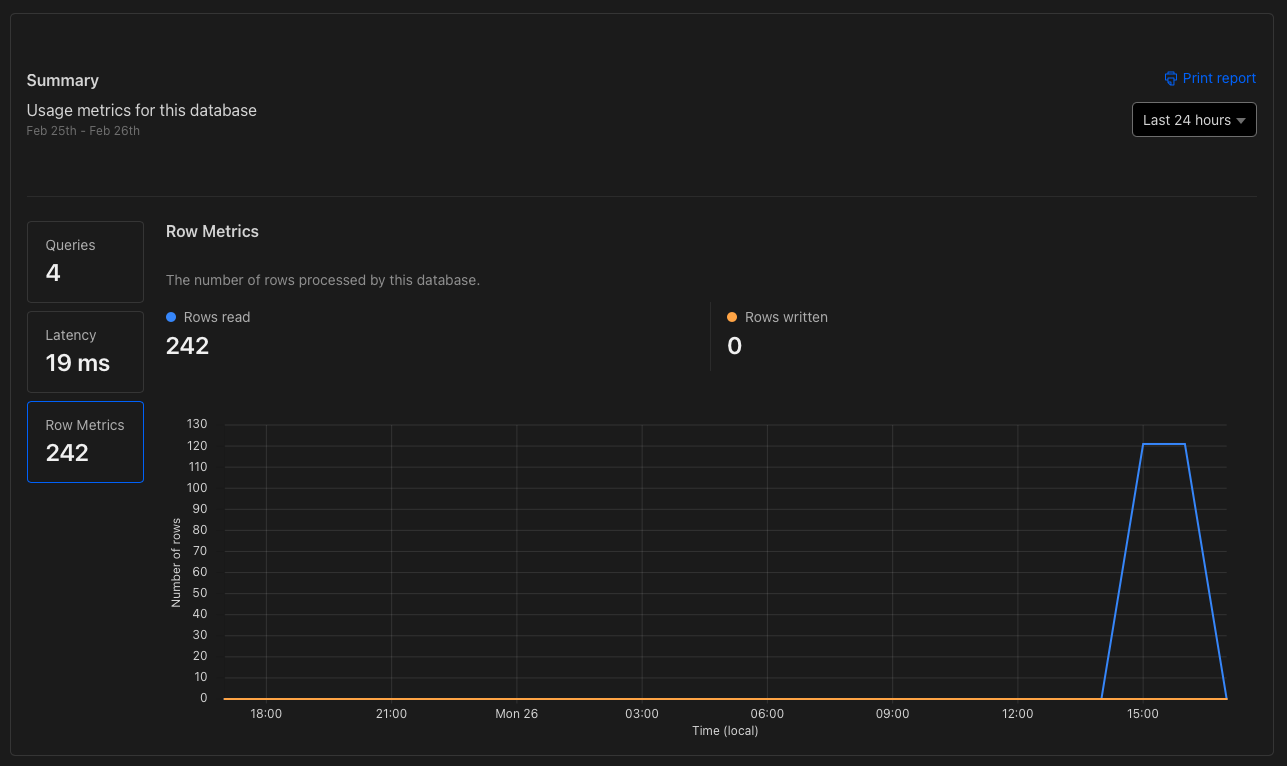

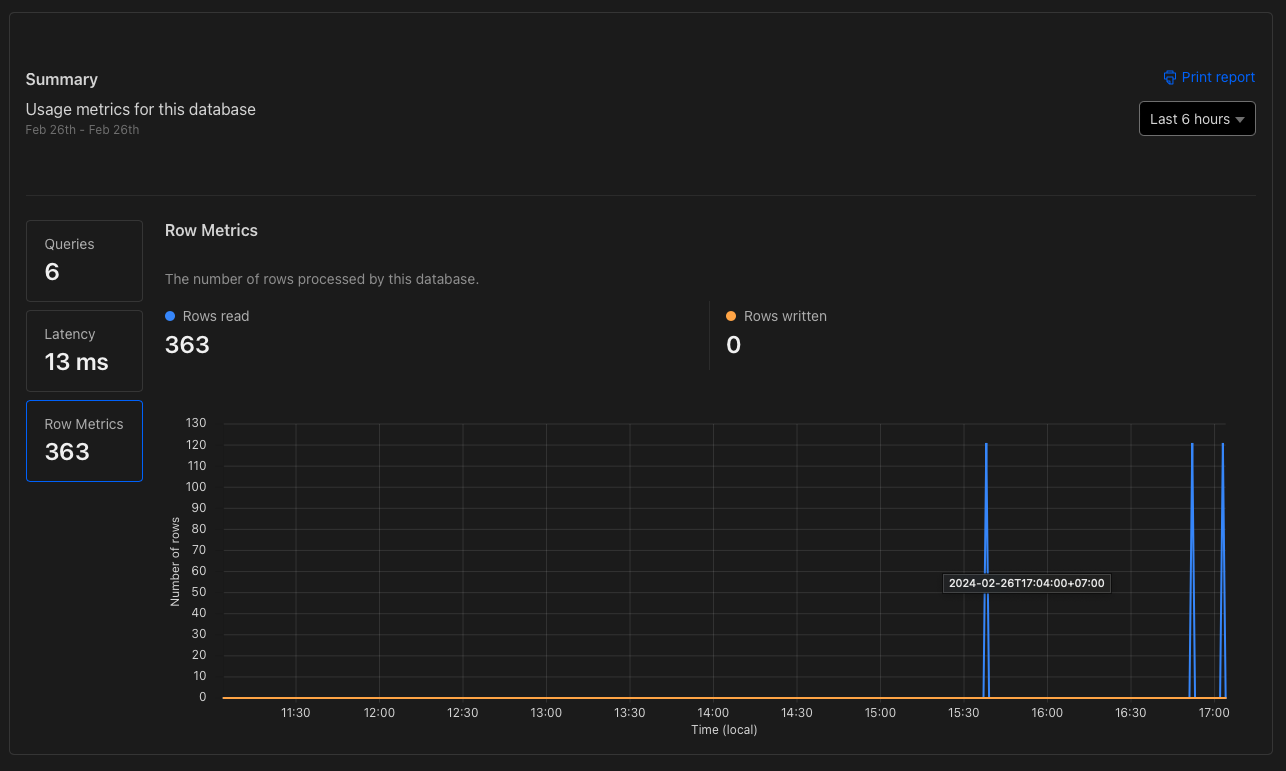

Use `wrangler d1 insights` to see what the high rows_read queries are.

`select * from (select count(*) from table...);`
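For anyone wondering why a count(*) query like the one above might show up with high rows_read: D1 is built on SQLite, and a quick local check with EXPLAIN QUERY PLAN suggests the count is answered by scanning the table (or an index). This is only a rough sketch under assumptions: it uses Python's built-in `sqlite3` module as a local stand-in, the `items` table and the `explain_count` helper are hypothetical names, and D1's actual rows_read accounting may differ from what local SQLite does.

```python
import sqlite3


def explain_count(n_rows: int = 1000) -> list[str]:
    """Return the query-plan steps SQLite reports for a wrapped count(*) query.

    Assumption: local in-memory SQLite stands in for D1; `items` is a
    hypothetical table used only for illustration.
    """
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE items (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany(
        "INSERT INTO items (name) VALUES (?)",
        ((f"item-{i}",) for i in range(n_rows)),
    )
    # Same shape as the query in the thread: a count(*) wrapped in a subquery.
    rows = conn.execute(
        "EXPLAIN QUERY PLAN SELECT * FROM (SELECT count(*) FROM items)"
    ).fetchall()
    conn.close()
    # Each plan row is (id, parent, notused, detail); keep the detail text.
    return [row[3] for row in rows]


if __name__ == "__main__":
    for step in explain_count():
        print(step)
```

If the plan contains a SCAN step on the table, the count is walking the table or an index rather than reading a stored total, which would be consistent with the row reads growing with table size. The exact wording of the plan steps varies between SQLite versions.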