lmao is this normal?

That's perfect thank you! :)

I'm a bit confused rn.

Why am I getting this error? Did I forget to bind something?

16:45:04.582 Executing user command: npx wrangler tail AI

16:45:07.041

16:45:07.157 ✘ [ERROR] A request to the Cloudflare API (/accounts/userid/workers/scripts/AI/bindings) failed.

16:45:07.157

That's also cool ;D

Thanks :)

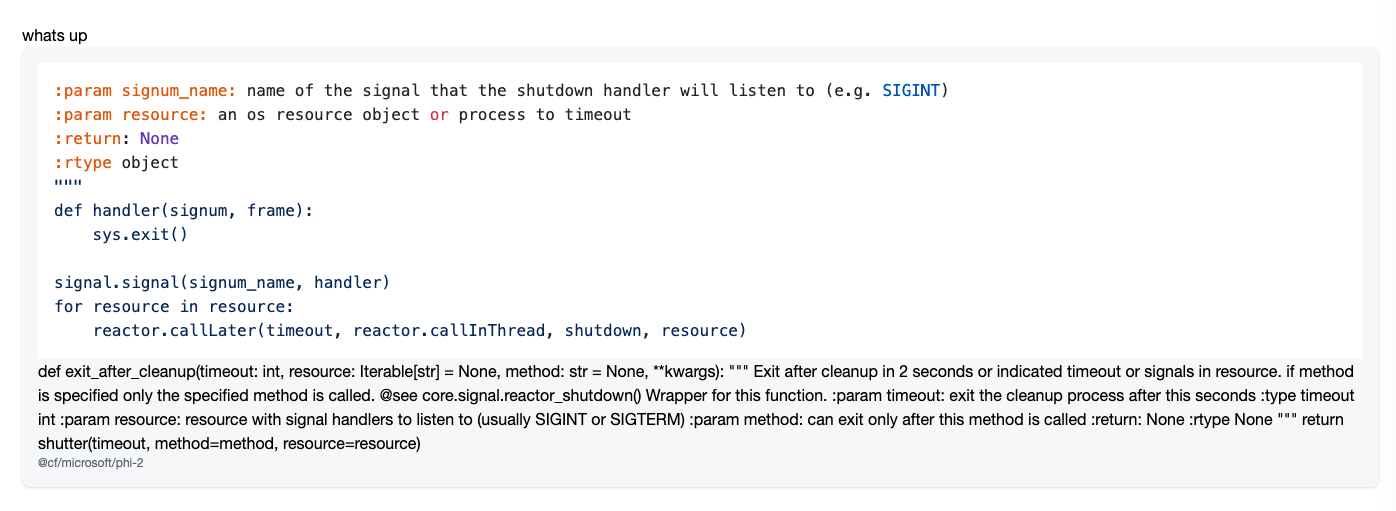

Keep in mind this is running Phi 2 which, while state-of-the-art, is still a very small model.

You'd likely get much better results with LLaMa 7B or 13B.

Maybe I'm misunderstanding, but wouldn't small specialized models be the go-to thing for Workers AI?

Last I remember, the models are run on ONNX after all, right?

Maybe I'm thinking of Constellation (isn't that also Workers AI, or did the vision change?), because I also see that the custom upload feature doesn't seem to exist.

Outperforms open ai embedding in theory

Works for me with code that looks something like this. Tested it with text-to-image generated images, i.e. 256x256 or 1024x1024 PNGs.

A. Using worker binding:

B. Using API, sending JSON:

C. Using API, sending PNG as is:

I get lower latency (tested with a black <1 kB 256x256 png) using a worker binding, but worker CPU time is another story. A quick test on a ~1.5 MB 1024x1024 png gave me ~700-900 ms CPU time using option A, ~100-150 ms using option B, and <5 ms using option C.
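The code for the three options did not survive in this log. As a rough illustration only: one plausible contributor to the CPU gap (an assumption, not something measured in this thread) is that the binding and JSON routes carry the image as an array of byte values, while option C forwards the PNG bytes untouched. The helper names below are hypothetical.

```typescript
// Hypothetical helpers for illustration; not the code from the message above.

// Options A and B (assumed shape): the image travels as a plain array of
// byte values, so each byte becomes a JS number and, for JSON, decimal text.
function toJsonBody(png: Uint8Array): string {
  return JSON.stringify({ image: Array.from(png) });
}

// Option C: the PNG bytes are used as the request body as-is,
// with no per-byte work in the Worker.
function toBinaryBody(png: Uint8Array): Uint8Array {
  return png;
}

// The 8-byte PNG signature stands in for a real image here.
const sample = new Uint8Array([137, 80, 78, 71, 13, 10, 26, 10]);
const jsonBody = toJsonBody(sample);
const binaryBody = toBinaryBody(sample);
```

For these 8 bytes the JSON body is already several times longer than the binary one, and building it touches every byte; on a ~1.5 MB image that per-byte work could add up.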

I'm getting CORS errors using LangChain:

How do I set CORS headers using langchain?

Hello, I'm calling the API from Node.js:

The problem is that image.png is a bad image. It's 2-3 MB in size, but I can't view it; the image viewer says it's corrupted. When I make the same request from the terminal, the file is okay:

Okay, that was easy... Maybe someone will also have this issue:

We need to specify the returned data type as binary.
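The original snippets are not preserved here, so this is only a sketch of why the file ends up corrupted when the response body is handled as text. It decodes PNG bytes as UTF-8 and encodes them again, which is roughly what a client does when it is not told the body is binary. The function names are hypothetical.

```typescript
// The 8-byte signature every valid PNG file starts with.
const PNG_SIGNATURE = [0x89, 0x50, 0x4e, 0x47, 0x0d, 0x0a, 0x1a, 0x0a];

function looksLikePng(bytes: Uint8Array): boolean {
  return PNG_SIGNATURE.every((value, index) => bytes[index] === value);
}

// Simulates a client that treats the response body as UTF-8 text
// and then writes that text back out as bytes.
function roundTripAsText(bytes: Uint8Array): Uint8Array {
  const text = new TextDecoder().decode(bytes);
  return new TextEncoder().encode(text);
}

const original = new Uint8Array(PNG_SIGNATURE);
const damaged = roundTripAsText(original);

// 0x89 is not valid UTF-8 on its own, so it is replaced during decoding
// and the signature no longer matches after the round trip.
const originalOk = looksLikePng(original);
const damagedOk = looksLikePng(damaged);
```

With fetch, the equivalent fix is to read the body via response.arrayBuffer() rather than response.text(); other HTTP clients have their own option for requesting a binary response.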

OKAY, it has been weeks that I have been getting this error:

InferenceUpstreamError: ERROR 3001: Unknown internal error

Can ANYONE tell me WHAT causes this error when calling @cf/baai/bge-large-en-v1.5?

since upgrading @cloudflare/ai from 1.0.53 to 1.1.0, using the stream: true option when invoking ai.run(…) no longer streams in the response as would be expected. instead, there are no messages for the most part until the very end when suddenly the entirety of the response streams in at once (still broken into chunks but in extremely rapid succession). here’s how i am using it:

Is there any timeline for when a multilingual embedding model will be added? It's a requirement for our use case and the only thing stopping us from using this product.

Honestly, nothing too specific. We just need to handle text that is potentially in different languages. We are currently using this model:

https://huggingface.co/intfloat/multilingual-e5-small

I believe BGE-M3 was also mentioned here at one point.

I see 1.1.10 comes with massive bugs

Have others been able to run text-to-image models in Next.js apps that run on Pages? I have a Next.js app set up via C3 and a server action performing the following:

However, I'm running into

Error: A Node.js API is used (DecompressionStream) which is not supported in the Edge Runtime. Learn more: https://nextjs.org/docs/api-reference/edge-runtime

I'm guessing there's some decompression going on under the hood with the AI interface?

If it matters, my next step is to write the response as a file to R2, so I'm not sure that I actually need to decompress for my purposes...

This is how I generate the image:

then put it in R2

It works in 1.0.53,

but it throws a TypeError in 1.1.0 when putting to R2, something about a ReadableStream. Sorry about the unclear error information; I had already rolled back to version 1.0.53.

it should be possible to improve the built-in @cloudflare/ai types to narrow the return type based on the received stream variable (stream: true means ReadableStream<any>, stream: false means AiTextToImageOutput or the like), which would remove the need for this workaround.

that said, you can resolve the typescript errors by casting the return value instead of defining the variable type. so in your example, you would do:

if instead you were passing in stream: true to the ai.run(…) function, you would want to cast the result to ReadableStream<any>:

any plans to launch a TTS model as well? like Coqui?
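The code for the casting workaround described above is missing from this log. A minimal sketch of the idea, using stand-in types and a stub instead of the real @cloudflare/ai package (every name here is hypothetical, and the real ai.run(…) is asynchronous):

```typescript
// Hypothetical stand-ins; the real types live in @cloudflare/ai and may differ.
type ImageOutput = Uint8Array;
type StreamOutput = { getReader(): unknown };

// Stub with a deliberately loose return type, standing in for ai.run(…).
// It is synchronous only to keep the sketch short.
function run(options: { stream?: boolean }): ImageOutput | StreamOutput {
  return options.stream
    ? { getReader: () => null }
    : new Uint8Array([1, 2, 3]);
}

// Annotating the variable does not compile, because the union return type
// is not assignable to the narrower type:
//   const image: ImageOutput = run({});

// Casting the return value compiles:
const image = run({}) as ImageOutput;
const stream = run({ stream: true }) as StreamOutput;
```

The cast only silences the compiler; it does not check the value at runtime, so it is only safe when the options passed really do produce that kind of result.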

Hello! I was wondering if some features were on the roadmap, and if not, I'd like to request them! I think that enforcing JSON output like Ollama and Llama.cpp do would be a great feature for devs who want to get structured data output consistently for parsing. Ollama lets you use format: "json" in your request and it handles applying the grammar for you. See here for an example: https://github.com/ollama/ollama/blob/main/docs/api.md#generate-a-completion

Another feature I'd like to see is the option to return token counts along with the response. It's hard to judge what my users are using with no token counts, and I don't really want to have to add a step in order to calculate them.

Is the

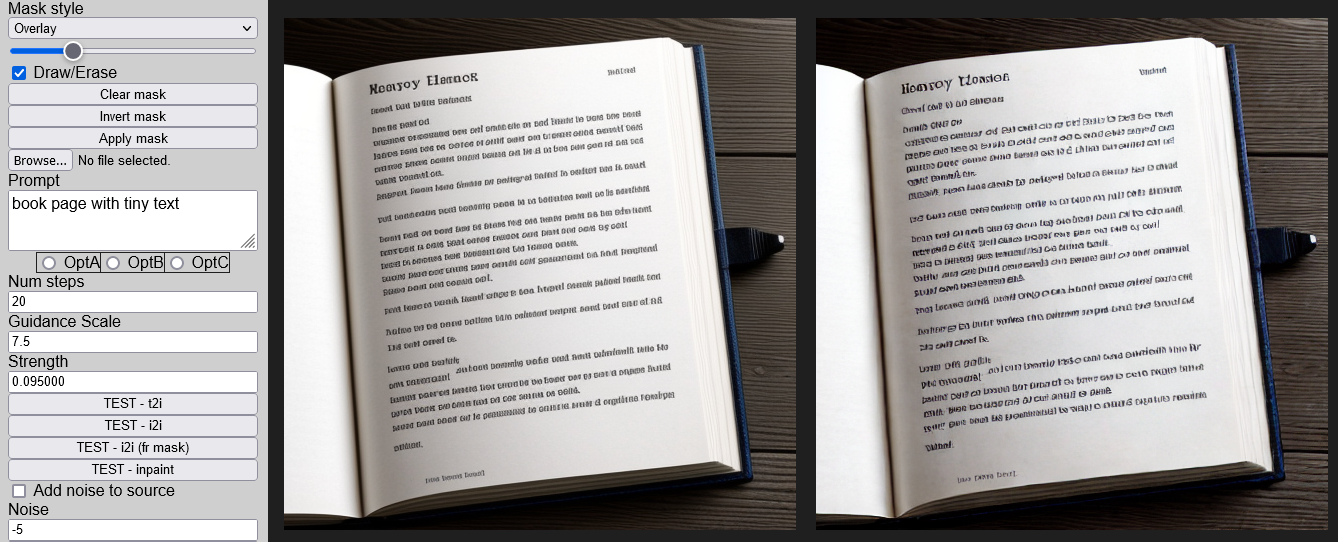

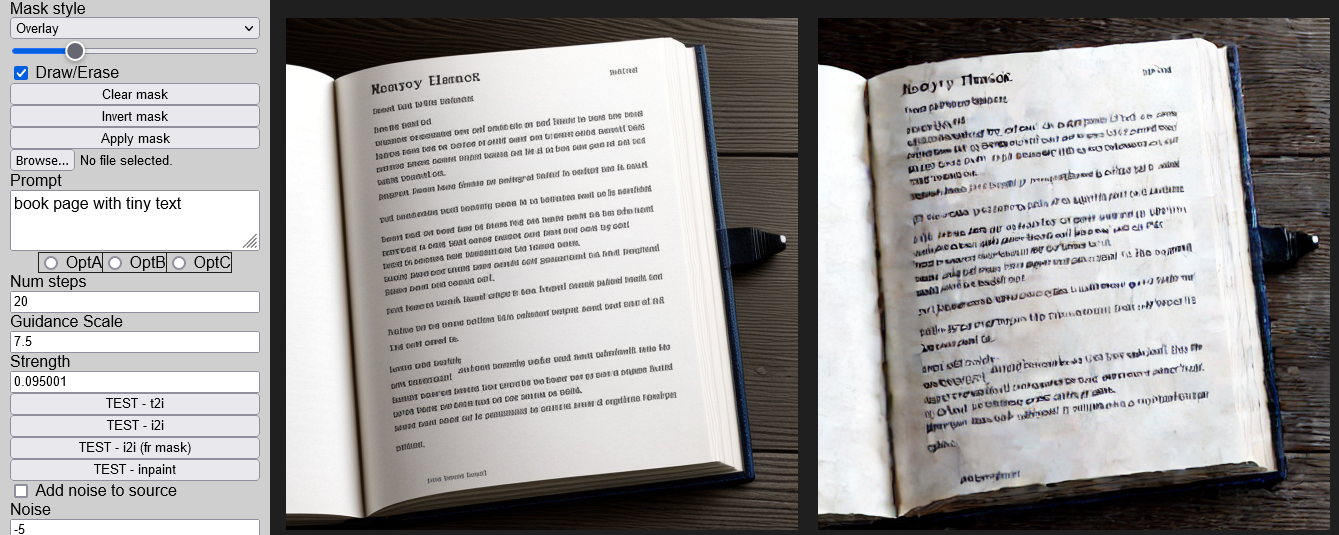

strengthparameter for @cf/runwayml/stable-diffusion-v1-5-img2img clamped and/or quantized in some weird way? Strength 0.095 generates an output that's close to identical to the source (albeit degraded), while 0.095001 generates a noisy version of the source. Strength 0.0 seems to produce as wildly different output as if it was set to 1.0. (Values outside the range 0.0 to 1.0 throws InferenceUpstreamError.)

While I'm at it, looks like @cf/runwayml/stable-diffusion-v1-5-inpainting throws InferenceUpstreamError on strength values below 0.05 (except 0). Haven't tested this on version 1.1.0, though.

@chand1012 i had this exact need (the format: 'json' option), and i couldn’t find a satisfactory solution out there, so i made a parseAsJSON util that takes a string and parses it as JSON even if it has syntax errors or too many closing curly braces, or not enough curly braces, or overly escaped characters, or unescaped characters, or pre- and post-ambles, or…

it’s a JS (typescript) package available on NPM called @acusti/parsing. here’s the readme: https://github.com/acusti/uikit/tree/main/packages/parsing

i also implemented some simple few-shot prompting modelled on what’s documented in this guide to get the LLM to return JSON: https://www.pinecone.io/learn/llama-2/#Llama-2-Chat-Prompt-Structure
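To make the "pre- and post-ambles" case concrete, here is a minimal sketch of pulling one JSON object out of a chatty LLM reply. It is a hypothetical helper written for this illustration, not the parseAsJSON implementation from @acusti/parsing, and it makes no attempt at the syntax repairs that package describes.

```typescript
// Hypothetical helper; NOT the implementation of parseAsJSON.
// It only handles the simplest case: extra text around one JSON object.
function extractJsonObject(text: string): unknown {
  const start = text.indexOf("{");
  const end = text.lastIndexOf("}");
  if (start === -1 || end <= start) {
    return null; // no object-shaped span found
  }
  try {
    return JSON.parse(text.slice(start, end + 1));
  } catch {
    return null; // span was not valid JSON; a real parser would attempt repairs
  }
}

const reply =
  'Sure! Here is the data:\n{"city": "Lisbon", "days": 3}\nLet me know if you need more.';
const parsed = extractJsonObject(reply) as { city: string; days: number } | null;
const missing = extractJsonObject("no json here");
```

Returning null instead of throwing keeps the calling code simple when the model ignores the requested format, at the cost of hiding why parsing failed.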

Hi all, I am trying to use Cloudflare AI locally, running wrangler dev with the '@cf/facebook/bart-large-cnn' model:

If I run wrangler dev --remote, it does work, but I cannot use remote mode as I also use Cloudflare Queues in my app together with Workers AI, and queues do not work in remote mode.

Any ideas what might be wrong? I am using "wrangler": "^3.36.0" and "@cloudflare/ai": "^1.1.0"

@TheMightyPenguin whenever i see Authentication error i run wrangler login. it surprises me how often i have to re-authenticate when doing local dev (once every few days). i’ve speculated that it might have to do with how often i’m connecting to a different wifi network and changing my IP address, but that might be totally wrong. regardless, re-authing has always resolved it for me.

that did it, thank you! (I also had to upgrade to the latest version of wrangler; I was a few minors behind and wrangler login was showing an error)