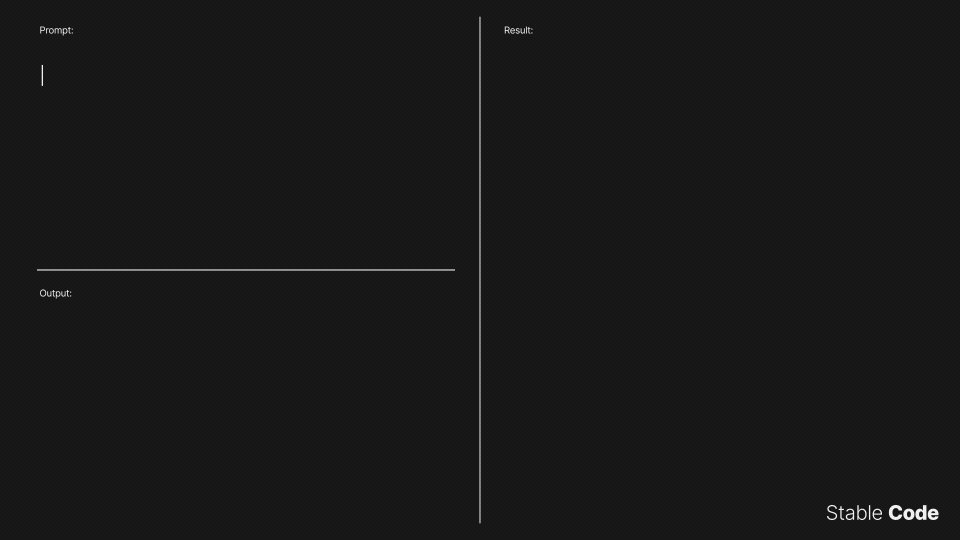

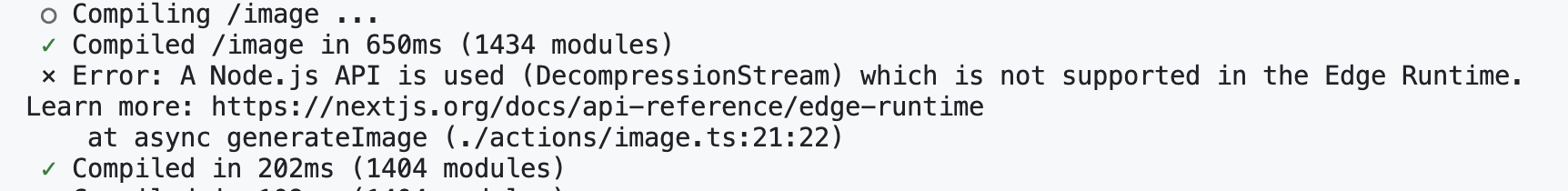

Since upgrading `@cloudflare/ai` from 1.0.53 to 1.1.0, using the `stream: true` option when invoking `ai.run(…)`