Hi, I am looking to migrate from Neon to D1 so that I can bind Pages Functions to it directly. My database stores a lot of text (which Postgres compresses automatically). Does D1 support automatic compression?
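
The thread doesn't say whether D1 compresses stored values, so it is safest not to assume it does. One option is to compress large text in application code and store the result in a BLOB column. Below is a minimal sketch using the standard `CompressionStream`/`DecompressionStream` web APIs, which are available in Workers and Node 18+. `compressText` and `decompressText` are hypothetical helper names.

```typescript
// Sketch: gzip text in application code before writing it to a BLOB column.
// Assumes a runtime with CompressionStream, DecompressionStream, Blob, and Response
// (Cloudflare Workers, modern browsers, Node 18+).

async function compressText(text: string): Promise<ArrayBuffer> {
  // Blob -> byte stream -> gzip transform -> collected into one ArrayBuffer
  const gzipped = new Blob([text]).stream().pipeThrough(new CompressionStream("gzip"));
  return new Response(gzipped).arrayBuffer();
}

async function decompressText(data: ArrayBuffer): Promise<string> {
  // Reverse path: gzip bytes -> gunzip transform -> UTF-8 text
  const plain = new Blob([data]).stream().pipeThrough(new DecompressionStream("gzip"));
  return new Response(plain).text();
}

// Usage with a D1 binding and a hypothetical "docs" table (not runnable here):
//   await env.DB.prepare("INSERT INTO docs (id, body) VALUES (?, ?)")
//     .bind(id, await compressText(body)).run();
```

The trade-off is that compressed values can't be filtered with `LIKE`, searched, or usefully indexed. This approach suits large bodies better than columns you query on.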

FYI: Prisma ORM support for D1 is now in preview: https://vxtwitter.com/prisma/status/1775170363791425667?s=46

Prisma ORM v5.12.0 just dropped! Here's what's new:

Cloudflare D1 Support (Preview)

createMany()

https://github.com/prisma/prisma/releases/tag/5.12.0

I wouldn't shard like that, though. I'd shard by date of birth, date of registration, or something similar (I used to do that in DynamoDB to reduce costs). Alternatively, I usually split the app into many small pieces of business logic and create a database for each of them.
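
Sharding by registration date can be sketched as a pure routing function. This version assumes quarterly shards and a hypothetical `users_YYYY_qN` naming scheme, where each name would map to its own database or table in your setup.

```typescript
// Sketch: route records to per-quarter shards by registration date (UTC).
// The "users_YYYY_qN" naming scheme is hypothetical.

function shardForDate(registeredAt: Date): string {
  const year = registeredAt.getUTCFullYear();
  const quarter = Math.floor(registeredAt.getUTCMonth() / 3) + 1; // months 0-11 -> Q1-Q4
  return `users_${year}_q${quarter}`;
}

// A date-range query only needs to touch the shards that the range overlaps.
function shardsForRange(from: Date, to: Date): string[] {
  const shards: string[] = [];
  let y = from.getUTCFullYear();
  let q = Math.floor(from.getUTCMonth() / 3); // 0-based quarter index
  const endY = to.getUTCFullYear();
  const endQ = Math.floor(to.getUTCMonth() / 3);
  while (y < endY || (y === endY && q <= endQ)) {
    shards.push(`users_${y}_q${q + 1}`);
    q += 1;
    if (q === 4) {
      q = 0;
      y += 1;
    }
  }
  return shards;
}
```

Date-based shards keep range queries cheap, but their sizes can be uneven, because one busy period may dominate. Hash-based sharding spreads load more evenly, but it turns range queries into fan-outs.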

By the way, when designing the system I try to store as much data as possible in S3 (sacrificing a bit of convenience) to keep the DB light and fast.

Only metadata is stored and queried in the DB.

It might not be the brightest idea, but it saves me a lot of money on AWS.
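
The split of payloads in S3 and metadata in the DB can be sketched as follows. `ObjectStore` and `MetadataStore` are hypothetical minimal interfaces that stand in for an S3/R2 client and a database table. They are not real SDK types.

```typescript
// Sketch: large payloads go to object storage; only metadata goes to the DB.
// ObjectStore / MetadataStore are hypothetical interfaces, not a real SDK.

interface ObjectStore {
  put(key: string, body: string): Promise<void>;
}

interface DocumentMeta {
  id: string;
  ownerId: string;
  objectKey: string; // pointer into the object store
  sizeBytes: number;
  createdAt: string; // ISO-8601 timestamp
}

interface MetadataStore {
  insert(meta: DocumentMeta): Promise<void>;
}

async function saveDocument(
  objects: ObjectStore,
  metadata: MetadataStore,
  id: string,
  ownerId: string,
  body: string,
): Promise<DocumentMeta> {
  const objectKey = `docs/${ownerId}/${id}`;
  // Write the payload first so a metadata row never points at a missing object.
  await objects.put(objectKey, body);
  const meta: DocumentMeta = {
    id,
    ownerId,
    objectKey,
    sizeBytes: new TextEncoder().encode(body).byteLength, // UTF-8 byte length
    createdAt: new Date().toISOString(),
  };
  await metadata.insert(meta);
  return meta;
}
```

The object is written first, so a failure between the two steps leaves an orphaned object rather than a dangling row. A periodic cleanup job can remove orphans.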

Yeah, pretty much what Rob said. For example, my service has a free tier, so those users are low revenue but also low read/write/storage, which means a traditional monolithic DB is generally fine; I just need to upgrade its size when necessary. D1 would be significantly better for auto-scaling and data separation, though (once dynamic binding and tooling are in place to manage the schema across that many instances).

Workers for Platforms raises the limits. Maybe reach out to someone and see what the requirements are to get that enabled.

There are workarounds I could use to adopt D1 today, like sharing DBs between users as you said, but I'm using Postgres right now and rely on RLS to keep data separate. I'd rather have a guarantee of data separation than refactor everything to make sure data stays separate (with the added risk of a future developer error leaking data), but that's my personal preference.

It's the kind of thing I'll consider once I'm fine signing up for an enterprise contract. By that point, though, migrating to D1 will be even more work, so I'll likely have higher priorities unless I'm facing technical issues with my current setup.

Or skip freemium: all my users pay $5, which would mean $250k MRR by the time the limit is reached, so I'm sure I can afford enterprise then... waiting on dynamic binding.
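
Here is the arithmetic behind that figure. It assumes one database per paying user, and it treats the 50k-database count mentioned later in this thread as the limit:

```latex
50\,000\ \text{users} \times \$5\ \text{per month} = \$250\,000\ \text{MRR}
```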

Not very D1-related, but how do those of you using Drizzle handle dates? A simple createdAt column with CURRENT_TIMESTAMP as the default doesn’t work for me.

It gives “Invalid date” upon data retrieval

Drizzle ORM is a lightweight and performant TypeScript ORM with developer experience in mind.

ty worked
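
The fix that worked isn't preserved here, so what follows is an assumption about the cause. SQLite's `CURRENT_TIMESTAMP` stores UTC text such as `2024-04-02 12:34:56`. A Drizzle `integer(..., { mode: "timestamp" })` column, however, decodes the stored value as Unix seconds. Converting that text to a number gives `NaN`, which shows up as an invalid date. This dependency-free sketch mimics both decodings. The helper names are hypothetical and are not Drizzle APIs.

```typescript
// What an integer "timestamp" column expects: Unix epoch seconds.
function fromUnixSeconds(value: unknown): Date {
  // Number("2024-04-02 12:34:56") is NaN, so this yields an Invalid Date for text values.
  return new Date(Number(value) * 1000);
}

// What DEFAULT CURRENT_TIMESTAMP actually stores: "YYYY-MM-DD HH:MM:SS" in UTC, no zone suffix.
function fromSqliteText(value: string): Date {
  return new Date(value.replace(" ", "T") + "Z");
}

const stored = "2024-04-02 12:34:56"; // value written by DEFAULT CURRENT_TIMESTAMP
console.log(fromUnixSeconds(stored).getTime()); // NaN
console.log(fromSqliteText(stored).toISOString()); // 2024-04-02T12:34:56.000Z
```

A commonly used option in a Drizzle SQLite schema is to keep the integer timestamp column and default it to epoch seconds, for example `` integer("created_at", { mode: "timestamp" }).default(sql`(unixepoch())`) ``. Another option is a `text` column that is parsed as in `fromSqliteText`.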

Are you able to use the limit request form to get the per-database size limit increased now? I’m happy with no replicas as a trade-off; I just want to be future-proof with more storage.

All existing databases (if you have a paid Workers plan) can grow to 10GB each - https://developers.cloudflare.com/d1/platform/changelog/#d1-is-generally-available

Cloudflare Docs

D1 is now generally available and production ready. Read the blog post for more details on new features in GA and to learn more about the upcoming D1 …

Are you able to request a limit beyond that or is it still a hard-coded value like in the beta?

We don’t currently increase that limit - it’s not that it’s hard coded, it’s that we have a very (very) high bar for performance & cold starts.

We’re continuing to work on raising it over time - and as we do it’ll just work. But also unlikely a single D1 DB will be 100GB (which is a large transactional DB by any standard)

Keep in mind that a 10GB database storing user records @ 1KB per row = 10M users.
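
Here is the same estimate in decimal units. It ignores index and page overhead, which lower the real number somewhat:

```latex
\frac{10\ \text{GB}}{1\ \text{KB per row}}
  = \frac{10^{10}\ \text{bytes}}{10^{3}\ \text{bytes per row}}
  = 10^{7}\ \text{rows} = 10\text{M users}
```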

That's fair yeah, we're considering a serverless migration at work and our current DB is 108GB (we can't split by user with our use case) so size is the issue

Does a SQLite view improve query speed? I'm not planning to join the view with anything else.

Same here. I tried specifying a region and it works.

@geelen

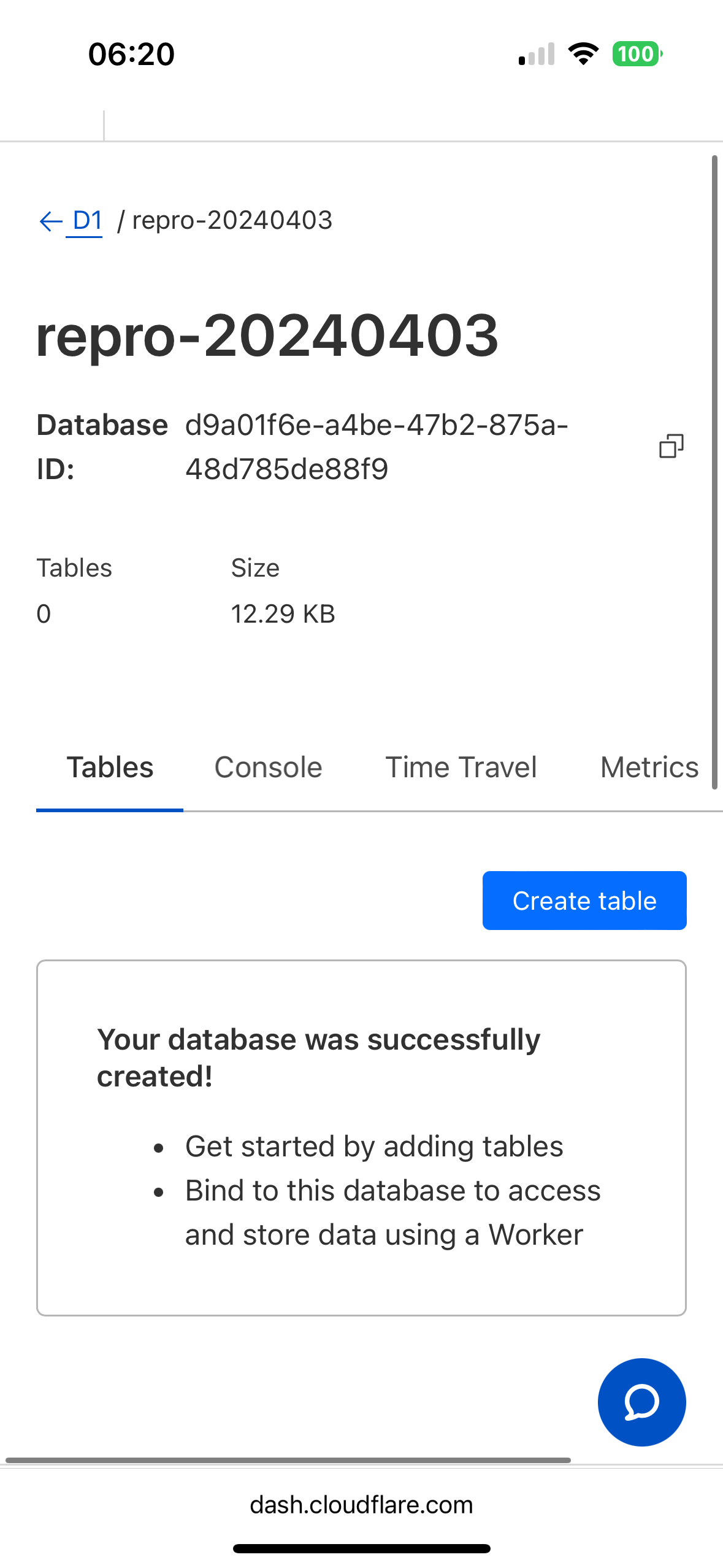

Are you still seeing this? I was able to create a new DB just now.

OK, looking into it. My new DB was automatic (based on your previous comment).

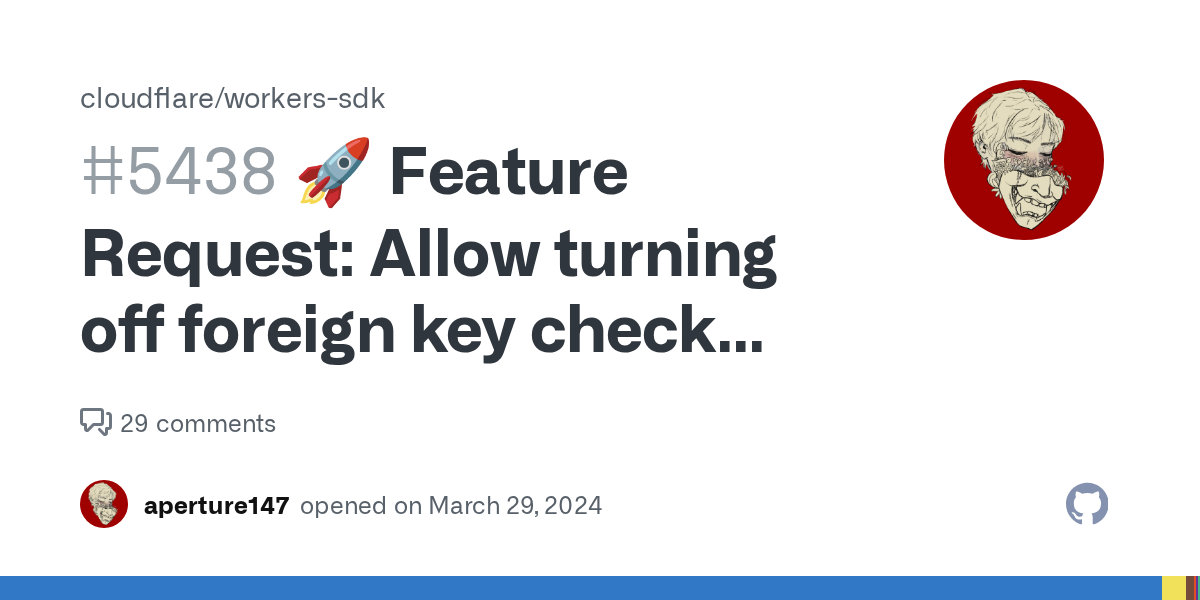

Hi guys, is there any way to turn off foreign key checks while migrating the database? Some table-altering tasks require the table to be dropped, which cascades to the tables that reference it.

A sample scenario was mentioned in this issue https://github.com/cloudflare/workers-sdk/issues/5438

GitHub

Some table altering tasks in SQLite cannot be achieved without replacing it with a new table (by dropping - recreating the table) (like adding foreign keys, changing primary keys, updating column t...

checkout https://developers.cloudflare.com/d1/build-with-d1/foreign-keys/#defer-foreign-key-constraints

Cloudflare Docs

D1 supports defining and enforcing foreign key constraints across tables in a database.

This works when creating or importing tables, but not when dropping them. Matt also suggested bracketing the migration with that pragma, but sadly it didn't work.

Got another question, there's still no way to dynamically bind to databases correct? I.e. bind to all 50k DBs from a Worker instead of the ~5k that'll fit in wrangler.toml? I believe you can use the API, but that kind of defeats the purpose.

Correct
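
Until dynamic binding exists, one workaround is to bind a fixed set of databases in wrangler.toml and map each tenant onto one of them deterministically. The sketch below assumes hypothetical binding names `DB_0` through `DB_{n-1}`. It uses 32-bit FNV-1a as the hash, and any stable hash would do instead.

```typescript
// Sketch: map a tenant ID onto one of N statically bound databases.
// Binding names DB_0..DB_{n-1} are hypothetical; the hash is 32-bit FNV-1a.

function fnv1a32(input: string): number {
  const bytes = new TextEncoder().encode(input);
  let hash = 0x811c9dc5; // FNV offset basis
  for (let i = 0; i < bytes.length; i++) {
    hash ^= bytes[i];
    hash = Math.imul(hash, 0x01000193) >>> 0; // multiply by FNV prime, keep unsigned 32-bit
  }
  return hash;
}

function bindingNameFor(tenantId: string, shardCount: number): string {
  if (!Number.isInteger(shardCount) || shardCount <= 0) {
    throw new RangeError("shardCount must be a positive integer");
  }
  return `DB_${fnv1a32(tenantId) % shardCount}`;
}

// In a Worker, the name would then index into env, e.g.:
//   const db = (env as unknown as Record<string, D1Database>)[bindingNameFor(tenantId, 8)];
```

This puts several tenants in each database, so per-tenant isolation still has to be enforced in queries, which is the RLS concern raised earlier. Changing `shardCount` later remaps most tenants, so either fix it up front or keep an explicit tenant-to-database lookup table.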

Yeah, I think it's the only way to add a foreign key or change a column type, but whenever I drop the table, all records in tables that reference it are deleted too, because of ON DELETE CASCADE.

My current approach is to copy the child table's data into a temporary table and insert it again after migrating the parent table. But when the child table has children of its own, the task becomes complex and wasteful.
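
Some context on SQLite behavior, which is not specific to D1: when foreign keys are enforced, `DROP TABLE` first performs an implicit `DELETE`, and that delete fires `ON DELETE CASCADE` actions. Deferring constraint checks therefore doesn't stop the child rows from being removed. The backup-and-restore approach can be generated mechanically, which keeps it manageable for multi-level children. The sketch below takes the descendant tables in parent-before-child order and wraps a parent migration that you supply. The `_backup_` prefix and the function name are hypothetical.

```typescript
// Sketch: generate SQL that backs up cascading descendant tables before a parent
// table is dropped/recreated, then restores them. `descendants` must be ordered
// parent-before-child so restored rows satisfy their foreign keys.

function wrapParentMigration(descendants: string[], parentMigration: string[]): string[] {
  const backup = (t: string) => `"_backup_${t}"`;
  const statements: string[] = [];

  // 1. Copy rows aside. CREATE TABLE ... AS SELECT keeps data and column order, not constraints.
  for (const table of descendants) {
    statements.push(`CREATE TABLE ${backup(table)} AS SELECT * FROM "${table}";`);
  }

  // 2. The caller's drop/recreate of the parent; cascades may empty the descendants here.
  statements.push(...parentMigration);

  // 3. Restore parents before children; DELETE first so rows that survived aren't duplicated.
  for (const table of descendants) {
    statements.push(`DELETE FROM "${table}";`);
    statements.push(`INSERT INTO "${table}" SELECT * FROM ${backup(table)};`);
  }

  // 4. Remove the backup tables.
  for (const table of descendants) {
    statements.push(`DROP TABLE ${backup(table)};`);
  }
  return statements;
}
```

The recreated parent must contain the same key values as before, typically by copying its rows into the new table inside `parentMigration`. Otherwise the restored child rows will violate their foreign keys. Running the statements as one migration or one D1 `batch()` call keeps the sequence atomic.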

Thank you!

The REST API is significantly slower than a Worker, would recommend a simple wrapper over a Worker instead: https://github.com/elithrar/http-api-d1-example
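
The linked repository is the reference implementation. To give a rough idea of its shape, here is a minimal sketch of a Worker that accepts a SQL statement over HTTP and runs it through the D1 binding. The `DB` and `API_TOKEN` names are assumptions. `D1Like` is a hand-written subset of the binding's `prepare().bind().all()` surface, included so the example stays self-contained.

```typescript
// Minimal subset of the D1 binding API used below (the real type is D1Database).
interface D1Like {
  prepare(query: string): {
    bind(...values: unknown[]): { all(): Promise<{ results: unknown[] }> };
  };
}

interface Env {
  DB: D1Like; // hypothetical binding name
  API_TOKEN: string; // hypothetical shared secret
}

const worker = {
  async fetch(request: Request, env: Env): Promise<Response> {
    if (request.method !== "POST") {
      return new Response("Method Not Allowed", { status: 405 });
    }
    if (request.headers.get("Authorization") !== `Bearer ${env.API_TOKEN}`) {
      return new Response("Unauthorized", { status: 401 });
    }
    let body: { sql?: unknown; params?: unknown };
    try {
      body = (await request.json()) as { sql?: unknown; params?: unknown };
    } catch {
      return new Response("Invalid JSON", { status: 400 });
    }
    if (typeof body.sql !== "string") {
      return new Response("Missing sql", { status: 400 });
    }
    const params = Array.isArray(body.params) ? body.params : [];
    // Parameters are bound, never interpolated into the SQL string.
    const { results } = await env.DB.prepare(body.sql).bind(...params).all();
    return new Response(JSON.stringify({ results }), {
      headers: { "Content-Type": "application/json" },
    });
  },
};

// In a real Worker module: export default worker;
```

Accepting arbitrary SQL over HTTP is only reasonable behind strong authentication. Exposing a fixed set of parameterized queries is usually safer.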

Can you try one more time when you're back online?

Is it possible to share a D1 database between a Worker and Pages locally?

I'm symlinking the wrangler folder. It seems to work, but I was curious whether there is a better way.

we are working on making it faster tho

I am trying to understand how session-based consistency would work in an application such as SvelteKit, given its data invalidation methods. I understand the example of consistency when executing multiple queries in the same function, but how can I guarantee that the next page reload will be up to date? Does anyone have any examples of this?
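
One way to make a reload see its own writes is to carry a session bookmark between requests, for example in a cookie. This sketch assumes the shape of D1's Sessions API: `withSession(bookmark)` starts a session at least as fresh as that bookmark, and `getBookmark()` returns the latest one. `SessionDb` is a hand-written stand-in for that surface, and the `d1_bookmark` cookie name is hypothetical. Check the current D1 docs for the exact signatures.

```typescript
// Sketch: persist a D1 session bookmark in a cookie so the next request
// reads data at least as fresh as the last query this client saw.
// SessionDb is a stand-in for the assumed Sessions API shape.

interface SessionDb {
  withSession(constraintOrBookmark?: string): {
    prepare(query: string): { all(): Promise<{ results: unknown[] }> };
    getBookmark(): string | null;
  };
}

const COOKIE = "d1_bookmark"; // hypothetical cookie name

function readBookmark(cookieHeader: string | null): string | undefined {
  for (const part of (cookieHeader ?? "").split(";")) {
    const [name, ...rest] = part.trim().split("=");
    if (name === COOKIE) return decodeURIComponent(rest.join("="));
  }
  return undefined;
}

async function loadWithSession(db: SessionDb, cookieHeader: string | null, sql: string) {
  // No bookmark yet -> undefined, i.e. the API's default constraint.
  const session = db.withSession(readBookmark(cookieHeader));
  const { results } = await session.prepare(sql).all();
  const bookmark = session.getBookmark();
  const setCookie = bookmark
    ? `${COOKIE}=${encodeURIComponent(bookmark)}; Path=/; HttpOnly; SameSite=Lax`
    : null;
  return { results, setCookie };
}
```

In SvelteKit, the cookie read and write would naturally sit in a server `load` function or a `handle` hook. That way, every navigation or `invalidate()`-triggered reload passes the latest bookmark back to D1.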