I'm working on a project where I need to upload videos to Cloudflare using JavaScript. I found some code that works fine for normal-sized videos, but I've run into an issue when trying to upload videos larger than 200 MB.

I've tried several solutions, but I haven't yet found an effective way to handle the uploading of large videos. Does anyone have an example or can you guide me on what I might be missing or how I can approach this issue?

ERROR CONNECTION

Do you have any examples that can help me? I would appreciate it. My problem is that I can't upload videos larger than 200 MB; smaller videos upload without any problem.

But I would like to upload the video from JavaScript to Cloudflare.

OK, excuse me.

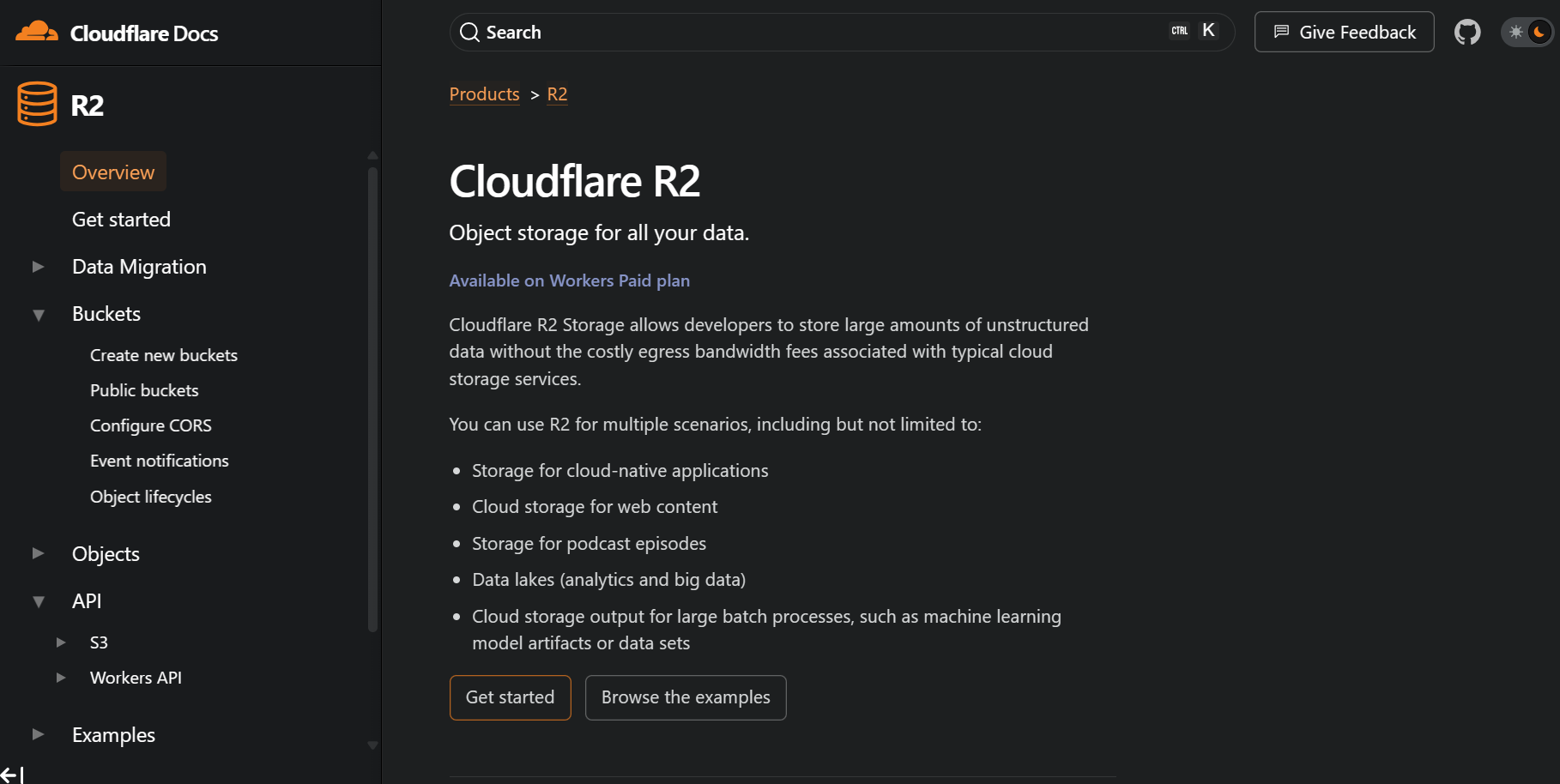

Do you recommend uploading the video to any of these APIs? Or tell me if I'm wrong

Is it possible to increase the R2 bucket limit? If so what's the ceiling/cost related to this?

The code is in JavaScript, and I would then like to use it in APEX.

The Worker only allows 100 MB.

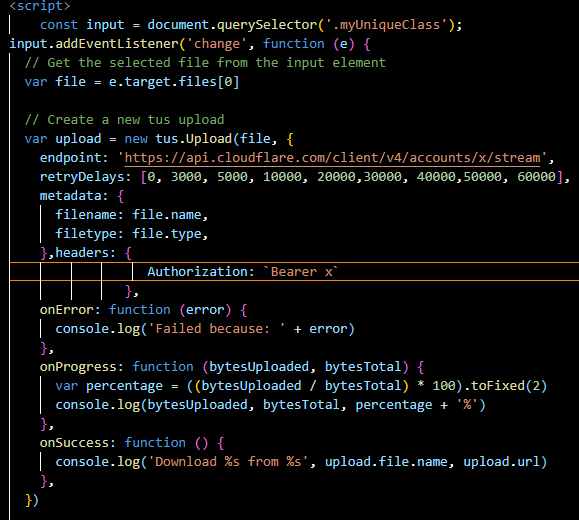

I found this information. Have you worked with TUS? It says there that this is a way to upload large videos, but it doesn't work for me.
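One thing that commonly breaks tus uploads is the chunk size. As far as I recall from the Cloudflare Stream docs, basic (non-tus) uploads are capped at 200 MB, and tus chunks must be at least 5,242,880 bytes, at most 209,715,200 bytes, and a multiple of 256 KiB. Treat those numbers as assumptions to verify against the current docs. A minimal sketch of a check, where `pickChunkSize` is a hypothetical helper and not part of any library:

```javascript
// Limits as recalled from the Cloudflare Stream tus docs (assumption: verify them).
const KIB_256 = 256 * 1024;
const MIN_CHUNK = 5 * 1024 * 1024;   // 5,242,880 bytes
const MAX_CHUNK = 200 * 1024 * 1024; // 209,715,200 bytes

// Hypothetical helper: clamp the desired chunk size into the allowed range,
// then round it down to a multiple of 256 KiB.
function pickChunkSize(desiredBytes) {
  const clamped = Math.min(Math.max(desiredBytes, MIN_CHUNK), MAX_CHUNK);
  return Math.floor(clamped / KIB_256) * KIB_256;
}
```

If you use tus-js-client, the result would go into the `chunkSize` option of the upload. Whether that is the cause of the failure here is a guess, since the actual error isn't shown.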

To upload files larger than 300 MB I need to use Workers. The videos I have seen are too complex to implement and integrate. Is there any chance of increasing the upload limit in R2 itself to about 5 GB?

You mean splitting the files?

How do I do that?
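Splitting here means a multipart upload: the file is cut into byte ranges, each range is uploaded as its own part, and the parts are joined at the end. A minimal sketch of the splitting step only, assuming the usual S3-style limits (every part except the last at least 5 MiB, at most 10,000 parts). `planParts` and the 100 MiB default are hypothetical choices for illustration:

```javascript
const MIN_PART = 5 * 1024 * 1024; // S3-style minimum for every part but the last
const MAX_PARTS = 10000;          // S3-style maximum number of parts

// Hypothetical helper: compute the byte range of each part.
// `end` is exclusive, so a range can be passed directly to file.slice(start, end).
function planParts(totalBytes, partBytes = 100 * 1024 * 1024) {
  if (partBytes < MIN_PART) throw new RangeError("part size below 5 MiB");
  const count = Math.ceil(totalBytes / partBytes);
  if (count > MAX_PARTS) throw new RangeError("too many parts; use a larger part size");
  const parts = [];
  for (let i = 0; i < count; i++) {
    const start = i * partBytes;
    const end = Math.min(start + partBytes, totalBytes);
    parts.push({ partNumber: i + 1, start, end });
  }
  return parts;
}
```

Each range would then be sent with the S3-compatible `UploadPart` operation and finished with `CompleteMultipartUpload`. In practice an S3 client or a tool that handles multipart for you does this automatically, which is probably the easier route if you are new to this.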

I'm literally new to this, I don't know what to do.

Even when I read, I didn't understand anything

Can you help me do that?

I want to upload a zip file that is 3 GB.

From some YouTube videos and developer blogs I found that this can be done with Workers, using something called Wrangler. I tried the commands from the videos, but unfortunately they failed.

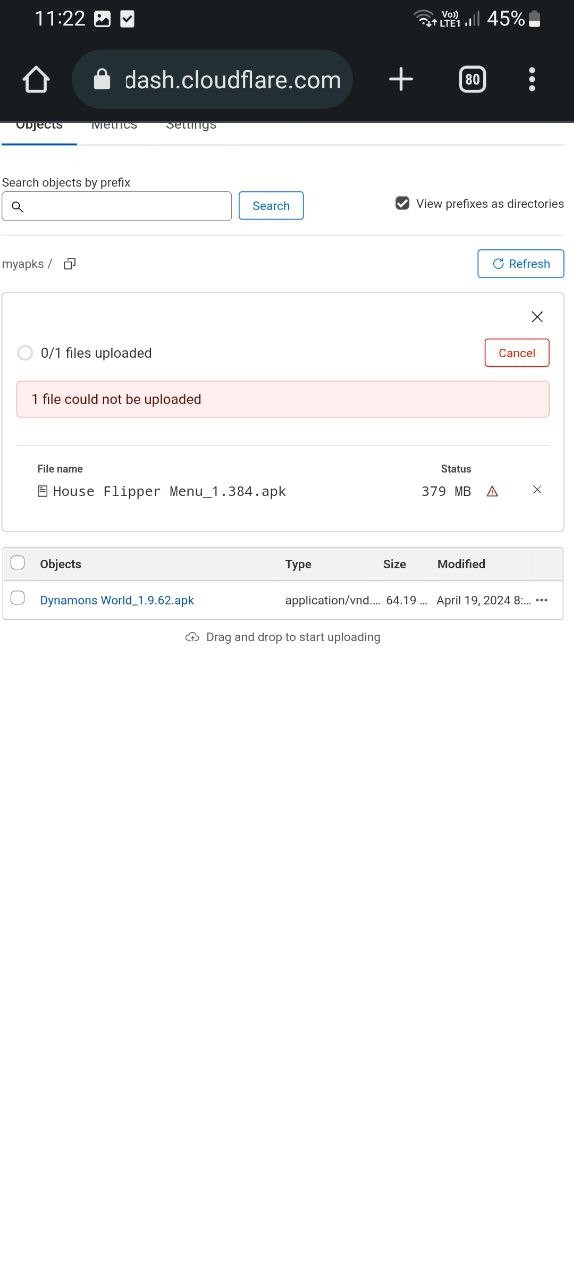

It says, "file could not be uploaded"

I tried an .apk file too, and the same thing happens.

Yes, I have a Mod APK download site, where I provide games/apps

Their extensions are .apk and .zip; most are .apk.

Yes, I did nothing else

I'm new to this. Guide me and I'll learn ASAP.

I just need to upload these files, nothing more

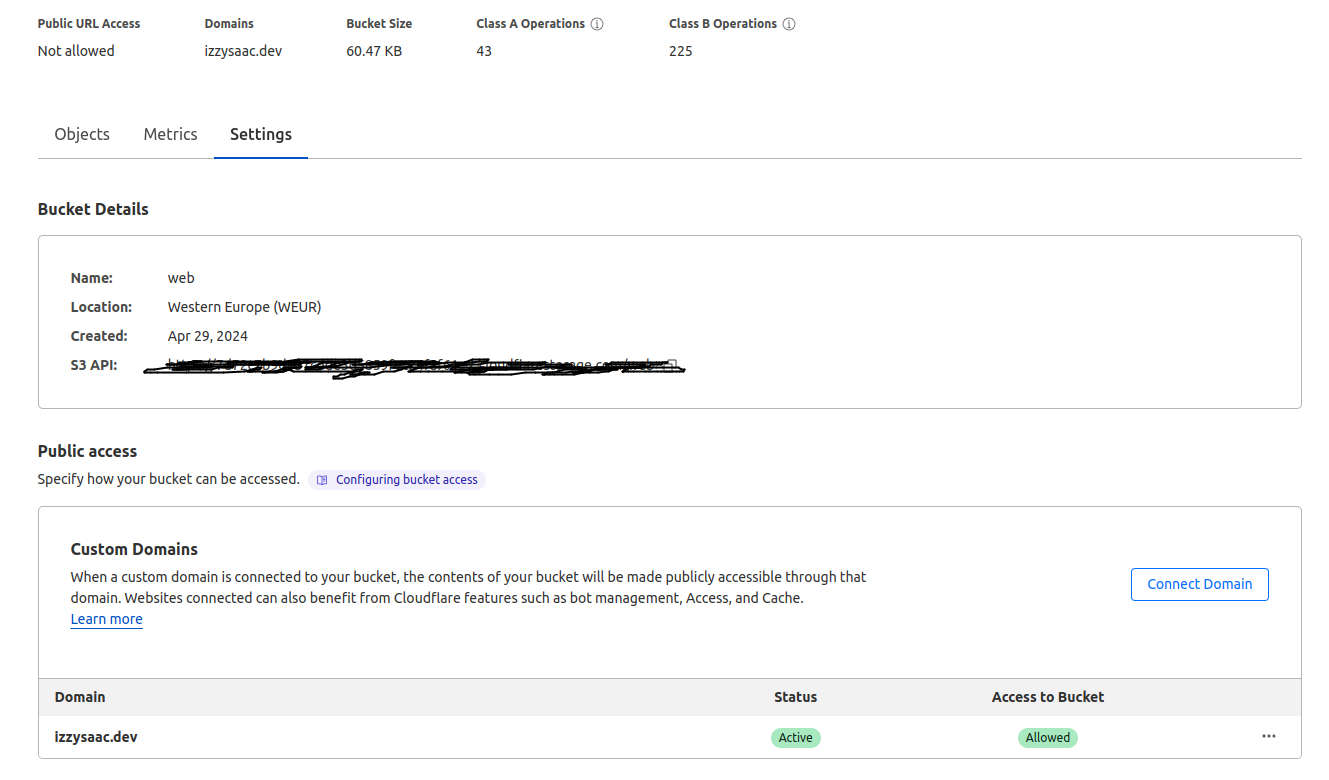

Hello there, I'm new to R2. I want to upload static files like .webp or .png and access them. I have already uploaded the files and connected a custom domain to my bucket, with status active. But when I try to access the files, I can't. Also, Public URL Access is 'Not allowed'. Not sure what I'm missing.

lol I just tried and it just worked.

I was just testing on izzysaac.dev and was getting this

Makes sense, I just bought the .dev when I already had the .com domain. I'm very much a newbie.

Hi, I am trying to configure R2 with this Joomla plugin but I get the following error: "Akeeba:internalGetBucket(): [500] Unauthorized:SigV2 authorization is not supported. Please use SigV4 instead."

This plugin works perfectly with AWS S3 and with DigitalOcean Spaces (which is also an S3-compatible service).
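The error says the plugin is signing requests with Signature Version 2, while R2 only accepts Signature Version 4, so the fix would have to be a SigV4 option in the plugin if it has one. For context, this is roughly what differs: SigV4 derives a per-day signing key from the secret, the date, the region and the service. A minimal sketch of that derivation in Node.js, assuming R2's region name `auto` and service `s3`; `deriveSigningKey` is a hypothetical name, not the plugin's or any SDK's API:

```javascript
const crypto = require("crypto");

// HMAC-SHA256 of `data` under `key`, returned as a Buffer.
function hmac(key, data) {
  return crypto.createHmac("sha256", key).update(data, "utf8").digest();
}

// Hypothetical helper showing the SigV4 signing-key chain.
// dateStamp is the request date as YYYYMMDD.
function deriveSigningKey(secretAccessKey, dateStamp, region = "auto", service = "s3") {
  const kDate = hmac("AWS4" + secretAccessKey, dateStamp);
  const kRegion = hmac(kDate, region);
  const kService = hmac(kRegion, service);
  return hmac(kService, "aws4_request");
}
```

This only shows the key derivation, not the full request signing, and is not something you would normally write yourself; an S3 client that supports SigV4 handles it.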

Hello, does R2 incur a cost when a request is denied? Say you send a PUT request and it is denied.

No, auth failures are not billed (unlike AWS).

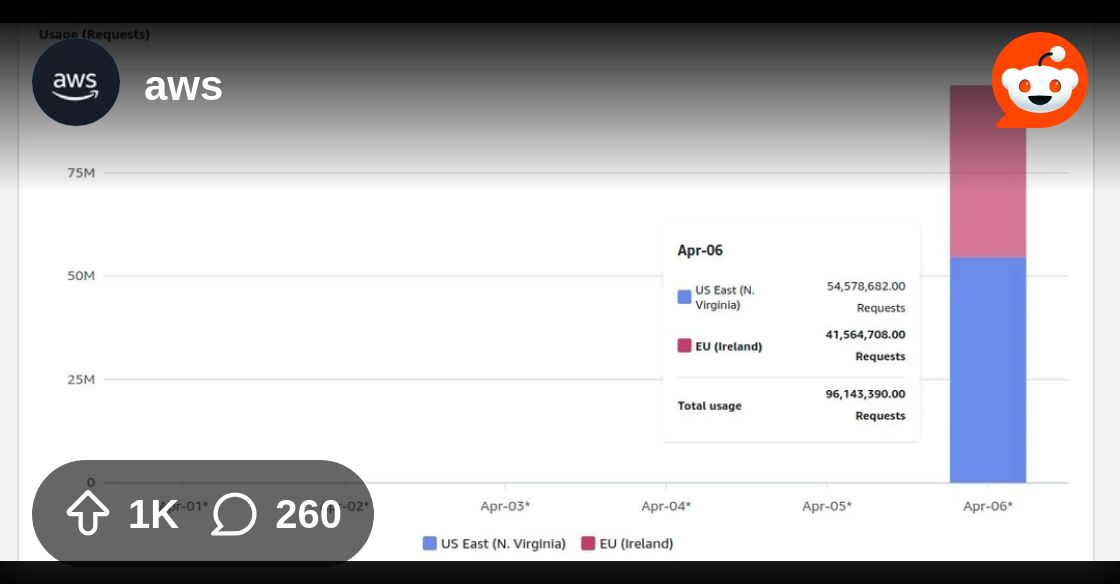

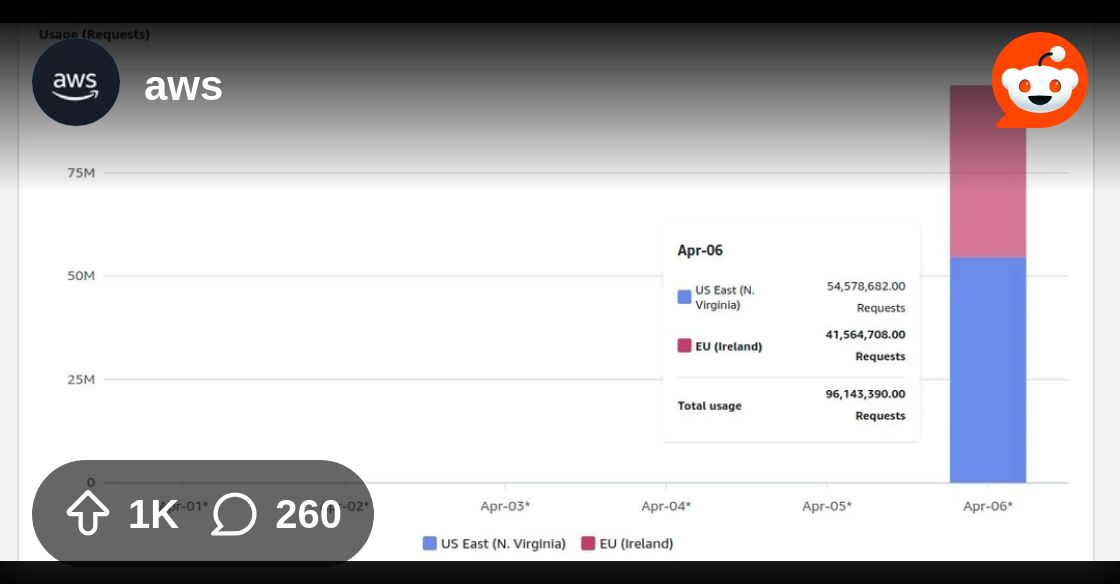

Thank you, Erisa. Reddit and X are excited about this thread today: https://www.reddit.com/r/aws/comments/1cg7ce8/how_an_empty_private_s3_bucket_can_make_your_bill/

Yeah I saw that, not great

DDoB: Distributed Denial of Budget

I came here to ask the exact same question, glad someone asked first and got an answer

AWS is crazy for allowing that…

I don't think there is, I'll see about adding that

Hi! I have an R2 bucket which I created with public access and a public r2.dev subdomain. I uploaded some images and used the r2.dev URL to fetch the images on the website. It was working fine until last month. Recently, it started behaving strangely: most of the time the image links don't work, and only occasionally do they load. Does anyone else have this issue?

What is the error code when it doesn't work?

But importantly: https://developers.cloudflare.com/r2/buckets/public-buckets/#enable-managed-public-access

Public access through r2.dev subdomains is rate limited and should only be used for development purposes. You should use a Custom Domain instead.
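Once a custom domain is connected to the bucket, the object paths stay the same and only the host changes, so existing r2.dev links can be rewritten mechanically. A small sketch, where `toCustomDomain` and the example hostnames are made up for illustration:

```javascript
// Hypothetical helper: swap an r2.dev host for the bucket's custom domain,
// leaving the path and query untouched. Other URLs are returned unchanged.
function toCustomDomain(url, customHost) {
  const u = new URL(url);
  if (u.hostname.endsWith(".r2.dev")) {
    u.protocol = "https:";
    u.host = customHost;
  }
  return u.toString();
}
```

For example, `toCustomDomain("https://pub-abc123.r2.dev/images/cat.webp", "cdn.example.com")` would give a URL on `cdn.example.com` with the same `/images/cat.webp` path.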

Hi, would R2 be susceptible to the same S3 issue outlined here? -> https://twitter.com/lauramaywendel/status/1785064878643843085?s=46&t=uiFKqgo_CD2_yKd9HE5hRg&fbclid=IwAR1ERL19bPW53lsmCBNZTWSZdH0S68J88UKjv-WT5kg2vB-fspCCyr6e3wo

if so, what would be the recommended approach to avoid this?

So apparently if someone knows / guesses the name of your S3 bucket - even if it's private (!) - they can just bankrupt you by sending infinite PUT requests and there is nothing you can do about it.

requests get rejected

but AWS still counts it as a write operation against…

4/29/24, 9:53 PM

Ahh thank you, I completely missed that, I just joined.

Hi guys, I need your help, I uploaded my files to R2 and connected my domain to make it public

But in the generated URL, folders and files are being separated by '%2F'.

Like the following example: https://domain/Projects%2Fhome%2FServers%2Fservers-data-orig.json

Whereas before it was something like: http://localhost/Projects/home/Servers/servers-data-orig.json

I would like to know if there is any configuration for how the URL is generated, so that the folders are separated by '/'?

Since my application accesses this URL to check for updates, the file is also being downloaded under a different name.
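A '%2F' in the path usually means the whole object key was percent-encoded in one go, which escapes the slashes too. I can't tell from the message where the URL is built, so this is only a sketch assuming it is built in your own code: encode each path segment separately and join them with '/'. `publicUrlForKey` is a hypothetical helper name:

```javascript
// Hypothetical helper: build a public URL from a base URL and an object key,
// encoding each path segment but keeping the '/' separators literal.
function publicUrlForKey(baseUrl, key) {
  const path = key.split("/").map((segment) => encodeURIComponent(segment)).join("/");
  return baseUrl.replace(/\/+$/, "") + "/" + path;
}
```

By contrast, calling `encodeURIComponent` on the full key is what produces `Projects%2Fhome%2FServers%2F...`.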

Hello, I want to serve my WordPress website images through the Cloudflare CDN. Which one should I use for this: Cloudflare Images or R2?

Thank you  :cloudflare:

Less than 10 MB. Single upload via the Go SDK.

Upload via the AWS SDK was also slow.

I'm not able to call the R2 API from Postman. Does anyone know if there's a trick to it? Every time, it returns a different 403 asking for something different.

Is R2 globally distributed automatically or is it based on bucket location hint?