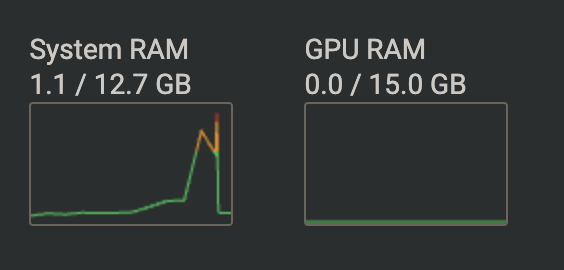

The limit for Llama 3 (on Cloudflare) seems to be ~2800 tokens, though. The model stops mid-sentence when the prompt/messages plus the generated text exceed that limit.
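One way to work around this is to trim older messages before sending the request, so the prompt plus the reserved output stays under the limit. Below is a minimal sketch, not an official approach. `TOKEN_LIMIT` is just the ~2800 figure observed above, the 4-characters-per-token estimate is a rough assumption (a real tokenizer will give different counts), and `estimate_tokens` / `trim_messages` are hypothetical helper names, not part of any Cloudflare API.

```python
import math

TOKEN_LIMIT = 2800      # approximate combined limit observed above, not an official figure
CHARS_PER_TOKEN = 4     # rough heuristic for English text (assumption)


def estimate_tokens(text: str) -> int:
    """Crude token estimate based on character count."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def trim_messages(messages, max_output_tokens=512, limit=TOKEN_LIMIT):
    """Keep system messages plus the newest messages that fit the budget.

    The budget is the combined limit minus the tokens reserved for the reply.
    """
    budget = limit - max_output_tokens
    if budget <= 0:
        raise ValueError("max_output_tokens leaves no room for the prompt")

    system = [m for m in messages if m["role"] == "system"]
    rest = [m for m in messages if m["role"] != "system"]

    used = sum(estimate_tokens(m["content"]) for m in system)
    kept = []
    # Walk from newest to oldest and stop at the first message that does not fit.
    for message in reversed(rest):
        cost = estimate_tokens(message["content"])
        if used + cost > budget:
            break
        kept.append(message)
        used += cost

    kept.reverse()  # restore chronological order
    return system + kept
```

The trimmed list can then be passed as the `messages` of the request, with the output cap set to the same `max_output_tokens` value. Since the estimate is crude, leaving some extra headroom is probably wise.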