This typically means your query is running for too long. Do you have an index on `user_id`?


`execute` `--file` `--command "CREATE TRIGGER delete_item_tags after delete on list_item begin DELETE FROM list_item_tags WHERE item_id = OLD.id; END;"`

`begin` `BEGIN` `BEGIN`

![[wrangler] Made D1 BEGIN and CASE regex case insensitive by polvall...](https://images-ext-1.discordapp.net/external/NPSWk-yEcuFJG-Ln2ONb01LK_s7_DWt__2lag0H4l2g/https/opengraph.githubassets.com/e9577be72f920471d0c1bb206d5c80e5f17dfe6a9065ab870e22ca0e1abdff99/cloudflare/workers-sdk/pull/5889)


5/22/24, 2:51 PM

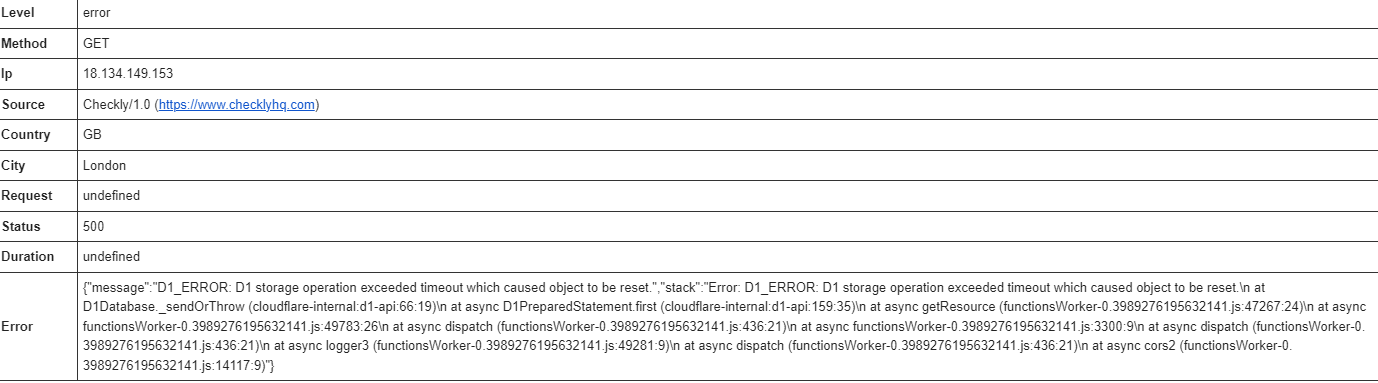

`.sqlite` `.wrangler` `User`

`DROP TABLE User;` - is it expected that running the migration command again returns `:white_check_mark: No migrations to apply!`?

`id`

`Error: D1_ERROR: D1 storage operation exceeded timeout which caused object to be reset.` Anything going on?

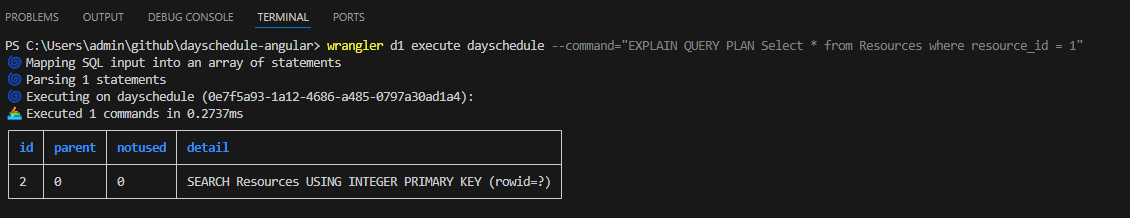

EXPLAIN QUERY PLAN
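To see whether a slow query can use an index, it can be prefixed with `EXPLAIN QUERY PLAN` and the plan rows inspected. The snippet below is only a rough sketch: the `orders` table, the `idx_orders_user_id` index, and the `planUsesIndex` helper are hypothetical, and it assumes the plan rows carry a `detail` column as in stock SQLite.

```javascript
// Sample rows shaped like SQLite's EXPLAIN QUERY PLAN output.
// Assumption: each row has a `detail` string; table and index names are made up.
const withoutIndex = [{ id: 2, parent: 0, notused: 0, detail: "SCAN orders" }];
const withIndex = [
  {
    id: 3,
    parent: 0,
    notused: 0,
    detail: "SEARCH orders USING INDEX idx_orders_user_id (user_id=?)",
  },
];

// Hypothetical helper: true when at least one plan step is an indexed SEARCH.
function planUsesIndex(planRows) {
  return planRows.some((row) =>
    /^SEARCH\b.*\bUSING (COVERING )?INDEX\b/.test(row.detail)
  );
}

console.log(planUsesIndex(withoutIndex)); // false
console.log(planUsesIndex(withIndex)); // true

// In a Worker this might look like the following (untested, `db` is the D1 binding):
//   const { results } = await db
//     .prepare("EXPLAIN QUERY PLAN SELECT * FROM orders WHERE user_id = ?")
//     .bind(42)
//     .all();
//   if (!planUsesIndex(results)) {
//     // consider: CREATE INDEX idx_orders_user_id ON orders (user_id);
//   }
```

A plan that only shows `SCAN` steps usually means the whole table is being read, which is the typical cause of long-running queries on a filtered column.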

`Error: Too many API requests by single worker invocation`

I know Workers has a limit of 1000 requests per invocation, but I was wondering if there is a way to insert large amounts of rows with a single request, like Python's `executemany` command. I'm currently inserting a single row per request like this

`execute()` on a giant SQL insert statement string

    CREATE TRIGGER delete_item_tags
    AFTER DELETE ON list_item
    begin
      DELETE FROM list_item_tags
      WHERE item_id = OLD.id;
    END;

    const prepared = db.prepare("INSERT OR IGNORE INTO r2_objects_metadata (object_key, eTag) VALUES (?1, ?2)");
    const queriesToRun = newBucketObjects.map(bucketObject => prepared.bind(bucketObject.Key, bucketObject.ETag));
    await db.batch(queriesToRun);

    for (let i = 0; i < newBucketObjects.length; i++) {

      const Key = newBucketObjects[i].Key;
      const ETag = newBucketObjects[i].ETag;
      // One prepared statement and one request per row
      await db.prepare(
        `INSERT OR IGNORE INTO r2_objects_metadata
        (object_key, eTag) VALUES (?, ?)`
      ).bind(Key, ETag).run();
    }

    let massiveInsert = "";
    for (let i = 0; i < newBucketObjects.length; i++) {
      const Key = newBucketObjects[i].Key;
      const ETag = newBucketObjects[i].ETag;
      // Values are interpolated straight into the SQL text, so a single quote
      // in Key or ETag would break (or inject into) the statement
      const insert = `INSERT OR IGNORE INTO r2_objects_metadata (object_key, eTag) VALUES ('${Key}', '${ETag}');`;
      massiveInsert += '\n';
      massiveInsert += insert;
    }
    await db.exec(massiveInsert);
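
Another option, somewhere between one request per row and one giant SQL string, is to pack several rows into each parameterized `INSERT` and send those statements together. The sketch below is an illustration under assumptions, not a confirmed D1 recipe: `buildChunkedInserts`, `MAX_PARAMS`, and the sample objects are hypothetical, and the cap of 100 bound parameters per statement should be checked against the current D1 limits.

```javascript
// Assumed per-statement cap on bound parameters; verify against D1's documented limits.
const MAX_PARAMS = 100;
// Two columns per row: object_key and eTag.
const COLUMNS_PER_ROW = 2;

// Hypothetical helper: groups rows into multi-row INSERT statements,
// each with its own flat list of parameters to bind.
function buildChunkedInserts(objects, maxParams = MAX_PARAMS) {
  const rowsPerStatement = Math.max(1, Math.floor(maxParams / COLUMNS_PER_ROW));
  const statements = [];
  for (let start = 0; start < objects.length; start += rowsPerStatement) {
    const chunk = objects.slice(start, start + rowsPerStatement);
    const placeholders = chunk.map(() => "(?, ?)").join(", ");
    statements.push({
      sql: `INSERT OR IGNORE INTO r2_objects_metadata (object_key, eTag) VALUES ${placeholders}`,
      params: chunk.flatMap((o) => [o.Key, o.ETag]),
    });
  }
  return statements;
}

// Made-up input: 120 objects become statements of 50, 50 and 20 rows.
const sample = Array.from({ length: 120 }, (_, i) => ({
  Key: `file-${i}`,
  ETag: `etag-${i}`,
}));
const statements = buildChunkedInserts(sample);
console.log(statements.length); // 3
console.log(statements[0].params.length); // 100
console.log(statements[2].params.length); // 40
```

In a Worker, each entry could then be turned into a prepared statement with `db.prepare(s.sql).bind(...s.params)` and the resulting array passed to `db.batch`, which keeps the values parameterized instead of interpolated into the SQL text.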