There was so much fuss about GGUF models, people running out of VRAM, and Flux being too large that I spent the entire day today on my home PC (12GB 4070 Super) with ComfyUI, trying various models. To my surprise, I found the following:

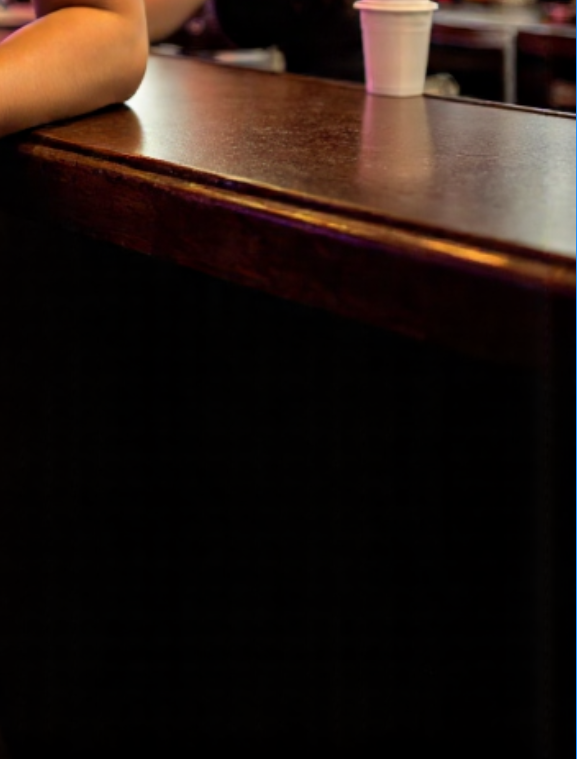

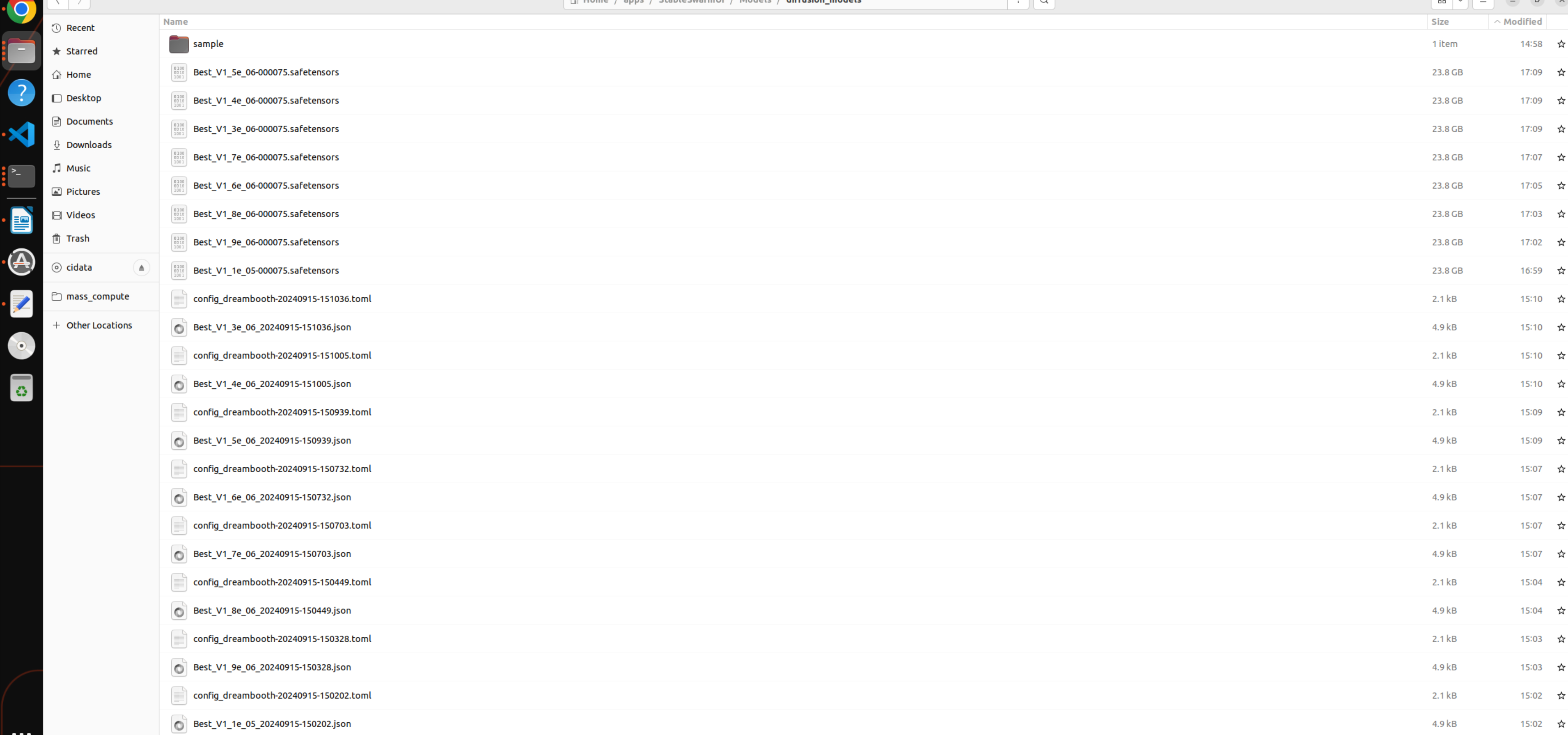

1) ComfyUI could load and generate with the original 23GB Flux Dev model (at a reasonable speed) on my PC, together with the FP8 T5XXL text encoder. I don't understand how people are running out of VRAM. It seems both SwarmUI and ComfyUI have some resource management going on internally that lets you use the Dev model. Am I missing something? Since it was working, I loaded Florence2 and SAM2 as well, and ended up generating and inpainting with relative ease.

2) As for the GGUF models, I actually found that they took much, much longer than the 23GB model to generate images! I tried Q6_K and Q8_0 and was able to do the above operations with them as well. The quality was good, but obviously not better than the full-size Dev model.

3) I found that SwarmUI somehow does a much better job at generating with LoRAs + Img2Img. On the other hand, SwarmUI seems unable to handle ControlNets + LoRAs at all; the results are laughable, tbh. The inpainting in ComfyUI is the best!