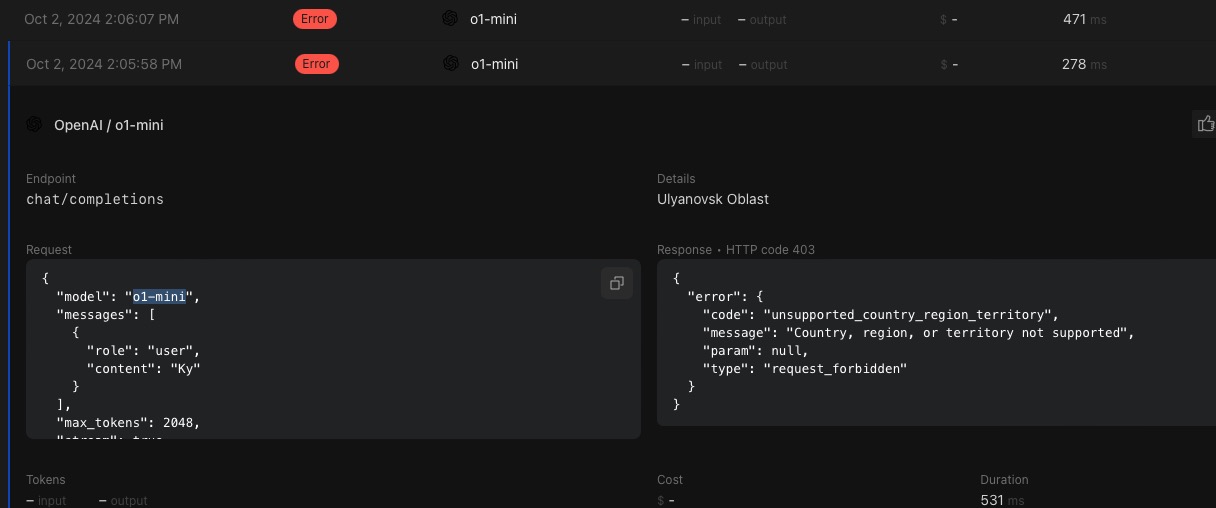

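```typescript
import { WorkerEntrypoint } from 'cloudflare:workers';

// Models this Worker will forward (o1-mini is intentionally not accepted)
const validModel = ['gpt-4o-mini', 'gpt-4o-mini-2024-07-18', 'gpt-4o', 'gpt-4o-2024-08-06', 'gpt-3.5-turbo-0125', 'gpt-3.5-turbo-1106'];

export default class extends WorkerEntrypoint<Env> {
  // HTTP entry point: read { model, messages } from the JSON body and delegate to rpc()
  async fetch(request: Request): Promise<Response> {
    const { model, messages } = (await request.json()) as { model: string; messages: Messages[] };
    return this.rpc(model, messages);
  }

  // Public method, so other Workers can also call it over RPC through a service binding
  async rpc(_model: string, messages: Messages[], colo?: string): Promise<Response> {
    // Strip the provider prefix: "openai/gpt-4o" -> "gpt-4o"
    const model = _model?.split('/')[1];

    if (!model || !validModel.includes(model)) {
      return new Response(JSON.stringify({ message: 'Invalid model' }), { status: 400 });
    }

    // ...

    const aiPayload = {
      model,
      messages,
      max_tokens: 2048,
      stream: true,
    };

    // ...

    // Forward the request to the gateway (cfGateway and token are not shown in this excerpt)
    const response = await fetch(cfGateway, {
      method: 'POST',
      headers: {
        'Content-Type': 'application/json',
        'Authorization': `Bearer ${token}`,
      },
      body: JSON.stringify(aiPayload),
    });

    // ...
  }
}
```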
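The model check and payload construction in `rpc()` can be pulled into a pure function so they are unit-testable without a network call. A minimal sketch follows, assuming a simple message shape: `buildGatewayPayload` and `ChatMessage` are hypothetical names, and `ChatMessage` stands in for the `Messages` type, which the excerpt does not define.

```typescript
// Same allowlist as the Worker above.
const validModel = [
  'gpt-4o-mini',
  'gpt-4o-mini-2024-07-18',
  'gpt-4o',
  'gpt-4o-2024-08-06',
  'gpt-3.5-turbo-0125',
  'gpt-3.5-turbo-1106',
];

// Assumed minimal message shape (hypothetical stand-in for Messages).
interface ChatMessage {
  role: 'system' | 'user' | 'assistant';
  content: string;
}

interface GatewayPayload {
  model: string;
  messages: ChatMessage[];
  max_tokens: number;
  stream: boolean;
}

// Returns the upstream payload, or null when the model id is rejected.
// "openai/gpt-4o" -> "gpt-4o"; an id without a "/" has no [1] element and is rejected.
function buildGatewayPayload(rawModel: string | undefined, messages: ChatMessage[]): GatewayPayload | null {
  const model = rawModel?.split('/')[1];
  if (!model || !validModel.includes(model)) {
    return null;
  }
  return { model, messages, max_tokens: 2048, stream: true };
}

const msgs: ChatMessage[] = [{ role: 'user', content: 'Hello' }];
console.log(buildGatewayPayload('openai/gpt-4o-mini', msgs)?.model); // gpt-4o-mini
console.log(buildGatewayPayload('gpt-4o-mini', msgs)); // null (no provider prefix)
console.log(buildGatewayPayload('openai/o1-mini', msgs)); // null (not on the allowlist)
```

Under this split, `rpc()` would only map a `null` result to the 400 response; note that an unprefixed id such as `gpt-4o-mini` is rejected, which matches the original `split('/')[1]` behavior.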

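Because the payload sets `stream: true`, the gateway's response body is a stream rather than a single JSON object. Assuming the gateway passes OpenAI's Chat Completions streaming format through unchanged, the body is Server-Sent Events: `data: <json>` lines whose `choices[0].delta.content` carries the next text fragment, ending with `data: [DONE]`. The hypothetical `collectStreamedText` below is a sketch of how a Worker could reassemble the full reply from such a stream.

```typescript
// Sketch: collect assistant text from an OpenAI-style SSE chat completion stream.
// Assumes "data: <json>" lines with choices[0].delta.content, terminated by "data: [DONE]".
async function collectStreamedText(body: ReadableStream<Uint8Array>): Promise<string> {
  const reader = body.getReader();
  const decoder = new TextDecoder();
  let buffered = '';
  let text = '';

  // Handle one complete line; returns true once the [DONE] sentinel is seen.
  const handleLine = (line: string): boolean => {
    const trimmed = line.trim();
    if (!trimmed.startsWith('data:')) return false; // skip blank lines and other SSE fields
    const data = trimmed.slice('data:'.length).trim();
    if (data === '[DONE]') return true;
    const chunk = JSON.parse(data);
    text += chunk.choices?.[0]?.delta?.content ?? ''; // role-only deltas carry no content
    return false;
  };

  while (true) {
    const { done, value } = await reader.read();
    if (done) break;
    buffered += decoder.decode(value, { stream: true });
    // Process only complete lines; keep a trailing partial line for the next chunk.
    const lines = buffered.split('\n');
    buffered = lines.pop() ?? '';
    for (const line of lines) {
      if (handleLine(line)) {
        await reader.cancel();
        return text;
      }
    }
  }
  // Flush any bytes and a final line that had no trailing newline.
  buffered += decoder.decode();
  handleLine(buffered);
  return text;
}
```

If the Worker only needs to relay the stream to its caller, returning `new Response(response.body, response)` avoids parsing entirely; a reader like this is only worth it when the text itself must be inspected or logged.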