Hello, I'm trying to set up Workers AI. I got this: `{"response":"Content-Type invalid"}`. Any thoughts?


…`curl`, and when it works, implement it inside the worker. The example request that works for me sends a `messages` array; if in doubt, ask any chatbot website to format this correctly for you.
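Once the `curl` request works, the same call inside a Worker goes through the AI binding rather than the REST API. Below is a minimal sketch. It assumes the binding is named `AI` in the wrangler config. `AiBinding` is a hypothetical local stand-in for the `Ai` type from `@cloudflare/workers-types`, declared only so the snippet compiles on its own.

```typescript
// Hypothetical stand-in for the `Ai` binding type from @cloudflare/workers-types,
// declared locally only so this sketch is self-contained.
interface AiBinding {
  run(model: string, inputs: Record<string, unknown>): Promise<unknown>;
}

// Assumes the binding is named "AI" in the wrangler configuration.
interface Env {
  AI: AiBinding;
}

type ChatMessage = { role: "system" | "user" | "assistant"; content: string };

const worker = {
  async fetch(_request: Request, env: Env): Promise<Response> {
    // Same model and message shape as the curl example. The binding takes a
    // plain object, so there is no request body to stringify and no
    // Content-Type header to get wrong on this leg.
    const messages: ChatMessage[] = [
      { role: "system", content: "You are a friendly assistant that helps write stories" },
      { role: "user", content: "Write a short story about a llama that goes on a journey to find an orange cloud" },
    ];
    const result = await env.AI.run("@cf/meta/llama-3-8b-instruct", { messages });

    // Return the model output to the caller as JSON.
    return new Response(JSON.stringify(result), {
      headers: { "Content-Type": "application/json" },
    });
  },
};

export default worker;
```

For text-generation models, the generated text is typically found on the `response` property of `result`.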

…#welcome-and-rules. It creates confusion for people trying to help you and doesn't get your issue or question solved any faster.


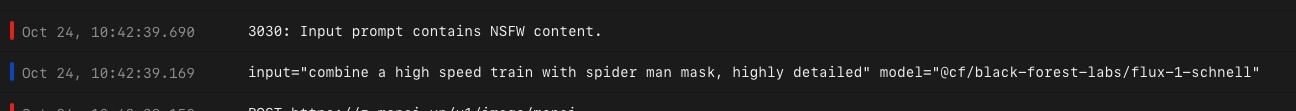

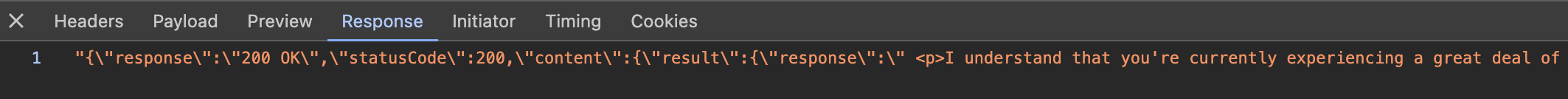

…`response` property), it may not work. LLMs are trained to generate text, not JSON.

`jq` is happy, so any JSON parser should be too.

…`OpenAIEmbeddings` library to send text to my AI worker. What I noticed is that the `embed_documents` method doesn't send the text to the endpoint provided but instead sends an array of tokens. The CF embedding models/methods, on the other hand, expect strings as inputs. Does anyone know how to resolve this? Is there a specific LangChain library for Cloudflare, or is there a way to input tokens into the CF models?

…`withAI` and `useAI` methods that allow us to inject the binding instance into our workers from a core package.

…The `Env` interface was generated by `wrangler types`.

`_env.AI` in this case is of the abstract class `Ai`, as defined in the `workers-types` package.
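Because the model's `response` is free text, asking it for JSON does not guarantee parseable output. Here is a defensive, heuristic sketch. `parseModelJson` is a hypothetical helper, not part of any Cloudflare API. It strips a Markdown code fence if one is present and returns `null` instead of throwing.

```typescript
// Heuristic sketch: LLM output is text, and may wrap JSON in a ```json fence
// or surround it with prose. Returns the parsed value, or null if it is not JSON.
export function parseModelJson(text: string): unknown {
  // Prefer the contents of the first fenced block, if there is one.
  const fenced = text.match(/```(?:json)?\s*([\s\S]*?)```/);
  const candidate = (fenced ? fenced[1] : text).trim();
  try {
    return JSON.parse(candidate);
  } catch {
    // Not valid JSON: let the caller decide how to handle plain text.
    return null;
  }
}
```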
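The `withAI` and `useAI` helpers mentioned above depend on a `useContext` from an internal core package (`@fc/core/context`) that isn't shared in the thread. Below is one possible shape for that context, offered as a hypothetical sketch built on `AsyncLocalStorage`. It assumes the Worker runs with the `nodejs_compat` compatibility flag so that `node:async_hooks` is available. The names `RequestContext`, `withContext`, and `useContext` are illustrative only.

```typescript
import { AsyncLocalStorage } from "node:async_hooks";

// Hypothetical per-request context; the real '@fc/core/context' is not shown.
interface RequestContext {
  requestId: string;
  dependencies: Map<string, unknown>;
}

// One storage instance for the whole Worker; each request gets its own store.
const storage = new AsyncLocalStorage<RequestContext>();

// Runs `fn` inside a fresh context with an empty dependency map.
export function withContext<T>(requestId: string, fn: () => Promise<T>): Promise<T> {
  return storage.run({ requestId, dependencies: new Map<string, unknown>() }, fn);
}

// Returns the current request's context, or undefined outside withContext.
export function useContext(): RequestContext | undefined {
  return storage.getStore();
}
```

With a context of this shape, `withAI` and `useAI` work as written, because `dependencies` is a `Map` and `get('ai') as Ai` is a plain cast. A per-request store also keeps concurrent requests from seeing each other's bindings.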

…`JSON.stringify(inputs).length` needs to be less than 9.5 MB, to be precise. That is, the input to `AI.run` is stringified internally at some point and must not exceed ~10 million bytes, or you'll get "request too large". (cc @Kristian)

…`8b`, but there is no `8b` Llama 3.2 model.

```typescript
import { useContext } from '@fc/core/context';

/**
 * Injects the Ai instance into the application context.
 */
export const withAI = (ai: Ai) => {
  const context = useContext();

  if (!context) {
    throw new Error('Context not found: ensure the AI binding is attached.');
  }

  context.dependencies.set('ai', ai);
};

/**
 * Retrieves the Ai instance from the context.
 * Throws an error if Ai is not found.
 */
export const useAI = (): Ai => {
  const context = useContext();

  if (!context) {
    throw new Error('Context not found: useAI must be called within a Cloudflare Worker.');
  }

  const ai = context.dependencies.get('ai') as Ai;

  if (!ai) {
    throw new Error('AI instance not found in context. Ensure `withAI` is used.');
  }

  return ai;
};
```

```typescript
await withContext(requestId, async () => {
  const env = envSchema.parse(_env);

  // AI
  withAI(_env.AI);
```

```typescript
const cloudflareJobOutput = (await ai.run('@cf/openai/whisper', modelInput)) as WhisperResponse;
```

`undefined: undefined`

```bash
curl -X POST \
  https://api.cloudflare.com/client/v4/accounts/${CLOUDFLARE_ACCOUNT_ID}/ai/run/@cf/meta/llama-3-8b-instruct \
  -H "Authorization: Bearer ${CLOUDFLARE_API_TOKEN}" \
  -d '{
    "messages": [
      {
        "role": "system",
        "content": "You are a friendly assistant that helps write stories"
      },
      {
        "role": "user",
        "content": "Write a short story about a llama that goes on a journey to find an orange cloud "
      }
    ]
  }' \
  | jq
```
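The same REST call can be made from TypeScript, and doing so makes it easy to set `Content-Type: application/json` explicitly and to apply the input-size limit described in this thread before sending. In the sketch below, `buildRunRequest` is a hypothetical helper. The limit is an assumption: it reads "9.5 MB" as 9.5 × 1024 × 1024 characters of `JSON.stringify(inputs).length`. Note that `.length` counts UTF-16 code units, not bytes. If the limit is actually on bytes, `new TextEncoder().encode(body).length` would be the stricter check.

```typescript
// Assumption: "9.5 MB" interpreted as 9.5 MiB worth of stringified characters.
const MAX_INPUT_CHARS = 9.5 * 1024 * 1024;

type RunRequest = {
  url: string;
  init: { method: "POST"; headers: Record<string, string>; body: string };
};

// Builds the fetch() arguments for the Workers AI REST endpoint used in the curl example.
export function buildRunRequest(
  accountId: string,
  apiToken: string,
  model: string,
  inputs: unknown,
): RunRequest {
  // Stringify once: this is both the size check and the request body.
  const body = JSON.stringify(inputs);
  if (body.length >= MAX_INPUT_CHARS) {
    throw new Error(`Input too large: ${body.length} characters`);
  }
  return {
    url: `https://api.cloudflare.com/client/v4/accounts/${accountId}/ai/run/${model}`,
    init: {
      method: "POST",
      headers: {
        Authorization: `Bearer ${apiToken}`,
        // Explicit JSON content type for the request body.
        "Content-Type": "application/json",
      },
      body,
    },
  };
}
```

Usage: `const { url, init } = buildRunRequest(accountId, token, "@cf/meta/llama-3-8b-instruct", { messages }); const res = await fetch(url, init);`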