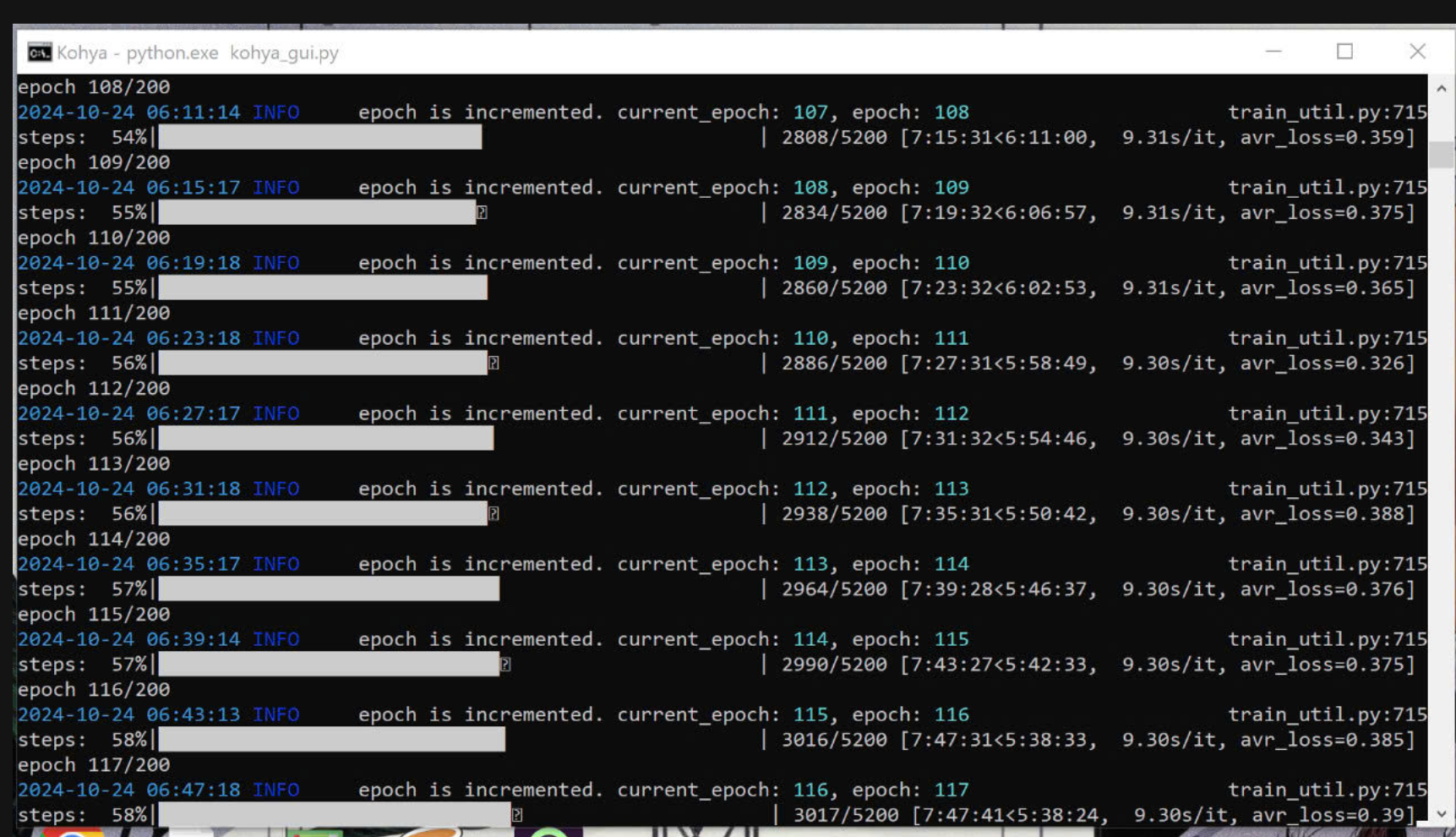

I have a Gigabyte Eagle RTX 3090, not overclocked. Are you running Rank_3_T5_XXL_23500MB_11_35_Second_IT.json to train the LoRA? I had posted 8.94 s/it with Doc's V9, but unfortunately, after some time, the training failed because it ran out of VRAM. Now I'm testing with the original Kohya version and it reaches 10.5 s/it, but its VRAM usage is more stable.
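To put the two speeds in perspective, here is a back-of-the-envelope estimate of total wall-clock time. It assumes the 3000-step run from the log and a constant per-step rate, and it ignores caching, sample generation and checkpoint saving. The helper name `total_hours` is just for illustration:

```python
def total_hours(sec_per_it: float, steps: int) -> float:
    """Estimated wall-clock hours for `steps` iterations at a constant s/it rate."""
    return sec_per_it * steps / 3600

STEPS = 3000  # total steps shown in the progress bar (assumes a constant rate)

for label, rate in [("Doc's V9", 8.94), ("original Kohya", 10.5)]:
    print(f"{label}: {rate} s/it -> {total_hours(rate, STEPS):.2f} h")

# Relative per-step slowdown of the original Kohya run versus V9
slowdown = 10.5 / 8.94 - 1
print(f"original Kohya is about {slowdown:.1%} slower per step")
```

Under those assumptions that is roughly 7.45 h versus 8.75 h, so the original Kohya version adds about 1.3 hours to a 3000-step run, which may be acceptable if it avoids running out of VRAM partway through.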

```
C:\IA\kohya_ss\venv\lib\site-packages\torch\utils\checkpoint.py:295: FutureWarning: torch.cpu.amp.autocast(args...) is deprecated. Please use torch.amp.autocast('cpu', args...) instead.
  with torch.enable_grad(), device_autocast_ctx, torch.cpu.amp.autocast(**ctx.cpu_autocast_kwargs): # type: ignore[attr-defined]
steps: 0%|▎ | 15/3000 [02:36<8:38:56, 10.43s/it, avr_loss=0.416]
epoch 2/200
2024-10-25 23:28:24 INFO epoch is incremented. current_epoch: 1, epoch: 2 train_util.py:715
steps: 1%|▌ | 30/3000 [05:11<8:34:22, 10.39s/it, avr_loss=0.406]
epoch 3/200
2024-10-25 23:31:00 INFO epoch is incremented. current_epoch: 2, epoch: 3 train_util.py:715
steps: 2%|▊ | 45/3000 [07:49<8:33:58, 10.44s/it, avr_loss=0.374]
epoch 4/200
2024-10-25 23:33:38 INFO epoch is incremented. current_epoch: 3, epoch: 4 train_util.py:715
steps: 2%|█ | 60/3000 [10:28<8:32:55, 10.47s/it, avr_loss=0.409]
```
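If you want to compare runs from their logs rather than by eye, the progress lines can be parsed. This is a minimal sketch. It assumes the exact `step/total [elapsed<remaining, X s/it, avr_loss=Y]` layout shown in the log above and will not match lines where the bar switches to `it/s`. The names `parse_progress` and `to_seconds` are hypothetical helpers, not part of Kohya:

```python
import re

# Matches progress lines like the ones in the log, e.g.
# "steps: 2%|█ | 60/3000 [10:28<8:32:55, 10.47s/it, avr_loss=0.409]"
PROGRESS_RE = re.compile(
    r"(?P<step>\d+)/(?P<total>\d+) \[(?P<elapsed>[\d:]+)<(?P<remaining>[\d:]+), "
    r"(?P<rate>[\d.]+)s/it, avr_loss=(?P<loss>[\d.]+)\]"
)

def to_seconds(hms: str) -> int:
    """Convert 'MM:SS' or 'H:MM:SS' to a number of seconds."""
    seconds = 0
    for part in hms.split(":"):
        seconds = seconds * 60 + int(part)
    return seconds

def parse_progress(line: str) -> dict:
    """Extract step counts, timing and average loss from one progress line."""
    m = PROGRESS_RE.search(line)
    if m is None:
        raise ValueError(f"not a progress line: {line!r}")
    return {
        "step": int(m["step"]),
        "total": int(m["total"]),
        "elapsed_s": to_seconds(m["elapsed"]),
        "remaining_s": to_seconds(m["remaining"]),
        "sec_per_it": float(m["rate"]),
        "avr_loss": float(m["loss"]),
    }

line = "steps: 2%|█ | 60/3000 [10:28<8:32:55, 10.47s/it, avr_loss=0.409]"
p = parse_progress(line)
# Naive estimate: remaining steps times the displayed s/it
naive_remaining = (p["total"] - p["step"]) * p["sec_per_it"]
print(p)
print(f"naive remaining: {naive_remaining / 3600:.2f} h, "
      f"bar estimate: {p['remaining_s'] / 3600:.2f} h")
```

For the line at step 60 both estimates come out at about 8.55 h. They differ by only a few seconds, likely because the bar prints its rate rounded to two decimals.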