Wait, so are you using a framework in Vite?

Navigate to Workers > Queues > your Queue. Under the Metrics tab you’ll be able to view line charts describing the number of messages processed by final outcome, the number of messages in the backlog, and other important indicators.

{ targetQueue: "test-runner", data: {} }. Let's say I'm sending data to the "test-runner" queue via a queue manager. For some reason, sometimes (more often than not) my consumer worker doesn't get these messages from the queue, it just stays still. Sometimes I see an Exceeded CPU Time error right after it runs for the first time, and after that I can't get anything back from the consumer worker. Thanks in advance!

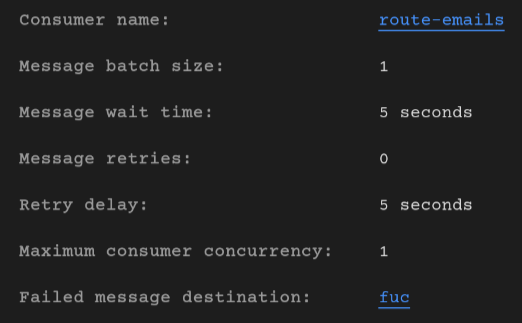

> For some reason, sometimes (more often than not) my consumer worker doesn't get these messages from the queue, it just stays still.

What's likely happening here is that your consumer Worker is taking a long time to process messages and is erroring out.

Exceeded CPU time), they won't auto-scale (see here for details).

batch.queue is the name of the queue that the batch of messages is from:

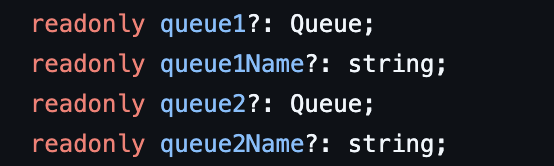
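As a rough sketch of how that property could be used, a single consumer bound to more than one queue might branch on batch.queue. The queue name "emails" and both handler functions below are hypothetical and only there for illustration; "test-runner" is the queue name mentioned earlier in the thread.

```javascript
// Hypothetical per-queue handlers; replace with real processing logic.
async function handleTestRunner(messages) {
  return messages.map((m) => `test-runner:${m.id}`);
}

async function handleEmails(messages) {
  return messages.map((m) => `emails:${m.id}`);
}

// Route a batch to a handler based on the queue it came from.
async function routeBatch(batch) {
  switch (batch.queue) {
    case 'test-runner':
      return handleTestRunner(batch.messages);
    case 'emails': // made-up queue name
      return handleEmails(batch.messages);
    default:
      // Throwing makes the problem visible instead of silently dropping the batch.
      throw new Error(`No handler for queue: ${batch.queue}`);
  }
}

// In a Worker, this object would be the module's default export.
const worker = {
  async queue(batch, env, ctx) {
    await routeBatch(batch);
  },
};
```

Comparing against plain string names keeps the check independent of how the queue bindings are named in the Worker's configuration.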

batch.queue === env.FOO_QUEUE.name ... startsWith ugliness ... send() methods. ... wrangler.toml

Both max_batch_size and max_batch_timeout work together. Whichever limit is reached first will trigger the delivery of a batch.
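To make the "whichever comes first" rule concrete, here is a simplified model of the decision. It is not Cloudflare's implementation: the function name, the camelCase setting names, the assumption that the timeout is measured from the first pending message, and the values 10 and 5 are all illustrative.

```javascript
// Simplified model of batch delivery, for illustration only.
// A batch is delivered as soon as EITHER limit is reached.
function shouldDeliver(pendingCount, secondsSinceFirstMessage, settings) {
  const { maxBatchSize, maxBatchTimeout } = settings;
  if (pendingCount === 0) return false; // nothing to deliver yet
  return (
    pendingCount >= maxBatchSize ||
    secondsSinceFirstMessage >= maxBatchTimeout
  );
}

// Illustrative settings: up to 10 messages, or up to 5 seconds of waiting.
const settings = { maxBatchSize: 10, maxBatchTimeout: 5 };

shouldDeliver(10, 1, settings); // size limit reached first
shouldDeliver(3, 5, settings);  // timeout reached first
shouldDeliver(3, 1, settings);  // neither limit reached, keep waiting
```

In this model a busy queue delivers full batches quickly, while a quiet queue still delivers partial batches once the timeout elapses.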

d1a94a7c4e4a4c23a6dbc74a8abbad11 if it helps

max_concurrency on 1 but if the 10 messages in the batch complete faster than in 1 second then it doesn't work

await wait(1000) to wait a second
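One way to read the suggestion above is to pad each batch so that handling it always takes at least one second. The sketch below assumes that reading. The wait helper is not defined anywhere in the thread, so its definition here is a guess at what was meant, and withMinimumDuration is a hypothetical name.

```javascript
// Assumed definition of the `wait` helper: resolves after `ms` milliseconds.
const wait = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Run `work`, then sleep for whatever is left of `minMs`,
// so the whole call takes at least `minMs` of wall-clock time.
async function withMinimumDuration(work, minMs) {
  const started = Date.now();
  const result = await work();
  const remaining = minMs - (Date.now() - started);
  if (remaining > 0) await wait(remaining);
  return result;
}

// In a Worker, this object would be the module's default export.
const worker = {
  async queue(batch) {
    await withMinimumDuration(async () => {
      for (const message of batch.messages) {
        // ... process message.body here ...
        message.ack();
      }
    }, 1000);
  },
};
```

This only paces delivery if a single consumer invocation runs at a time, which is what setting max_concurrency to 1 is meant to ensure.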

export default {
  async queue(batch) {
    const ntfyUrl = 'https://ntfy.sh/doofy';
    // Only the first message in the batch is handled here.
    const message = batch.messages[0];
    if (message) {
      try {
        // Forward the message body to the ntfy topic.
        const response = await fetch(ntfyUrl, {
          method: 'POST',
          body: message.body,
        });
        if (response.ok) message.ack();
      } catch (error) {
        console.error(`Failed to process message: ${error}`);
      }
    }
  },
};
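The handler above only looks at batch.messages[0]. A variant that walks the whole batch and explicitly acknowledges or retries each message might look like the sketch below. makeQueueHandler, deliver, and postToNtfy are hypothetical names, introduced so the logic can be exercised without a network call; message.ack() and message.retry() are the per-message methods on Queues messages. Whether failed deliveries should be retried is an assumption about the intent here.

```javascript
// Build a queue handler around a `deliver(body)` function that returns
// a Response-like object with an `ok` flag.
function makeQueueHandler(deliver) {
  return {
    async queue(batch) {
      for (const message of batch.messages) {
        try {
          const response = await deliver(message.body);
          if (response.ok) {
            message.ack(); // delivered, do not redeliver
          } else {
            message.retry(); // non-2xx response, ask for redelivery
          }
        } catch (error) {
          console.error(`Failed to process message: ${error}`);
          message.retry(); // network or runtime failure
        }
      }
    },
  };
}

// Delivery function equivalent to the POST in the original handler.
const postToNtfy = (body) =>
  fetch('https://ntfy.sh/doofy', { method: 'POST', body });

// In a Worker, this object would be the module's default export.
const worker = makeQueueHandler(postToNtfy);
```

Handling each message individually means one failed delivery does not decide the fate of the rest of the batch.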