Hello Furkan, everyone!

I'm trying to get my head around the structure of the Patreon and the tutorials, and I'm having a hard time seeing where to start. I'd appreciate a pointer to a 101 or a tutorial.

My previous workflow was: Stable Diffusion 1.5 plus some public CivitAI models, fine-tuned on my own photography dataset with poses, lighting and bokeh (150-200 images), gave a new checkpoint. DreamBooth fine-tuning that checkpoint on a new person (50-100 images) then gave a second checkpoint that let me create portraits of people in my own recognizable photography style (Rembrandt lighting, bokeh, an ethereal feeling). I used the https://github.com/TheLastBen/fast-stable-diffusion notebook on Colab for both trainings (SD15/CivitAI + MyStyle-XYZ.jpg x 200 images, then Person-XYZ x 100 images). Sample results are attached; they look so much like real people that even the parents can't tell whether they were made with AI. With some experimenting I managed to reach 768 and 896 pixel resolutions, all with Automatic1111.

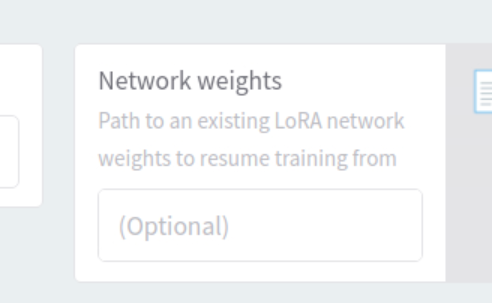

What I want now is to move this to SDXL at 1280 pixels and try out SDXL and CivitAI checkpoints. I would have 150-200 images at 1024-1280 px to train my style, and then 50-100 images of a person, to generate images like "beautiful personXYZ as styleXYZ ...". I can't find a reliable notebook (Colab or RunPod; paid is fine) with clear instructions. I have a solid development background and a PhD in CS, but in a somewhat different area, so I struggle to get to the point where I have working code to experiment with. For example: I found configs, but I don't know how to set up the environment; I deployed OneTrainer, but it won't accept a .safetensors model; and I managed to adapt fast-stable-diffusion to accept SDXL, but it fails to work with Google Drive.

Any clues, hints or pointers I could use? Thank you so much in advance!

Cheers, Alex