
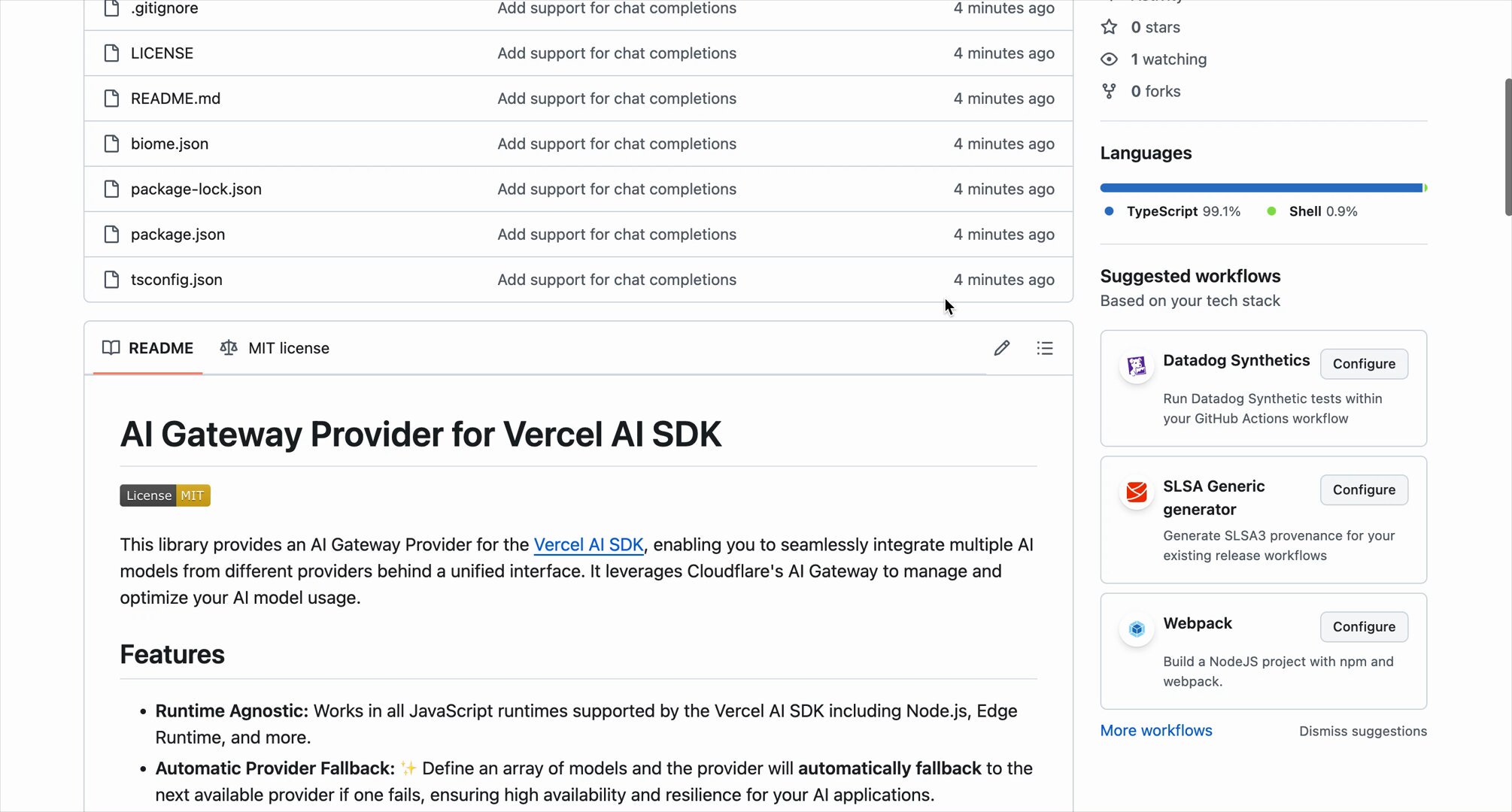

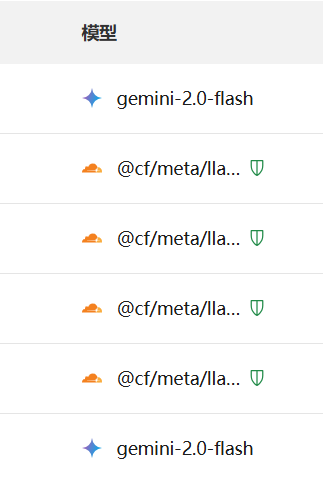

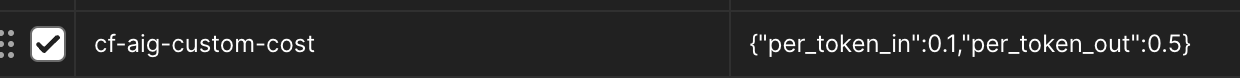

I have a product idea: an AI Router. It's similar to AI Gateway, but it would let me define custom routes, each specifying the target LLM and settings like temperature, max_tokens, etc. I would then route each LLM call from my app to the custom AI Router endpoint, sending only the prompts (an optional system prompt and the user prompt). Everything else, like which LLM to use and the request settings, would be defined at the AI Router level. With one setting, I could turn on AI Gateway for the router.
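To make the idea a bit more concrete, here's a rough sketch of what a route definition and the request assembly might look like. Everything in it is an assumption for illustration: the `Route` fields, the `ROUTES` table, the route names, the placeholder model names, and `build_request` are all hypothetical, not an existing API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Route:
    """Hypothetical route definition that lives in the router, not in the app."""
    model: str
    temperature: float = 0.7
    max_tokens: int = 512
    use_gateway: bool = False  # the single switch that turns on AI Gateway


# Hypothetical route table; route names and model names are placeholders.
ROUTES = {
    "summarize": Route(model="model-a", temperature=0.2, max_tokens=256),
    "chat": Route(model="model-b", use_gateway=True),
}


def build_request(route_name: str, user_prompt: str,
                  system_prompt: Optional[str] = None) -> dict:
    """Combine the prompts sent by the app with the settings owned by the router."""
    route = ROUTES[route_name]
    messages = []
    if system_prompt is not None:  # the system prompt is optional
        messages.append({"role": "system", "content": system_prompt})
    messages.append({"role": "user", "content": user_prompt})
    return {
        "model": route.model,
        "temperature": route.temperature,
        "max_tokens": route.max_tokens,
        "use_gateway": route.use_gateway,
        "messages": messages,
    }
```

The app would only ever call something like `build_request("summarize", "Summarize this text.")`. Switching models would mean editing the `ROUTES` entry, with no change to app code.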

This would let me adapt quickly to the fast-paced landscape of LLMs. I could reconfigure an app's functionality to use a different LLM and even run quick tests to validate the outputs.
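As a rough illustration of the "run tests to validate outputs" part, here's a minimal sketch that runs the same prompts against the current model and a candidate model and collects the outputs side by side. The names `compare_models`, `ModelCall`, and `fake_call` are hypothetical, and `fake_call` is just a stand-in so the sketch runs without a real provider.

```python
from typing import Callable, Dict, List

# Hypothetical signature: takes (model name, prompt) and returns the model's text.
ModelCall = Callable[[str, str], str]


def compare_models(prompts: List[str], current: str, candidate: str,
                   call_model: ModelCall) -> List[Dict[str, str]]:
    """Run each prompt against both models and collect the outputs side by side."""
    rows = []
    for prompt in prompts:
        rows.append({
            "prompt": prompt,
            "current_output": call_model(current, prompt),
            "candidate_output": call_model(candidate, prompt),
        })
    return rows


def fake_call(model: str, prompt: str) -> str:
    """Stand-in for a real provider call so the sketch runs offline."""
    return f"[{model}] {prompt.upper()}"


if __name__ == "__main__":
    for row in compare_models(["hello"], "model-a", "model-b", fake_call):
        print(row)
```

In a real router, `call_model` would be whatever function actually sends the request to the provider; the comparison loop itself would stay the same.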

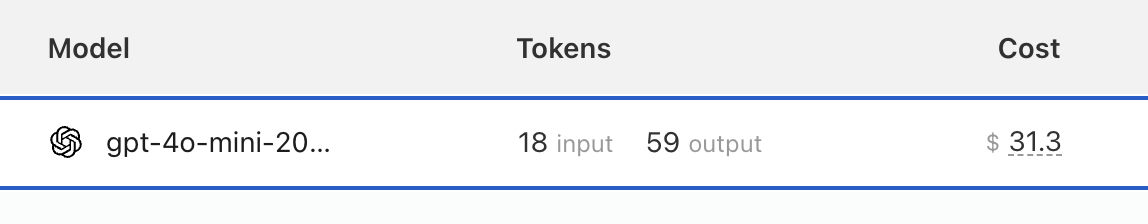

I'm wasting a lot of time updating and refactoring app code to keep up with new LLMs. Each time, I need to:


- Find the code that calls the LLM API, then try to remember what it does and understand it.

- Refactor the code to use a different model, because I found a better or cheaper one, or because the one I'm using will no longer be available.

- Test in staging, then test in production.