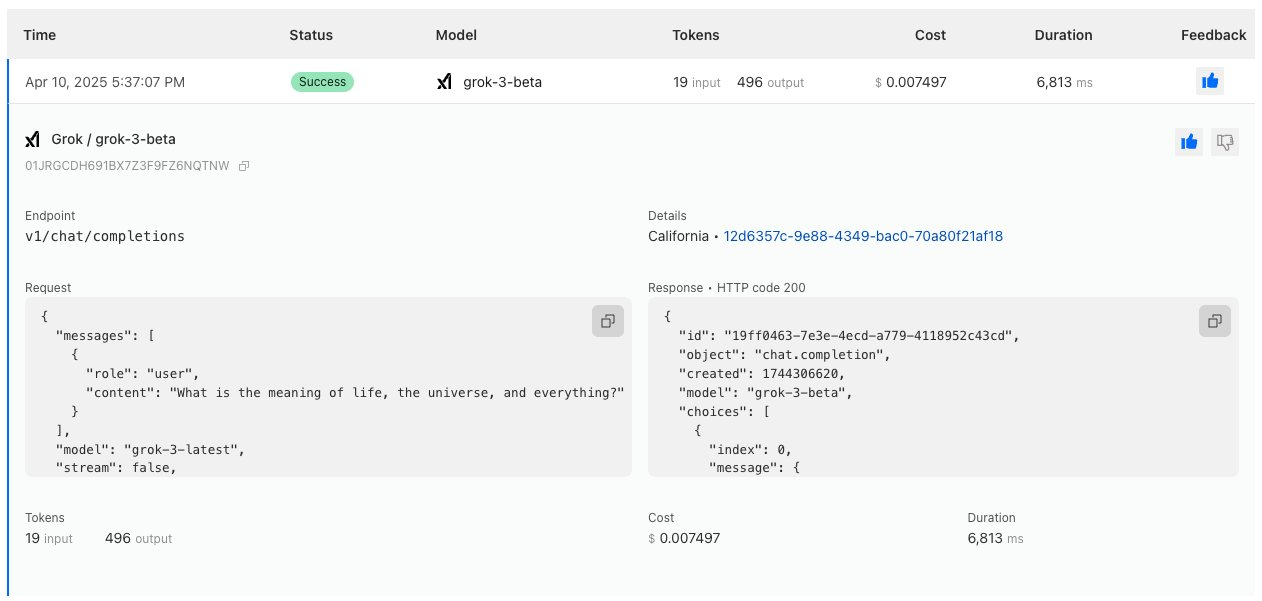

Where is this playground? The one I got doesn't have Grok in it....


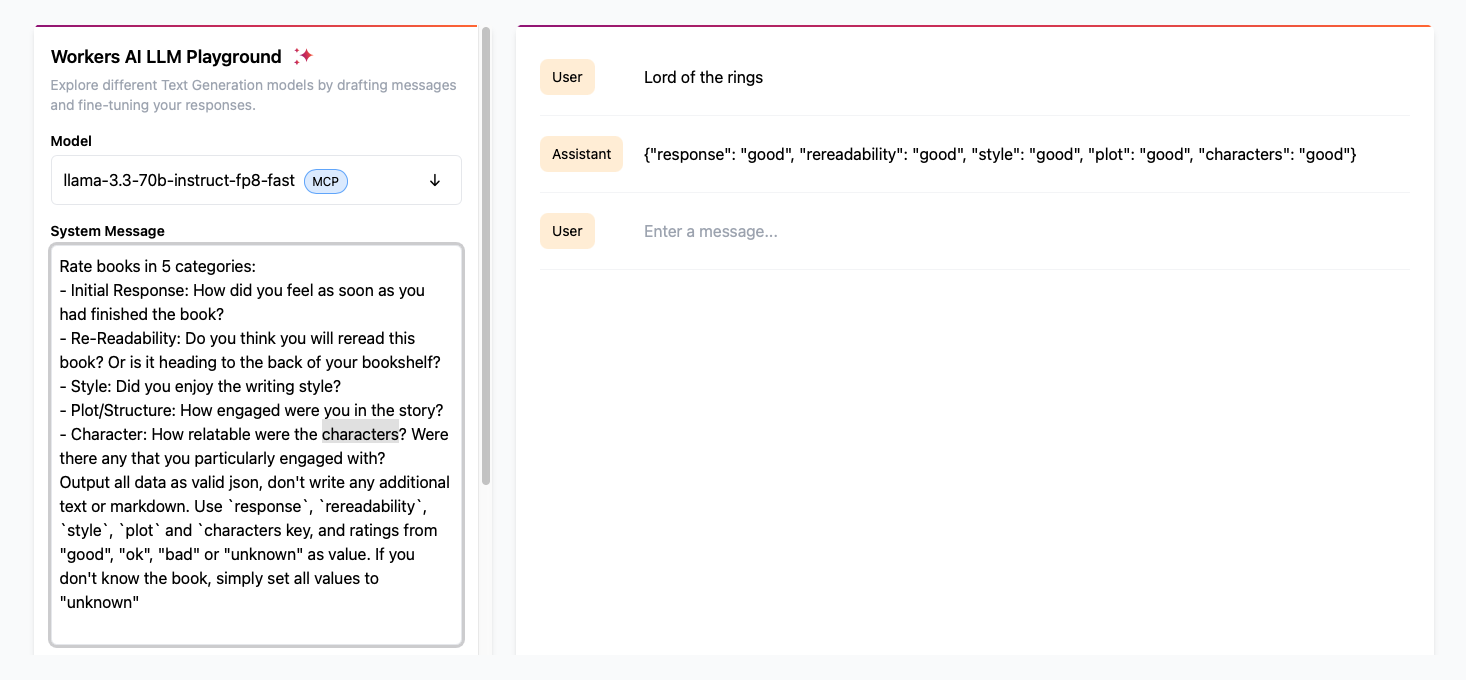

`assistant` message.

`cloudflare/ai-utils` making this simpler, but I'm struggling with that one right now (see my thread from Sunday)

`gateway` (as shown in the AI Gateway docs: https://developers.cloudflare.com/ai-gateway/providers/workersai/#workers-binding)

`runWithTools` are very composable... or am I missing something?

`@cf/myshell-ai/melotts` does not support Chinese, which the original model does support.

`{ image: string }` Is there a way to have it output the usage data, similar to the `{ usage: { prompt_tokens: number, completion_tokens: number, total_tokens: number }}` output for text generation models? Based on the pricing, I'd assume it would be something like `{ usage: { tiles: number, steps: number }}`? Or does this just need to be calculated on each usage on our end, using the default step count of 4 if none is provided as input, and measuring the output image size?
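If the usage does have to be calculated on our end, a minimal sketch could look like the one below. It is only an illustration of the idea in the question: `estimateImageUsage`, the `{ tiles, steps }` shape, and the 512 px tile size are assumptions, not something Workers AI is known to return, so check the tile size against the pricing page. Width and height are passed in directly; reading them from the returned image is left out.

```javascript
// Hypothetical helper, not part of Workers AI.
// ASSUMPTION: billing counts square tiles of 512x512 pixels; verify on the pricing page.
const TILE_SIZE = 512;
// Default step count mentioned in the question above.
const DEFAULT_STEPS = 4;

/**
 * Estimate usage for one generated image.
 * @param {number} width  output image width in pixels
 * @param {number} height output image height in pixels
 * @param {number} [steps] diffusion steps passed as input, if any
 * @returns {{ tiles: number, steps: number }}
 */
function estimateImageUsage(width, height, steps = DEFAULT_STEPS) {
  if (!(width > 0) || !(height > 0) || !(steps > 0)) {
    throw new RangeError("width, height and steps must be positive numbers");
  }
  // A partially covered tile is counted as a full tile.
  const tiles = Math.ceil(width / TILE_SIZE) * Math.ceil(height / TILE_SIZE);
  return { tiles, steps };
}
```

Calling `estimateImageUsage(1024, 1024)` under these assumptions gives four tiles at the default four steps.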

`401 Unauthorized` errors in my logs indicate that authentication for Workers AI is failing, despite the correct binding. This suggests issues with account permissions, missing API tokens, or a misconfiguration in the Workers AI setup.

`whisper turbo large v3`

in the `vars` section of my `wrangler.toml` file?

`WORKERS_AI_TOKEN = "gdhd"`. I suppose I need to add the Workers AI API token too?

`this` there?

`wrangler.toml` defines `AUDIO_PROCESSOR` as a Durable Object, and the code uses `this.env.AI`,

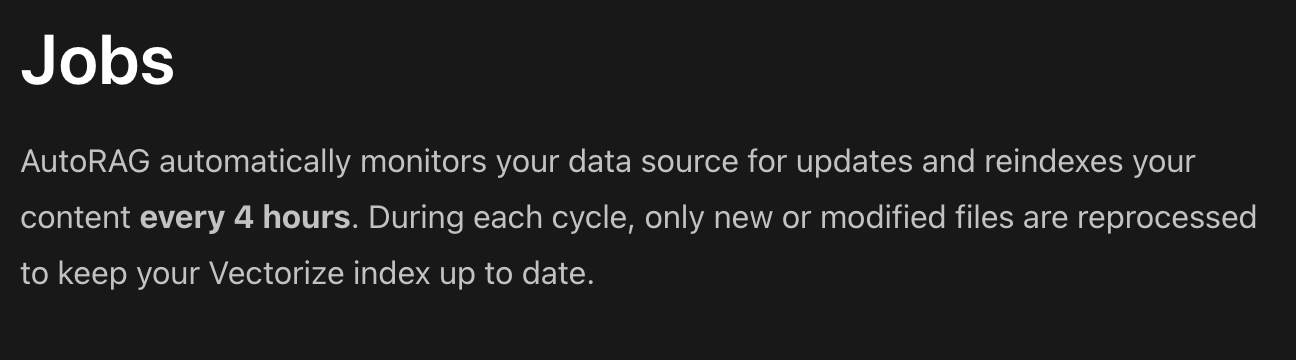

`env.AI.run(…)`, and I get an authentication error in the console and it opens my default web browser to the Cloudflare dashboard's grant permissions UI (or sometimes crashes the process and I just run `wrangler login` manually to do it). Is this normal and expected?

`Capacity error, will retry: 3040: Capacity temporarily exceeded, please try again`

`ai-utils`. I spent a lot of time troubleshooting it: it tracks token usage wrong (returns only the last ones), calls `AI.run` more often than necessary, etc. See #Is module @cloudflare/ai-utils stable? I just wrote the function calling in my own code, works way better, and doesn't inflict double usage consumption. And I'd recommend you do so too, tbh.
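For the `3040` capacity error quoted above, one option is to retry the call with a short backoff in your own code. The sketch below is a generic wrapper, not a Workers AI feature: `withCapacityRetry` is a made-up name, and matching on the text `3040` in the error message is an assumption based only on the log line above.

```javascript
/**
 * Retry an async call when it fails with a capacity error.
 * ASSUMPTION: capacity errors can be recognised by "3040" in the error message.
 * @param {() => Promise<any>} call      the operation to run, e.g. () => env.AI.run(model, input)
 * @param {number} [maxAttempts]          total attempts, including the first one
 * @param {number} [baseDelayMs]          delay before the first retry; doubles on each retry
 */
async function withCapacityRetry(call, maxAttempts = 3, baseDelayMs = 250) {
  let lastError;
  for (let attempt = 0; attempt < maxAttempts; attempt++) {
    try {
      return await call();
    } catch (err) {
      const message = err instanceof Error ? err.message : String(err);
      // Anything that is not a capacity error is rethrown immediately.
      if (!message.includes("3040")) throw err;
      lastError = err;
      if (attempt < maxAttempts - 1) {
        const delay = baseDelayMs * 2 ** attempt;
        await new Promise((resolve) => setTimeout(resolve, delay));
      }
    }
  }
  // All attempts hit the capacity error.
  throw lastError;
}
```

Usage would be something like `await withCapacityRetry(() => env.AI.run(model, input))`. Other errors, such as the 401 discussed earlier, are not retried.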

[ai]

binding = "AI"

try {

console.log(`[BYPASS] Attempt 3: Using AI binding with raw bytes`);

const aiTranscription = await this.env.AI.run('@cf/openai/whisper-large-v3-turbo', {

audio: [...audioBytes], // Convert Uint8Array to regular array

language: 'en',

});

try {

console.log(`[BYPASS] Attempt 2: Using AI binding with base64`);

const aiTranscription = await this.env.AI.run('@cf/openai/whisper-large-v3-turbo', {

audio: base64Audio,

language: 'en',

});
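The two attempts above differ only in how the audio is encoded (a plain number array versus `base64Audio`). If the model's input schema expects a base64 string, which is worth confirming on the model page, the bytes can be encoded as in this sketch. `bytesToBase64` is a hypothetical helper name, and nothing here is claimed to fix the 401 error.

```javascript
/**
 * Encode raw bytes as a base64 string.
 * Builds an intermediate "binary string" byte by byte, then uses the
 * global btoa (available in Workers and in recent Node versions).
 * @param {Uint8Array} bytes
 * @returns {string}
 */
function bytesToBase64(bytes) {
  let binary = "";
  for (let i = 0; i < bytes.length; i++) {
    binary += String.fromCharCode(bytes[i]);
  }
  return btoa(binary);
}
```

The result could then be passed as `audio: bytesToBase64(audioBytes)`, assuming `audioBytes` is the `Uint8Array` from the snippet above.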

# Define the Durable Objects

[[durable_objects.bindings]]

name = "AUDIO_PROCESSOR"

class_name = "AudioProcessor"

# External DO binding for LLM worker

[[durable_objects.bindings]]

name = "LLM_PROCESSOR"

class_name = "LlmProcessor"

script_name = "llm"

# Add queue consumers with explicit handler functions

[[queues.consumers]]

[vars]

# Define the Durable Object class

[[migrations]]

tag = "v1"

new_classes = ["AudioProcessor"]

[[kv_namespaces]]

[[d1_databases]]

[[queues.consumers]]

[[queues.producers]]

[ai]

binding = "AI"

[observability]

enabled = true

head_sampling_rate = 1

export class AudioProcessor {

constructor(state, env) {

this.state = state;

this.env = env;

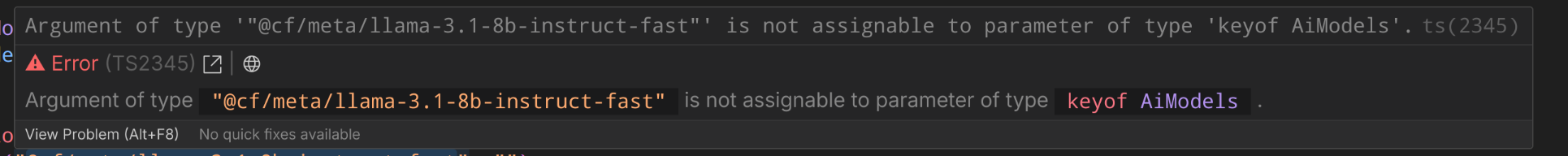

}

import { runWithTools } from '@cloudflare/ai-utils';

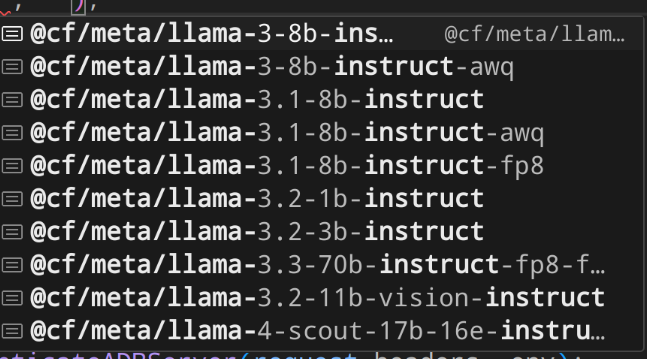

const model = '@cf/meta/llama-3.3-70b-instruct-fp8-fast';

const response = await runWithTools(

env.AI,

model,

{

messages: chatmessages,

tools: function_tools,

max_tokens: 8192,

temperature: 0.6,

},

{ strictValidation: true, maxRecursiveToolRuns: 1, verbose: true, streamFinalResponse: true }

);
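The message above recommends hand-writing the function-calling loop instead of using `runWithTools`. A rough sketch of that idea follows; it is not the poster's actual code. It assumes the binding's result has the shape `{ response, tool_calls: [{ name, arguments }], usage }` and that tool results are fed back as `role: "tool"` messages. `runToolsManually` and `handlers` are hypothetical names.

```javascript
/**
 * Hand-rolled tool-calling loop that sums usage across every model call.
 * ASSUMPTIONS: result shape { response, tool_calls, usage } and the
 * { role: "tool", name, content } message format for tool results.
 * @param {{ run: (model: string, input: object) => Promise<any> }} ai  e.g. env.AI
 * @param {string} model
 * @param {Array<object>} messages   chat history so far
 * @param {Array<object>} tools      tool definitions sent to the model
 * @param {Object<string, Function>} handlers  tool name -> implementation
 * @param {number} [maxToolRounds]   rounds in which the model may call tools
 */
async function runToolsManually(ai, model, messages, tools, handlers, maxToolRounds = 1) {
  const history = [...messages];
  const usage = { prompt_tokens: 0, completion_tokens: 0, total_tokens: 0 };
  let calls = 0;

  for (let round = 0; round <= maxToolRounds; round++) {
    // In the final round the tools are withheld so the model has to answer in text.
    const offerTools = round < maxToolRounds;
    const input = offerTools ? { messages: history, tools } : { messages: history };
    const result = await ai.run(model, input);
    calls++;

    // Accumulate usage from every call, not only the last one.
    if (result.usage) {
      usage.prompt_tokens += result.usage.prompt_tokens || 0;
      usage.completion_tokens += result.usage.completion_tokens || 0;
      usage.total_tokens += result.usage.total_tokens || 0;
    }

    const toolCalls = result.tool_calls || [];
    if (!offerTools || toolCalls.length === 0) {
      return { response: result.response || "", usage, calls };
    }

    for (const call of toolCalls) {
      const handler = handlers[call.name];
      const output = handler
        ? await handler(call.arguments)
        : { error: `unknown tool: ${call.name}` };
      history.push({ role: "tool", name: call.name, content: JSON.stringify(output) });
    }
  }
  throw new Error("unreachable: the final round always returns");
}
```

With `maxToolRounds = 1` this makes at most two calls to `ai.run`: one that may request tools and one that produces the final answer. The returned `usage` covers both.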