
https://aws.amazon.com/service-terms/ Section 82.3 specifically says you cannot cache most of the data.

```js
import S3 from 'aws-sdk/clients/s3.js';
```

Use `region: 'auto'` (rather than an AWS region such as `us-east-1`) and set the `endpoint` to `https://${ACCOUNT_ID}.r2.cloudflarestorage.com`.

Would/should I use the S3 API for that?

Yeah.
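As a rough sketch of the settings mentioned above, the client options for R2 can be built from just the account ID and an access key pair. The helper name `r2ClientConfig` is hypothetical, and the shape of the returned object assumes the AWS SDK v3 `S3Client` constructor options (`region`, `endpoint`, `credentials`).

```javascript
// Hypothetical helper: build S3Client options that point at Cloudflare R2.
// Assumption: accountId is your Cloudflare account ID and the key pair
// comes from an R2 API token.
function r2ClientConfig({ accountId, accessKeyId, secretAccessKey }) {
  if (!accountId || !accessKeyId || !secretAccessKey) {
    throw new Error('accountId, accessKeyId and secretAccessKey are all required');
  }
  return {
    // R2 has no AWS-style regions; "auto" is the value used in R2's examples.
    region: 'auto',
    // Each account has its own S3-compatible endpoint.
    endpoint: `https://${accountId}.r2.cloudflarestorage.com`,
    credentials: { accessKeyId, secretAccessKey },
  };
}

// Usage (AWS SDK v3):
// const client = new S3Client(r2ClientConfig({ accountId, accessKeyId, secretAccessKey }));
```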

Pre-signed URLs are now implemented!

The following features are currently on the roadmap for R2 but not yet implemented:

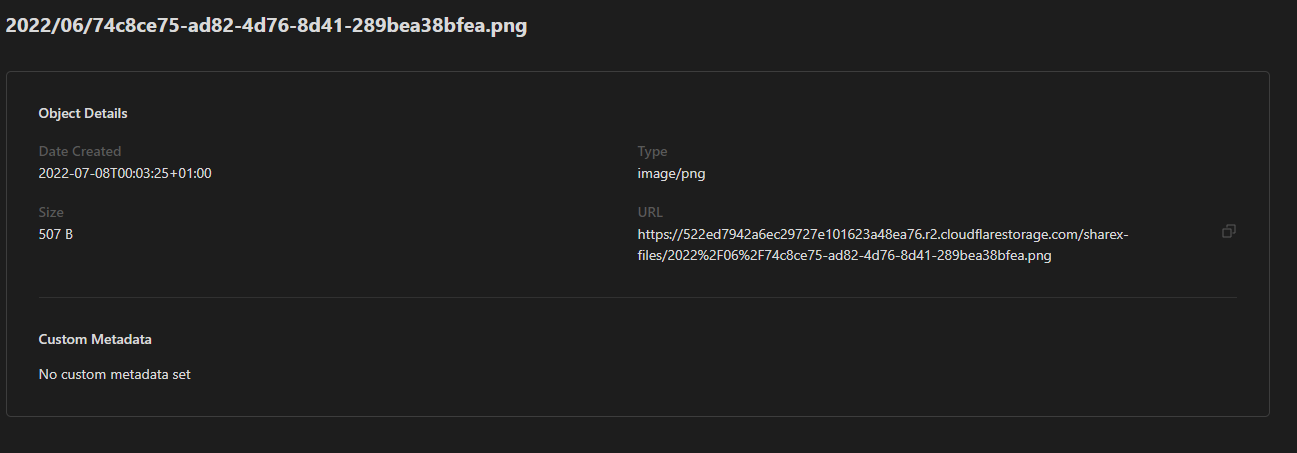

- Public Buckets

- Mapping a bucket to your custom domain

In the meantime, you can use a Worker to replicate most of this functionality, such as serving and caching public assets.

Take a look at the Demo Worker example here: https://developers.cloudflare.com/r2/examples/demo-worker/
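A minimal sketch of the Worker approach, written in the spirit of the Demo Worker rather than copied from it: the URL path is used as the object key and the object is streamed back with its stored HTTP metadata. It assumes the Workers R2 binding API (`bucket.get()`, `writeHttpMetadata()`, `httpEtag`); the binding name `MY_BUCKET` and the one-hour `cache-control` default are hypothetical choices. The bucket is passed in explicitly so the handler can be exercised outside a Worker.

```javascript
// Serve objects from an R2 bucket, read-only, keyed by URL path.
async function handleRequest(request, bucket) {
  // Only allow reads.
  if (request.method !== 'GET' && request.method !== 'HEAD') {
    return new Response('Method Not Allowed', {
      status: 405,
      headers: { allow: 'GET, HEAD' },
    });
  }

  // "/images/a.png" -> "images/a.png"; malformed escapes are rejected.
  let key;
  try {
    key = decodeURIComponent(new URL(request.url).pathname.slice(1));
  } catch {
    return new Response('Bad Request', { status: 400 });
  }
  if (key === '') {
    return new Response('Not Found', { status: 404 });
  }

  const object = await bucket.get(key);
  if (object === null) {
    return new Response('Not Found', { status: 404 });
  }

  // Copy stored HTTP metadata (e.g. Content-Type) onto the response.
  const headers = new Headers();
  object.writeHttpMetadata(headers);
  headers.set('etag', object.httpEtag);
  // Assumption: if no Cache-Control was stored, cache publicly for an hour.
  if (!headers.has('cache-control')) {
    headers.set('cache-control', 'public, max-age=3600');
  }

  // HEAD responses carry the headers but no body.
  return new Response(request.method === 'HEAD' ? null : object.body, { headers });
}

// In a Worker module this would be wired up roughly as:
// export default { fetch: (request, env) => handleRequest(request, env.MY_BUCKET) };
```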

Also, there's a great community project called Render that's made for R2:

https://github.com/kotx/render

Workers will not be required for making assets publicly available in the future; they are just an interim stop-gap.

import {S3Client} from "@aws-sdk/client-s3"

import {GetObjectCommand} from "@aws-sdk/client-s3"

const ACCOUNT_ID = 'x'

const ACCESS_KEY_ID = 'x'

const SECRET_ACCESS_KEY = 'x'

const S3 = new S3Client({

region: "auto",

endpoint: `https://${ACCOUNT_ID}.r2.cloudflarestorage.com`,

credentials: {

accessKeyId: ACCESS_KEY_ID,

secretAccessKey: SECRET_ACCESS_KEY,

}

});

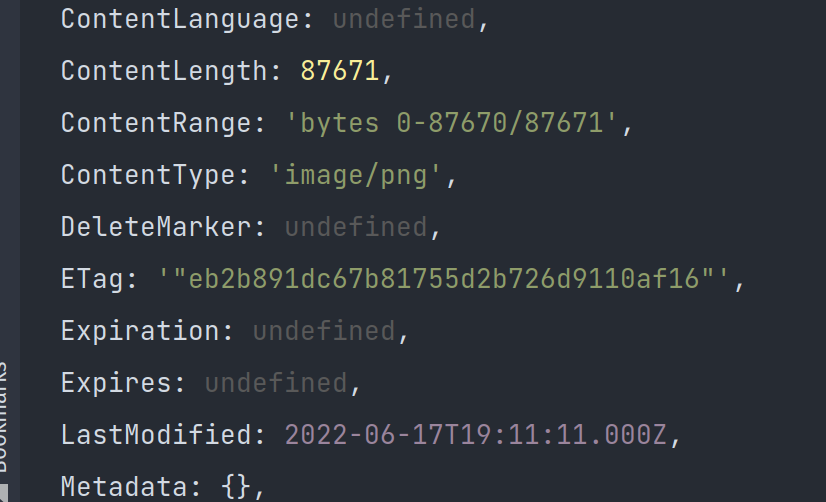

console.log(

await S3.send(new GetObjectCommand({

Bucket: "sdk-example",

Key: "ferriswasm.png"

}))

)
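The `console.log` in the `GetObjectCommand` example prints the whole response object, not the file's contents. In Node.js the response `Body` is a readable stream, so one way to get the bytes is to collect its chunks. The helper below is a minimal, hypothetical sketch that works on any async-iterable stream; recent SDK v3 versions also offer `Body.transformToByteArray()` for the same purpose.

```javascript
// Hypothetical helper: collect every chunk of an async-iterable stream
// (such as a Node.js Readable) into a single Buffer.
async function streamToBuffer(stream) {
  const chunks = [];
  for await (const chunk of stream) {
    // Chunks may be Buffers, Uint8Arrays or strings; normalize to Buffer.
    chunks.push(Buffer.from(chunk));
  }
  return Buffer.concat(chunks);
}

// Usage with the client above (inside an ES module):
// const { Body } = await S3.send(new GetObjectCommand({ Bucket: "sdk-example", Key: "ferriswasm.png" }));
// const bytes = await streamToBuffer(Body);
```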