Not yet. Internally we use v2 for all our tests so that's why we have v2 examples everywhere. We're happy to take contributions to add v3 examples.

`import S3 from 'aws-sdk/clients/s3.js';` from my understanding.

`region: 'auto'` and even more the `endpoint: https://${ACCOUNT_ID}.r2.cloudflarestorage.com` parts

`us-east-1` as aliases to `auto` but for completeness, it's best to use `auto`

`endpoint` is necessary, right? Without it I had an error telling me the account was wrong, or something of the sort.

would/should I use the S3 API for that?

Yeah
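To make the `region` and `endpoint` discussion concrete, here is a minimal sketch of a helper that builds the client options. The function name `r2ClientOptions` and the placeholder values are hypothetical, not part of any SDK; the object shape simply mirrors the options passed to `S3Client` in the v3 example in this thread.

```javascript
// Hypothetical helper (not part of any SDK): builds the options object
// for an S3-compatible client pointed at R2.
function r2ClientOptions(accountId, accessKeyId, secretAccessKey) {
  return {
    // The thread recommends "auto" for R2.
    region: "auto",
    // The endpoint is account-specific; omitting it sends requests to AWS.
    endpoint: `https://${accountId}.r2.cloudflarestorage.com`,
    credentials: { accessKeyId, secretAccessKey },
  };
}

// Placeholder values for illustration only.
const options = r2ClientOptions("my-account-id", "x", "x");
console.log(options.endpoint);
```

The returned object could then be passed to the client constructor, for example `new S3Client(options)` with the v3 SDK.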

The following features are currently on the roadmap for R2 but not yet implemented. (Pre-signed URLs are now implemented!)

- Public Buckets

- Mapping a bucket to your custom domain

In the meantime, you can use a Worker to replicate the vast majority of functionality such as serving & caching public assets.

Take a look at the Demo Worker example here: https://developers.cloudflare.com/r2/examples/demo-worker/

Also, there's a great community project called Render that's made for R2:

https://github.com/kotx/render

Workers will not be required for making assets publicly available in the future; they are just an interim stop-gap.
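As a rough illustration of the Worker approach, here is a minimal sketch that serves objects from an R2 bucket binding. It is not the linked Demo Worker: the binding name `MY_BUCKET` and the helper `keyFromUrl` are assumptions made for this example, and caching is left out to keep it short.

```javascript
// `MY_BUCKET` is a hypothetical R2 binding name; use whatever name
// you configured for your own Worker.

// Turn a request URL like "https://host/images/cat.png" into the
// object key "images/cat.png".
function keyFromUrl(url) {
  return decodeURIComponent(new URL(url).pathname.slice(1));
}

const worker = {
  async fetch(request, env) {
    // Read-only: reject anything other than GET.
    if (request.method !== "GET") {
      return new Response("Method Not Allowed", {
        status: 405,
        headers: { Allow: "GET" },
      });
    }

    // get() resolves to null when the key does not exist.
    const object = await env.MY_BUCKET.get(keyFromUrl(request.url));
    if (object === null) {
      return new Response("Object Not Found", { status: 404 });
    }

    const headers = new Headers();
    object.writeHttpMetadata(headers); // copies stored metadata such as Content-Type
    headers.set("etag", object.httpEtag);
    return new Response(object.body, { headers });
  },
};

// In a real Worker module you would finish with: export default worker;
```

Restricting the handler to `GET` keeps the bucket read-only from the public side; uploads would still go through the S3 API or a separately authenticated route.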

x-amz-content-sha256 header is supported though (it’s part of the signature), so you can use that instead.import S3 from 'aws-sdk/clients/s3.js';region: 'auto'https://${ACCOUNT_ID}.r2.cloudflarestorage.com\us-east-1autoautoendpointx-amz-content-sha256import {S3Client} from "@aws-sdk/client-s3"

import {GetObjectCommand} from "@aws-sdk/client-s3"

const ACCOUNT_ID = 'x'

const ACCESS_KEY_ID = 'x'

const SECRET_ACCESS_KEY = 'x'

const S3 = new S3Client({

region: "auto",

endpoint: `https://${ACCOUNT_ID}.r2.cloudflarestorage.com`,

credentials: {

accessKeyId: ACCESS_KEY_ID,

secretAccessKey: SECRET_ACCESS_KEY,

}

});

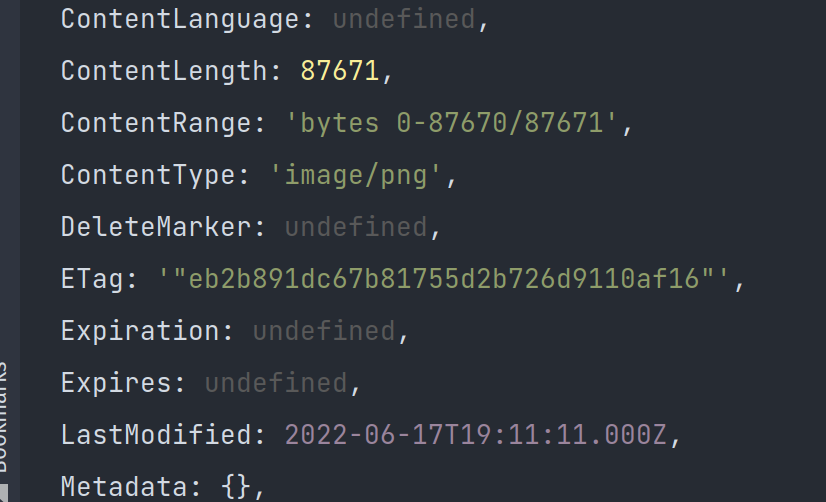

console.log(

await S3.send(new GetObjectCommand({

Bucket: "sdk-example",

Key: "ferriswasm.png"

}))

)