Firecrawl

Join builders, developers, and users turning any website into LLM-ready data, so that AI applications can be powered with clean, structured information crawled from the web.

🔥 Challenge: Website requires 2FA to disconnect sessions + 3 session limit - is this solvable?

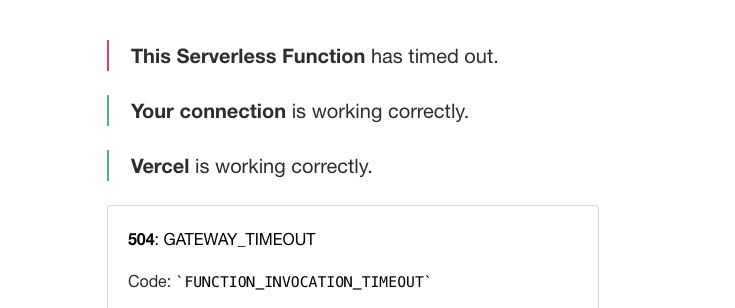

App keeps crashing, serverless function has timed out

Crawl does not work for a specific website, but scrape does

I'm trying to use it in TRAE and I'm getting this error, what could it be?

Crawl endpoint retrieving wrong sourceURL

Scraping site for logged-in users

Getting Empty Response with Extract Node

JSON schema not working like earlier

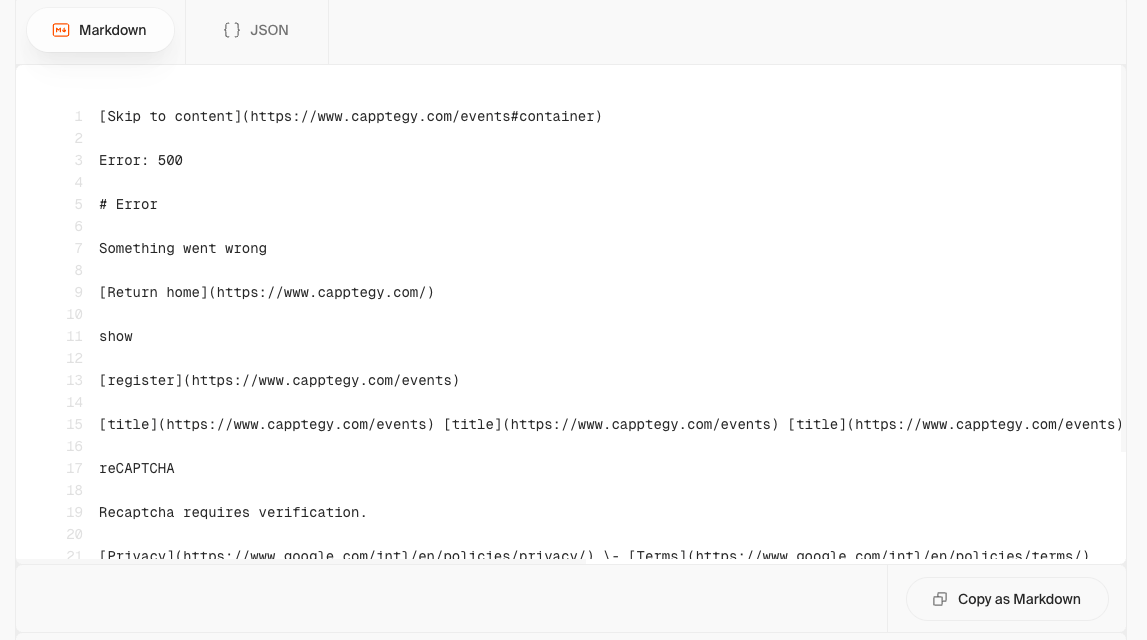

Getting intermittent error page from sites ONLY when using Firecrawl

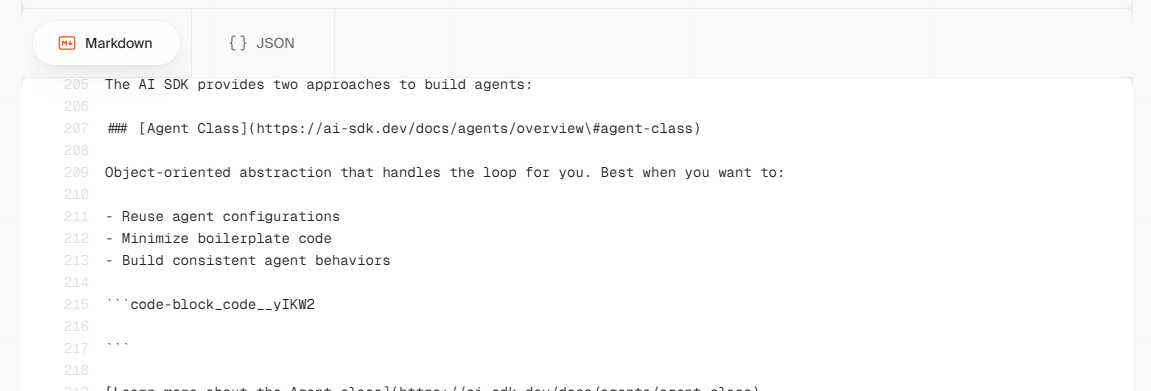

Why when I tried to scrape a page with pre tag / code block it's always empty in the markdown?

Clarification on Firecrawl Data Servers, Retention, and Deletion

User Segmentation and pincode level support

How can I make sure that my self-hosted Firecrawl can handle a large number of requests?

crawling isn't getting accurate data from site

Extract all PDF URLs from webpage

How to set concurrency for Firecrawl self-hosted

Does the self-hosted have a rate-limiter?

100% of URLs Domain?

JSON SCHEMA NOT WORKING

n8n - looping through 300 records to search each result - 500 error