Welcome to the official Cloudflare Developers server. Here you can ask for help and stay updated with the latest news


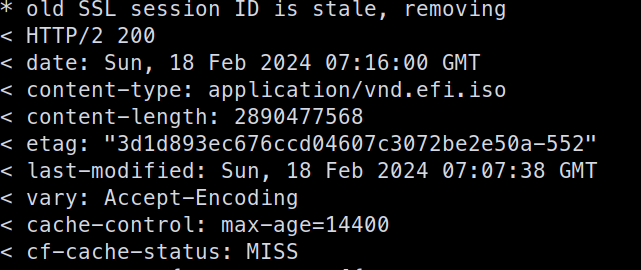

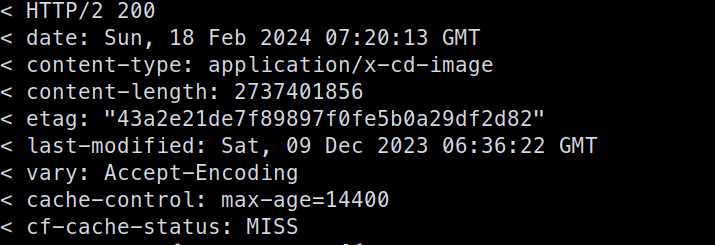

I am using rclone to upload files to my S3 bucket, and today I found a very interesting issue. When rclone uses a multipart upload, the ETag value returned is not a valid MD5 sum of the file, but when I use a single-part upload, the ETag is a valid MD5 sum of the uploaded file. Is this intentional? I have never seen such behavior with other S3-compatible storage providers. I am attaching two screenshots for reference: the first shows the ETag of a file uploaded with multipart upload, and the second shows the ETag of a file uploaded in a single part (no multipart).
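For anyone comparing the two ETags: on S3-style APIs, a multipart ETag is commonly observed to be the MD5 of the concatenated binary MD5 digests of each part, followed by `-` and the part count, so it will not match a plain MD5 of the file. The sketch below assumes R2 follows that convention, which is not confirmed in this thread. The function names are hypothetical, and `part_size` has to match whatever the client actually used (for rclone, the `--s3-chunk-size` setting).

```python
import hashlib


def single_part_etag(data: bytes) -> str:
    """Plain MD5 hex digest, as seen on single-part uploads."""
    return hashlib.md5(data).hexdigest()


def multipart_etag(data: bytes, part_size: int) -> str:
    """ETag in the style commonly observed for S3 multipart uploads.

    Assumption: the service hashes each part with MD5, concatenates the
    raw (binary) digests, hashes that again, and appends "-<part count>".
    """
    if part_size <= 0:
        raise ValueError("part_size must be positive")

    # MD5 digest of each fixed-size part (the last part may be shorter).
    part_digests = [
        hashlib.md5(data[offset:offset + part_size]).digest()
        for offset in range(0, len(data), part_size)
    ]
    # Treat empty input as a single empty part so the count is never zero.
    if not part_digests:
        part_digests = [hashlib.md5(b"").digest()]

    combined = hashlib.md5(b"".join(part_digests)).hexdigest()
    return f"{combined}-{len(part_digests)}"


if __name__ == "__main__":
    payload = b"x" * 25
    print(single_part_etag(payload))
    print(multipart_etag(payload, part_size=10))  # ends with "-3"
```

If the value computed this way matches the ETag in the first screenshot, the multipart ETag is a checksum of part checksums rather than a corrupted MD5.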


Tiered Cache currently is not compatible with responses from R2. These responses will act as if Tiered Cache is not configured.

Yep, I'll do that.

