Thanks for the suggestion.

I have followed the tutorial and re-trained the Mistral model using the suggested Jupyter notebook:

https://github.com/huggingface/autotrain-advanced/blob/main/colabs/AutoTrain_LLM.ipynb

my

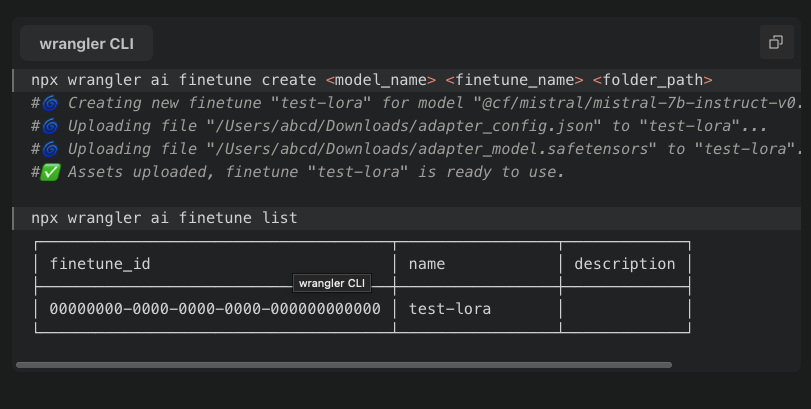
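The config is only a screenshot, so comparing it with the mlx_lm adapter is easier in plain text. The following is a minimal sketch that prints the relevant fields of an adapter_config.json. It assumes the PEFT-style key names (`r`, `lora_alpha`, `target_modules`, ...) that Hugging Face tooling writes. An mlx_lm config may use other keys, and those entries simply come back as `None`. The `r <= 8` threshold is only the hypothesis raised at the end of this post, not a documented Workers AI limit.

```python
import json
from pathlib import Path

# Hypothetical limit: "r <= 8" is only the guess raised in this thread,
# not a documented Workers AI requirement.
MAX_RANK_GUESS = 8

# PEFT-style key names written by Hugging Face tooling such as AutoTrain.
# An mlx_lm adapter config may use other keys; missing ones come back as None.
LORA_KEYS = ["peft_type", "base_model_name_or_path", "r", "lora_alpha",
             "lora_dropout", "target_modules", "bias", "task_type"]


def load_config(path):
    """Read adapter_config.json (or any JSON file) into a dict."""
    return json.loads(Path(path).read_text(encoding="utf-8"))


def summarize(config):
    """Pick out the LoRA fields plus any key mentioning quantization."""
    summary = {key: config.get(key) for key in LORA_KEYS}
    # Surface quantization-related keys, whatever the training tool named them.
    summary.update({k: v for k, v in config.items() if "quant" in k.lower()})
    return summary


def rank_within_guess(summary, max_rank=MAX_RANK_GUESS):
    """True only if an integer rank is present and does not exceed max_rank."""
    rank = summary.get("r")
    return isinstance(rank, int) and rank <= max_rank


if __name__ == "__main__":
    path = Path("adapter_config.json")
    if path.exists():
        summary = summarize(load_config(path))
        for key, value in summary.items():
            print(f"{key}: {value}")
        print("rank within guessed limit:", rank_within_guess(summary))
    else:
        print(f"{path} not found")
```

Running it once per adapter directory gives two text listings that can be diffed directly.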



Do you notice anything wrong? Because now, when I try to upload my `adapter_model.safetensors`, I receive a new error from Wrangler:

`✘ [ERROR] 🚨 Couldn't upload file: A request to the Cloudflare API (/accounts/1111122223334444/ai/finetunes/6a4a4a4a4a4a4a4a4-a5aa5a5a-aaaaaa/finetune-assets) failed. FILE_PARSE_ERROR: 'file' should be of valid safetensors type [code: 1000], quiting...`

So to recap my tests:

- The adapter previously trained using mlx_lm (https://github.com/ml-explore/mlx-examples, https://huggingface.co/docs/hub/en/mlx) is accepted during the fine-tune upload/creation process, but it generates the error `InferenceUpstreamError: ERROR 3028: Unknown internal error` when I try to run an inference.
- The adapter trained using AutoTrain from Hugging Face is not accepted during the fine-tune upload/creation process, giving me the `FILE_PARSE_ERROR` described above.
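Because the AutoTrain upload fails with `FILE_PARSE_ERROR: 'file' should be of valid safetensors type`, it may help to rule out a truncated or malformed file locally before re-uploading. The following is a minimal sketch using only the Python standard library. It follows the published safetensors layout: an 8-byte little-endian header length, then a JSON header, then the tensor data. It does not reproduce whatever validation the Cloudflare endpoint actually performs, so a clean result is a necessary check rather than a sufficient one. The function names are hypothetical.

```python
import json
import struct
from pathlib import Path


def check_safetensors_bytes(data):
    """Return a list of layout problems; an empty list means none were found.

    Layout checked: 8-byte little-endian unsigned header length N, then N
    bytes of UTF-8 JSON, then the raw tensor data buffer.
    """
    if len(data) < 8:
        return ["file shorter than the 8-byte header length prefix"]
    (header_len,) = struct.unpack("<Q", data[:8])
    if 8 + header_len > len(data):
        return [f"header length {header_len} exceeds file size {len(data)}"]
    try:
        header = json.loads(data[8:8 + header_len].decode("utf-8"))
    except (UnicodeDecodeError, json.JSONDecodeError) as exc:
        return [f"header is not valid UTF-8 JSON: {exc}"]
    if not isinstance(header, dict):
        return ["header JSON is not an object"]

    problems = []
    buffer_len = len(data) - 8 - header_len
    max_end = 0
    for name, info in header.items():
        if name == "__metadata__":  # optional str -> str map, no tensor data
            continue
        offsets = info.get("data_offsets") if isinstance(info, dict) else None
        if not (isinstance(offsets, list) and len(offsets) == 2
                and all(isinstance(o, int) for o in offsets)):
            problems.append(f"{name}: missing or malformed data_offsets")
            continue
        begin, end = offsets
        # Offsets are relative to the start of the data buffer.
        if not 0 <= begin <= end <= buffer_len:
            problems.append(f"{name}: offsets {offsets} outside a {buffer_len}-byte buffer")
            continue
        max_end = max(max_end, end)
    # Bytes after the last tensor are not covered by any tensor.
    if not problems and max_end != buffer_len:
        problems.append(f"data buffer is {buffer_len} bytes, tensors end at {max_end}")
    return problems


def check_safetensors(path):
    """Read a file from disk and check its safetensors layout."""
    return check_safetensors_bytes(Path(path).read_bytes())


if __name__ == "__main__":
    path = Path("adapter_model.safetensors")
    if path.exists():
        print(check_safetensors(path) or "header layout looks consistent")
    else:
        print(f"{path} not found")
```

Running it on both adapter files would show whether the AutoTrain output differs from the mlx_lm one at the file-format level at all.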

… rank r <= 8 or quantization = None) that has not been specified in the documentation?

Thanks again for the support
