any plans to increase limits of non beta model? i mean having no limits

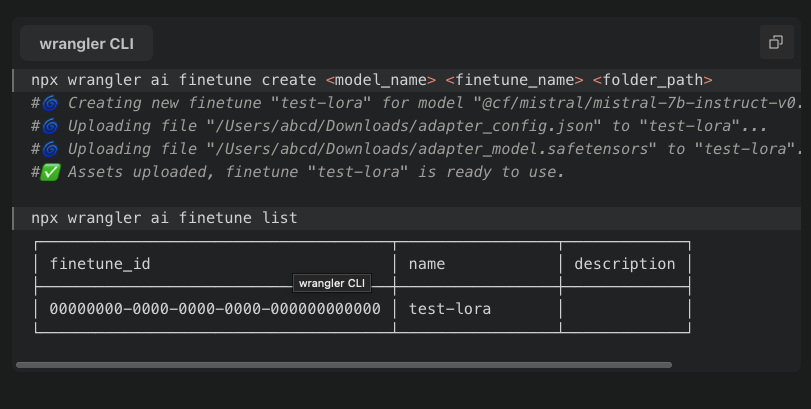

adapter_config.json

adapter_model.safetensors

✘ [ERROR] 🚨 Couldn't upload file: A request to the Cloudflare API (/accounts/1111122223334444/ai/finetunes/6a4a4a4a4a4a4a4a4-a5aa5a5a-aaaaaa/finetune-assets) failed. FILE_PARSE_ERROR: 'file' should be of valid safetensors type [code: 1000], quiting...

InferenceUpstreamError: ERROR 3028: Unknown internal error
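For anyone hitting the FILE_PARSE_ERROR above, a quick local check of the file layout might help rule out a corrupted or mislabeled file before uploading. This is only a sketch: it relies on the published safetensors layout (an 8-byte little-endian header length followed by a JSON header), the function name `checkSafetensors` is made up for illustration, and it does not reproduce whatever validation the Cloudflare API actually runs.

```typescript
// Hypothetical helper: returns null if the bytes look like a safetensors
// container, otherwise a short description of what looks wrong.
function checkSafetensors(bytes: Uint8Array): string | null {
  if (bytes.byteLength < 8) {
    return "file is shorter than the 8-byte length prefix";
  }
  const view = new DataView(bytes.buffer, bytes.byteOffset, bytes.byteLength);
  // The header length is an unsigned 64-bit little-endian integer.
  const low = view.getUint32(0, true);
  const high = view.getUint32(4, true);
  const headerLen = high * 4294967296 + low;
  if (headerLen === 0 || headerLen > bytes.byteLength - 8) {
    return "header length does not fit inside the file";
  }
  const headerText = new TextDecoder().decode(bytes.subarray(8, 8 + headerLen));
  try {
    const header: unknown = JSON.parse(headerText);
    if (typeof header !== "object" || header === null || Array.isArray(header)) {
      return "header is not a JSON object";
    }
  } catch {
    return "header is not valid JSON";
  }
  return null;
}
```

If this returns null and the upload still fails, the problem is probably not the container format itself (for example it could be the adapter settings), but that is a guess rather than something the error message confirms.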

rank r <=8 or quantization = None

AutoTrain Advanced (huggingface/autotrain-advanced on GitHub)

--target_modules q_proj,v_proj
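To sanity-check an adapter before uploading, something like the sketch below could be run against the parsed adapter_config.json. The `r` and `target_modules` keys are the ones PEFT writes into that file; the limit of 8 and the `q_proj`/`v_proj` list are taken from the messages above and are assumptions here, not confirmed platform rules. `checkAdapterConfig` is a hypothetical name.

```typescript
// Only the fields this sketch looks at; a real adapter_config.json has more.
interface AdapterConfig {
  r?: number;
  target_modules?: string[];
}

// Returns a list of problems; an empty list means nothing obvious was found.
function checkAdapterConfig(
  cfg: AdapterConfig,
  maxRank: number = 8, // assumption: from "rank r <=8" above
  allowedModules: string[] = ["q_proj", "v_proj"], // assumption: from the flag above
): string[] {
  const problems: string[] = [];
  if (typeof cfg.r !== "number") {
    problems.push("missing rank r");
  } else if (cfg.r > maxRank) {
    problems.push(`rank r=${cfg.r} exceeds ${maxRank}`);
  }
  const modules = cfg.target_modules ?? [];
  const extra = modules.filter((m) => allowedModules.indexOf(m) === -1);
  if (extra.length > 0) {
    problems.push(`unexpected target_modules: ${extra.join(", ")}`);
  }
  return problems;
}
```

For example, `checkAdapterConfig(JSON.parse(text))` on a config trained with r=16 would report the rank problem.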

@cf/meta/llama-3-8b-instruct

@cf/meta/llama-2-7b-chat-fp16

Active Models

What is the best way to calculate neuron usage at runtime in worker?

FILE_PARSE_ERROR

Error: no available backend found. ERR: [wasm] RuntimeError: Aborted(both async and sync fetching of the wasm failed)

A change to the Workers Runtime must never break an application that is live in production.

This could be much clearer for first-time users.

env.AI.run()

ctx.passThroughOnException

[wrangler:err] InferenceUpstreamError: AiError: undefined: ERROR 3001: Unknown internal error
    at Ai.run (cloudflare-internal:ai-api:66:23)
    at async Object.fetch (file:///C:/Users/Fathan/PetProject/cloudflare-demo/src/index.ts:15:21)
    at async jsonError (file:///C:/Users/Fathan/PetProject/cloudflare-demo/node_modules/wrangler/templates/middleware/middleware-miniflare3-json-error.ts:22:10)
    at async drainBody (file:///C:/Users/Fathan/PetProject/cloudflare-demo/node_modules/wrangler/templates/middleware/middleware-ensure-req-body-drained.ts:5:10)

[wrangler:inf] POST / 500 Internal Server Error (2467ms)

const stream = await retrivalChain.stream({
  input: 'what is hello world',
});
return new Response(stream, {
  headers: {
    'content-type': 'text/event-stream',
    'Access-Control-Allow-Origin': '*',
  },
});

Argument of type 'IterableReadableStream<{ context: Document<Record<string, any>>[]; answer: string; } & { [key: string]: unknown; }>' is not assignable to parameter of type 'BodyInit | null | undefined'.
  Type 'IterableReadableStream<{ context: Document<Record<string, any>>[]; answer: string; } & { [key: string]: unknown; }>' is not assignable to type 'ReadableStream<Uint8Array>'.
    The types returned by 'getReader()' are incompatible between these types.
      Type 'ReadableStreamDefaultReader<{ context: Document<Record<string, any>>[]; answer: string; } & { [key: string]: unknown; }>' is not assignable to type 'ReadableStreamDefaultReader<Uint8Array>'.
        Type '{ context: Document<Record<string, any>>[]; answer: string; } & { [key: string]: unknown; }' is missing the following properties from type 'Uint8Array': BYTES_PER_ELEMENT, buffer, byteLength, byteOffset, and 27 more. ts(2345)
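The compiler error above says the chain yields plain objects while `Response` wants bytes, so one possible fix is to serialize each chunk before handing the stream over. A minimal sketch, assuming the chunk shape shown in the error and that the chain's stream can be treated as a standard `ReadableStream` of those objects; `ChainChunk`, `toSseFrame` and `toSseByteStream` are names invented here.

```typescript
// Assumed chunk shape, loosely copied from the compiler error.
type ChainChunk = { context?: unknown[]; answer?: string; [key: string]: unknown };

// Format one chunk as a server-sent event frame: "data: <json>" plus a blank line.
function toSseFrame(chunk: ChainChunk): string {
  return `data: ${JSON.stringify(chunk)}\n\n`;
}

// Turn a stream of objects into a stream of UTF-8 bytes that Response accepts.
function toSseByteStream(source: ReadableStream<ChainChunk>): ReadableStream<Uint8Array> {
  const encoder = new TextEncoder();
  return source.pipeThrough(
    new TransformStream<ChainChunk, Uint8Array>({
      transform(chunk, controller) {
        controller.enqueue(encoder.encode(toSseFrame(chunk)));
      },
    }),
  );
}
```

With that in place, `new Response(toSseByteStream(stream), { headers })` should type-check with the same headers as before. If only the answer text is wanted on the client, the transform could send `chunk.answer` instead of the whole object.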

const stream: IterableReadableStream<{
  context: Document<Record<string, any>>[];
  answer: string;
} & {
  [key: string]: unknown;
}>

try {
  await env.AI.run(...)
} catch (e) {
  return new Response('handle errors here')
}
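A slightly fuller version of that try/catch might look like the sketch below. The `AiBinding` interface is a stand-in that only mirrors the `run(model, inputs)` call shape, the 502 status and the JSON error body are arbitrary choices, and `runOrFail` and `errorMessage` are hypothetical names. The model ID is one of the two mentioned earlier in the thread.

```typescript
// Minimal stand-in for the Workers AI binding (assumption: only run() is needed).
interface AiBinding {
  run(model: string, inputs: Record<string, unknown>): Promise<unknown>;
}

// Turn any thrown value into a short message.
function errorMessage(e: unknown): string {
  return e instanceof Error ? `${e.name}: ${e.message}` : String(e);
}

// Run inference and always return a Response, even when the call throws.
async function runOrFail(ai: AiBinding, prompt: string): Promise<Response> {
  const headers = { "content-type": "application/json" };
  try {
    const result = await ai.run("@cf/meta/llama-3-8b-instruct", { prompt });
    return new Response(JSON.stringify(result), { headers });
  } catch (e) {
    // Upstream failures such as "ERROR 3001: Unknown internal error" end up here.
    return new Response(JSON.stringify({ error: errorMessage(e) }), {
      status: 502,
      headers,
    });
  }
}
```

Inside a Worker's `fetch` handler this would be called as `return runOrFail(env.AI, prompt)`, so the client gets a readable error instead of a bare 500.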