you'd probably want to just:

```js
try {
  await env.AI.run(...)
} catch (e) {
  return new Response('handle errors here')
}
```

and then consider retrying on errors if it makes sense.

`passThroughOnException` is more intended for if you have an origin behind the worker.

`max_tokens`
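On the try/catch and retry suggestion above, here's a rough sketch of what that could look like. `runWithRetry` and `handle` are hypothetical helpers made up for illustration, and the attempt count and delay are arbitrary, not anything Workers AI recommends. It also assumes the error is worth retrying at all, which won't be true for things like bad input.

```javascript
// Hypothetical helper: retries an async call a few times before giving up.
// `attempts` and `delayMs` are illustrative values, not recommended settings.
async function runWithRetry(fn, attempts = 3, delayMs = 200) {
  let lastError;
  for (let i = 0; i < attempts; i++) {
    try {
      return await fn();
    } catch (e) {
      lastError = e;
      // Wait a bit longer after each failure, but not after the final one.
      if (i < attempts - 1) {
        await new Promise((resolve) => setTimeout(resolve, delayMs * (i + 1)));
      }
    }
  }
  throw lastError;
}

// How it might be used from a fetch handler. `model` and `inputs` are
// placeholders for whatever you already pass to env.AI.run.
async function handle(env, model, inputs) {
  try {
    const result = await runWithRetry(() => env.AI.run(model, inputs));
    return new Response(JSON.stringify(result));
  } catch (e) {
    return new Response('handle errors here', { status: 500 });
  }
}
```

If every attempt fails you still end up in the `catch`, so the error response stays in one place.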

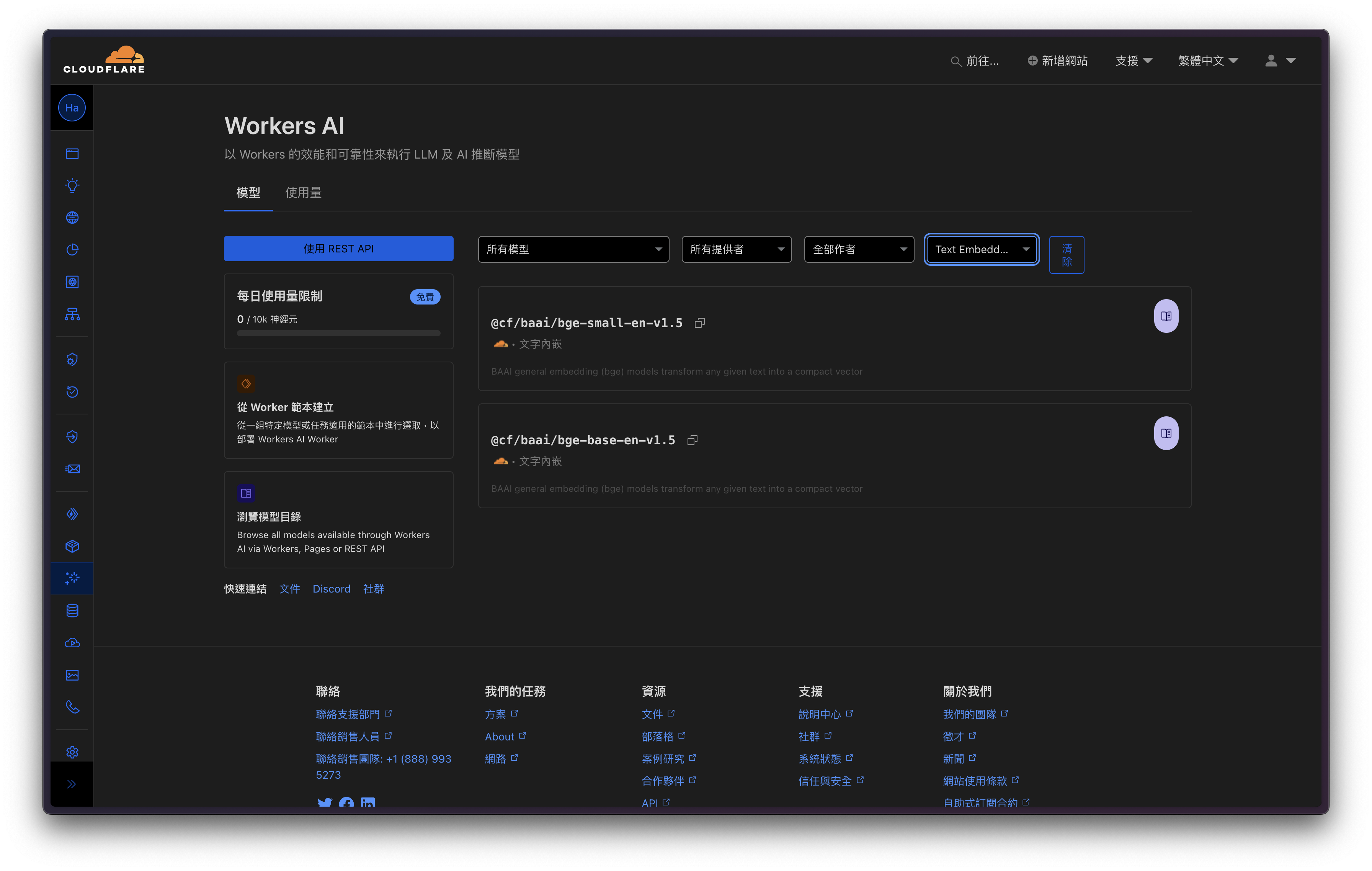

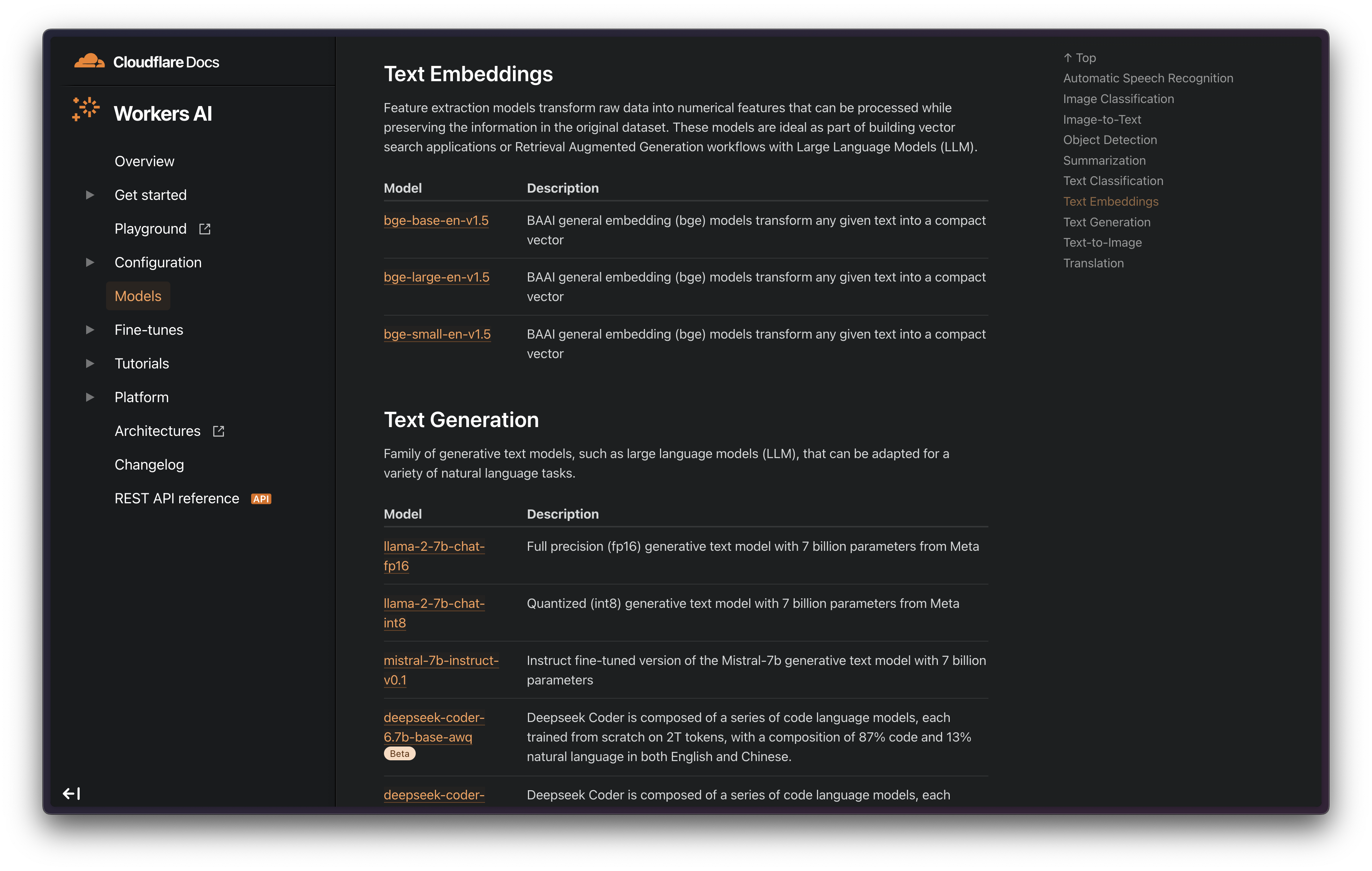

`@cf/qwen/qwen1.5-7b-chat-awq` has a context length way above 8k. Managed to chop up and squeeze in ~20k tokens, ~100k characters, and ask a question about a detail buried in the beginning of the text.

`@cf/llava-hf/llava-1.5-7b-hf` and `@cf/unum/uform-gen2-qwen-500m` accept an image as input.

`bge-large-en-v1.5` appears on the model list, but not on the dashboard.
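For the image-input models, a minimal sketch of building the request might look like this. It assumes the model takes the image as a plain array of byte values next to a `prompt`, which is my understanding of the input shape and worth checking against the model's schema. `buildImageInput` and `describeImage` are hypothetical names, and the `max_tokens` value is arbitrary.

```javascript
// Hypothetical helper: turns raw image bytes into the assumed input shape
// { image: number[], prompt: string, max_tokens: number }.
function buildImageInput(arrayBuffer, prompt, maxTokens = 256) {
  return {
    // Assumption: the model expects the image as an array of 0-255 integers.
    image: [...new Uint8Array(arrayBuffer)],
    prompt,
    max_tokens: maxTokens,
  };
}

// Possible usage inside a Worker, with `imageUrl` as a placeholder.
async function describeImage(env, imageUrl) {
  const res = await fetch(imageUrl);
  const input = buildImageInput(await res.arrayBuffer(), 'Describe this image');
  return env.AI.run('@cf/llava-hf/llava-1.5-7b-hf', input);
}
```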

`@cf/openchat/openchat-3.5-0106`, `@cf/qwen/qwen1.5-14b-chat-awq`, `@hf/google/gemma-7b-it` are also gone. Seems like the dashboard has a limit of 50 models.

`eval` or `new Function` cannot be used in Workers for security reasons. Based on the error message I assume it's trying to do some sort of schema validation, which often uses `new Function`.

[ERROR] Error compiling schema, function code: const schema2 = scope.schema[2];const schema1 = scope.schema[1]......

X [ERROR] Error in fetch handler: EvalError: Code generation from strings disallowed for this context
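If the schema validation is something you control, one way to sidestep the `EvalError` is to validate with plain code instead of a validator that compiles schemas at runtime. A minimal sketch, where `validateInput` and the `{ prompt, max_tokens }` shape are made up for illustration and are not taken from the library that produced the error:

```javascript
// Hypothetical hand-written validator: no eval, no new Function,
// so it does not trigger code generation from strings.
// Returns a list of problems; an empty list means the input looks fine.
function validateInput(input) {
  if (typeof input !== 'object' || input === null) {
    return ['input must be an object'];
  }
  const errors = [];
  if (typeof input.prompt !== 'string' || input.prompt.length === 0) {
    errors.push('prompt must be a non-empty string');
  }
  if (input.max_tokens !== undefined && !Number.isInteger(input.max_tokens)) {
    errors.push('max_tokens must be an integer');
  }
  return errors;
}
```

If the compile step lives inside a dependency, this won't help directly. In that case it might be worth checking whether the library can precompile its schemas at build time.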