If it's a text generation model, you can lower `max_tokens`.
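Here is a minimal TypeScript sketch of capping output length. It assumes the Workers AI binding is exposed as `env.AI` and that the model takes a plain `prompt` input. The `AiBinding` interface and the `runWithTokenLimit` helper are hypothetical names used only for illustration. The only parts taken from Workers AI are `run(model, inputs)` and the `max_tokens` input. The default of 256 is an arbitrary example value, not a documented default.

```typescript
// Minimal shape of the Workers AI binding used here (assumption: in a real
// Worker this is `env.AI`, with the binding name set in the wrangler config).
interface AiBinding {
  run(model: string, inputs: Record<string, unknown>): Promise<unknown>;
}

// Hypothetical helper: call a text generation model with a capped output length.
async function runWithTokenLimit(
  ai: AiBinding,
  model: string,
  prompt: string,
  maxTokens = 256, // arbitrary example cap
): Promise<unknown> {
  // `max_tokens` caps how many tokens the model may generate, so a lower
  // value means a shorter response.
  return ai.run(model, { prompt, max_tokens: maxTokens });
}
```

Inside a Worker's `fetch` handler you would call it as `runWithTokenLimit(env.AI, "@cf/qwen/qwen1.5-7b-chat-awq", prompt, 128)`. Passing the binding in as a parameter keeps the helper testable with a stub.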


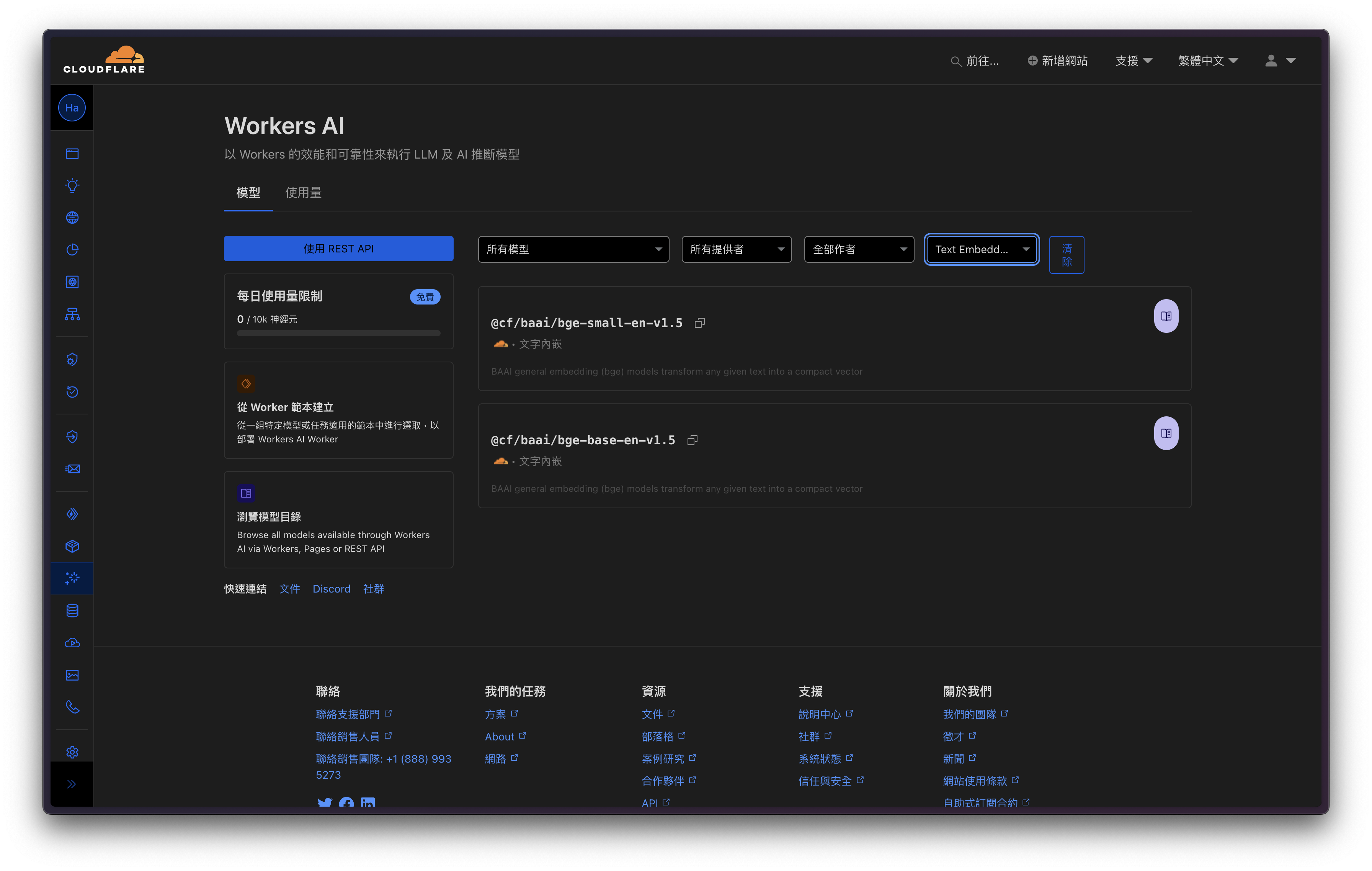

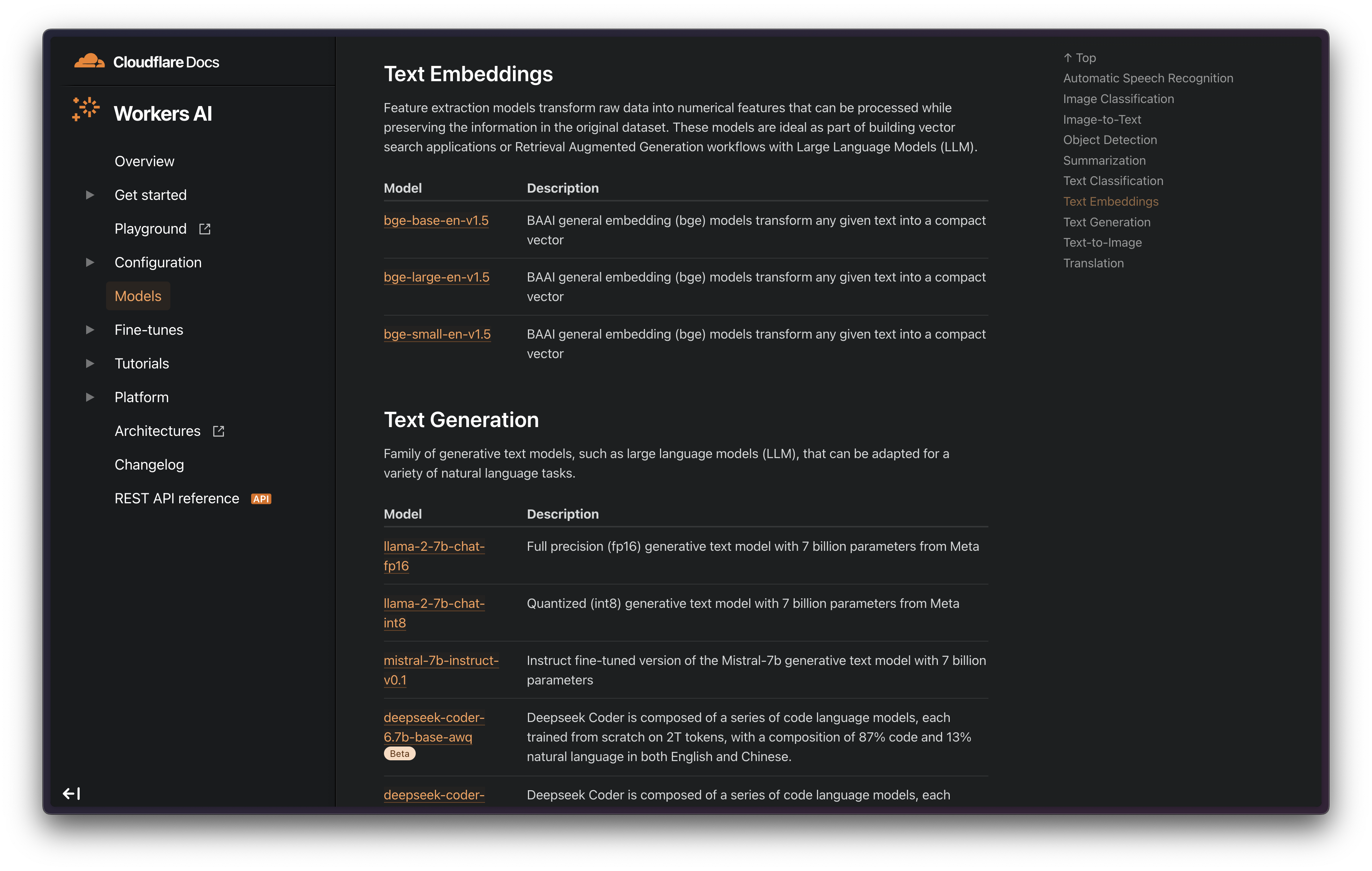

- `@cf/qwen/qwen1.5-7b-chat-awq`
- `@cf/llava-hf/llava-1.5-7b-hf`
- `@cf/unum/uform-gen2-qwen-500m`
- `bge-large-en-v1.5`
- `@cf/openchat/openchat-3.5-0106`
- `@cf/qwen/qwen1.5-14b-chat-awq`
- `@hf/google/gemma-7b-it`

`eval`, `new Function`

POST http://workers-binding.ai/run?version=3 - Canceled @ 24/05/2024, 06:28:16

[ERROR] Error compiling schema, function code: const schema2 = scope.schema[2];const schema1 = scope.schema[1]......

X [ERROR] Error in fetch handler: EvalError: Code generation from strings disallowed for this context
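The `EvalError` means the runtime blocked code generation from strings (`eval` / `new Function`). The schema error before it dumps generated function source, which suggests the validator compiles schemas into JavaScript at runtime. A small feature-detection sketch can confirm whether a given runtime allows this before you start debugging the schema itself. The helper name `canGenerateCodeFromStrings` is hypothetical.

```typescript
// Hypothetical helper: report whether this runtime allows generating code
// from strings. Where it is disallowed, V8 throws
// `EvalError: Code generation from strings disallowed for this context`.
function canGenerateCodeFromStrings(): boolean {
  try {
    // Build and invoke a trivial function from source text.
    const fn = new Function("return 1 + 1;") as () => number;
    return fn() === 2;
  } catch (err) {
    // The runtime blocked code generation: the same error class as in the log.
    if (err instanceof EvalError) return false;
    // Anything else is unexpected, so rethrow it instead of hiding it.
    throw err;
  }
}
```

In an environment that blocks code generation this returns `false`. Under a default Node.js run it returns `true`.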