Hi, Issac. Is there a way to get a rough estimate of neuron usage for a single Stable Diffusion inference?

@hf/thebloke/llamaguard-7b-awq is a bit outdated: it still uses Llama 2 and it's not very smart. What about upgrading it to https://huggingface.co/meta-llama/Llama-Guard-3-8B, which uses Llama 3.1? And while at it, perhaps also add https://huggingface.co/meta-llama/Prompt-Guard-86M.

ai.run() request?

TypeError: The ReadableStream has been locked to a reader. prevent updateRecentImages works
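One common cause of this TypeError is handing the same stream to two consumers, for example a background task and the returned Response. Below is a minimal sketch of splitting the stream first with the standard `ReadableStream.tee()` method. It assumes the model output is a `ReadableStream` (the error message suggests so); `splitImageStream` is a hypothetical helper name, not part of any Cloudflare API.

```typescript
// Hypothetical helper: splits one stream into two independently readable branches.
// After tee(), the original stream is locked and must not be read directly again.
function splitImageStream(
  image: ReadableStream<Uint8Array>
): { forResponse: ReadableStream<Uint8Array>; forBackground: ReadableStream<Uint8Array> } {
  const [forResponse, forBackground] = image.tee();
  return { forResponse, forBackground };
}
```

In a handler, `forBackground` could go to the background task (such as `updateRecentImages`) and `forResponse` to `new Response(...)`, so neither consumer finds the stream already locked. Note that `tee()` buffers data until both branches have been read.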

top_p or repetition_penalty value to 0: Cloudflare API Error. data: [DONE] event, 500 "Cloudflare API error". top_p & repetition_penalty is 0, so ideally we shouldn't get any error. Model: @cf/meta/llama-3.1-8b-instruct-fp8
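Until the cause of the 500 is known, one possible client-side workaround is to omit sampling parameters that are exactly 0 so the model falls back to its defaults. This is only a sketch: `dropZeroSamplingParams` is a hypothetical helper, and the thread does not confirm that omitting these fields avoids the error.

```typescript
// Request fields mentioned in the report; anything else is passed through untouched.
type SamplingInput = {
  prompt: string;
  top_p?: number;
  repetition_penalty?: number;
  [key: string]: unknown;
};

// Hypothetical helper (not part of the Workers AI API): returns a copy of the
// input without top_p / repetition_penalty when they are exactly 0.
function dropZeroSamplingParams(input: SamplingInput): SamplingInput {
  const cleaned: SamplingInput = { ...input };
  for (const key of ["top_p", "repetition_penalty"] as const) {
    if (cleaned[key] === 0) {
      delete cleaned[key];
    }
  }
  return cleaned;
}
```

The cleaned object can then be passed as the second argument to `env.AI.run(...)` in place of the raw request input.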

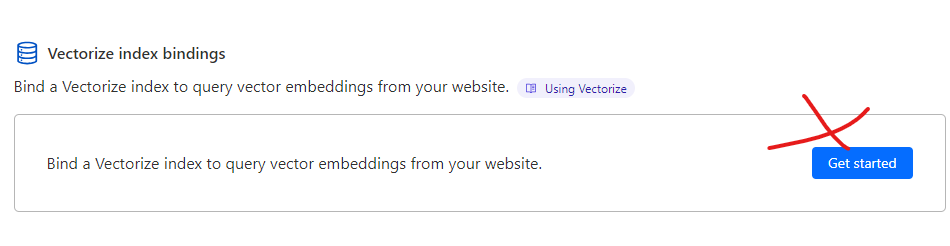

const embeddingResult = await env.AI.run('@cf/baai/bge-large-en-v1.5', {
  text: value,
});
const embeddingBatch: number[][] = embeddingResult.data;
await env.VECTORIZE.upsert(
  embeddingBatch.map((embedding, index) => ({
    id: sourceId,
    values: embedding,
    namespace: 'default',
    metadata: {
      id: sessionId,
    },
  }))
);

export default {

  async fetch(request, env) {
    const inputs = {
      prompt: "create an image that is 512x512. the background should be a solid, plain, yellow color. text over the background should say 'Learn How to Pronounce MySQL' in English. Text should be red and use an Arial font. ",
      negative_prompt: "There should not be any other effects or images.",
      height: 512,
      width: 1024
    };
    const response = await env.AI.run(
      "@cf/bytedance/stable-diffusion-xl-lightning",
      inputs
    );
    return new Response(response, {
      headers: {
        "content-type": "image/png",
      },
    });
  },
};

const response = await env.AI.run('@cf/meta/llama-2-7b-chat-int8', {

  prompt: "tell me a joke about cloudflare",
});

const response = await ai.run(model || "@cf/stabilityai/stable-diffusion-xl-base-1.0", requestInput);

// Store the image name in R2, in background
ctx.waitUntil(updateRecentImages(imageName, (await generateThumbs(imageName, '400x', true)), input, env, response));
return new Response(response, {
  headers: {
    "content-type": "image/png",
  },
});

{
  "errors": [
    {
      "message": "Server Error",
      "code": 6001
    }
  ],
  "success": false,
  "result": {},
  "messages": []
}
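When calling the API over HTTP, an error body like the one above can be checked before the result is used. The following is a rough sketch: the `ApiEnvelope` interface only mirrors the fields visible in that response, and `describeApiFailure` is a hypothetical helper name.

```typescript
// Shape taken from the error body shown above; no other fields are assumed.
interface ApiEnvelope {
  success: boolean;
  errors: { message: string; code: number }[];
  result: unknown;
  messages: unknown[];
}

// Hypothetical helper: returns a short summary of the failure, or null on success.
function describeApiFailure(body: ApiEnvelope): string | null {
  if (body.success) return null;
  if (body.errors.length === 0) return "request failed without error details";
  return body.errors.map((e) => `${e.code}: ${e.message}`).join("; ");
}
```

Logging the summary together with the model name and request parameters makes a report like this one easier to reproduce.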