another question: does the gateway support IP-based rate limiting?

•1/30/25, 6:36 PM

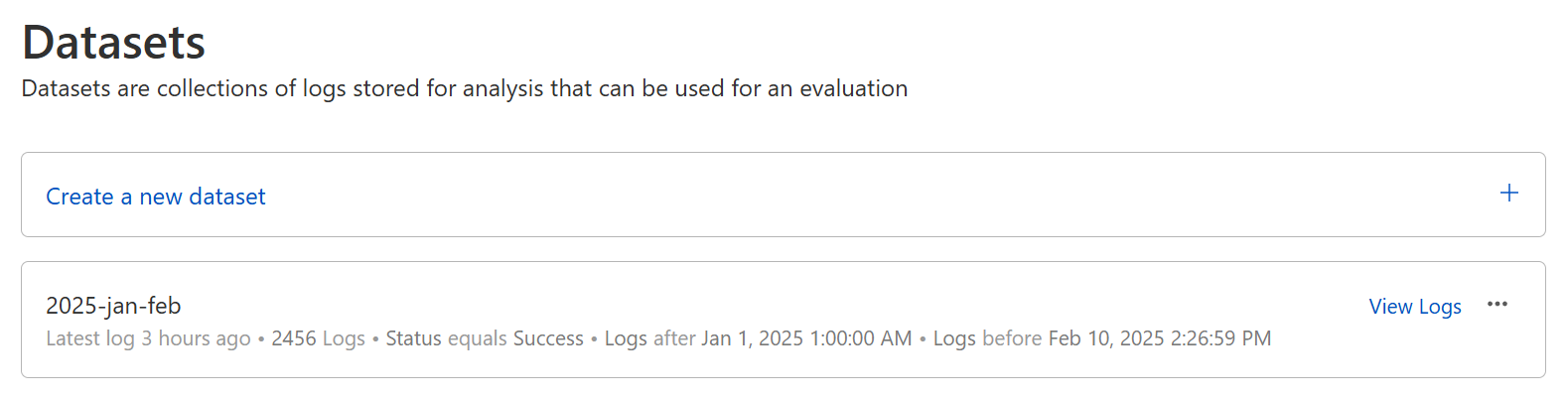

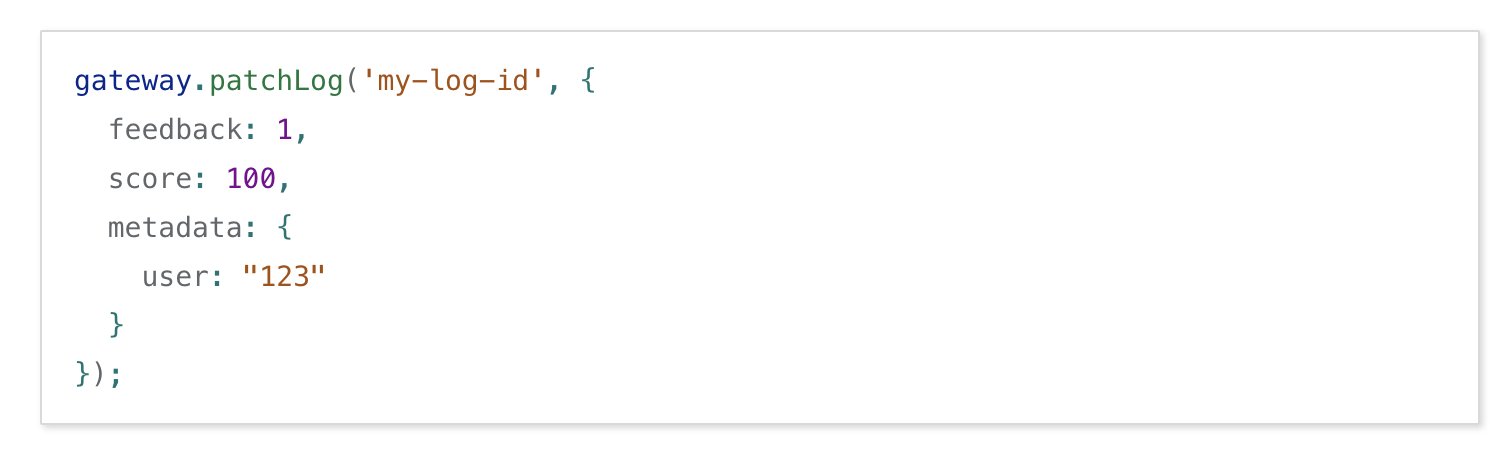

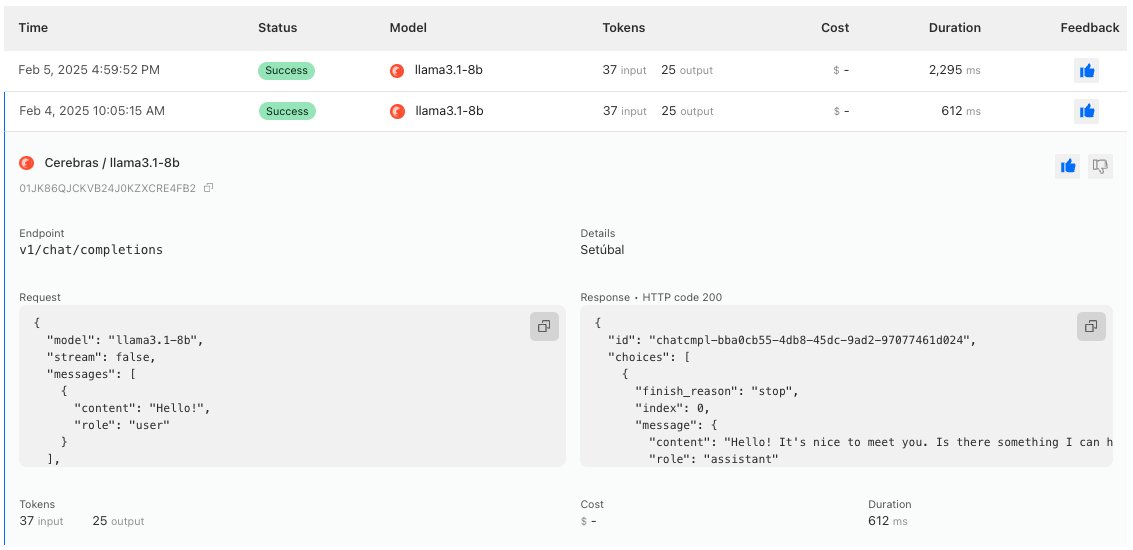

We've got 2 new exciting updates for AI Gateway!

•2/6/25, 10:55 PM

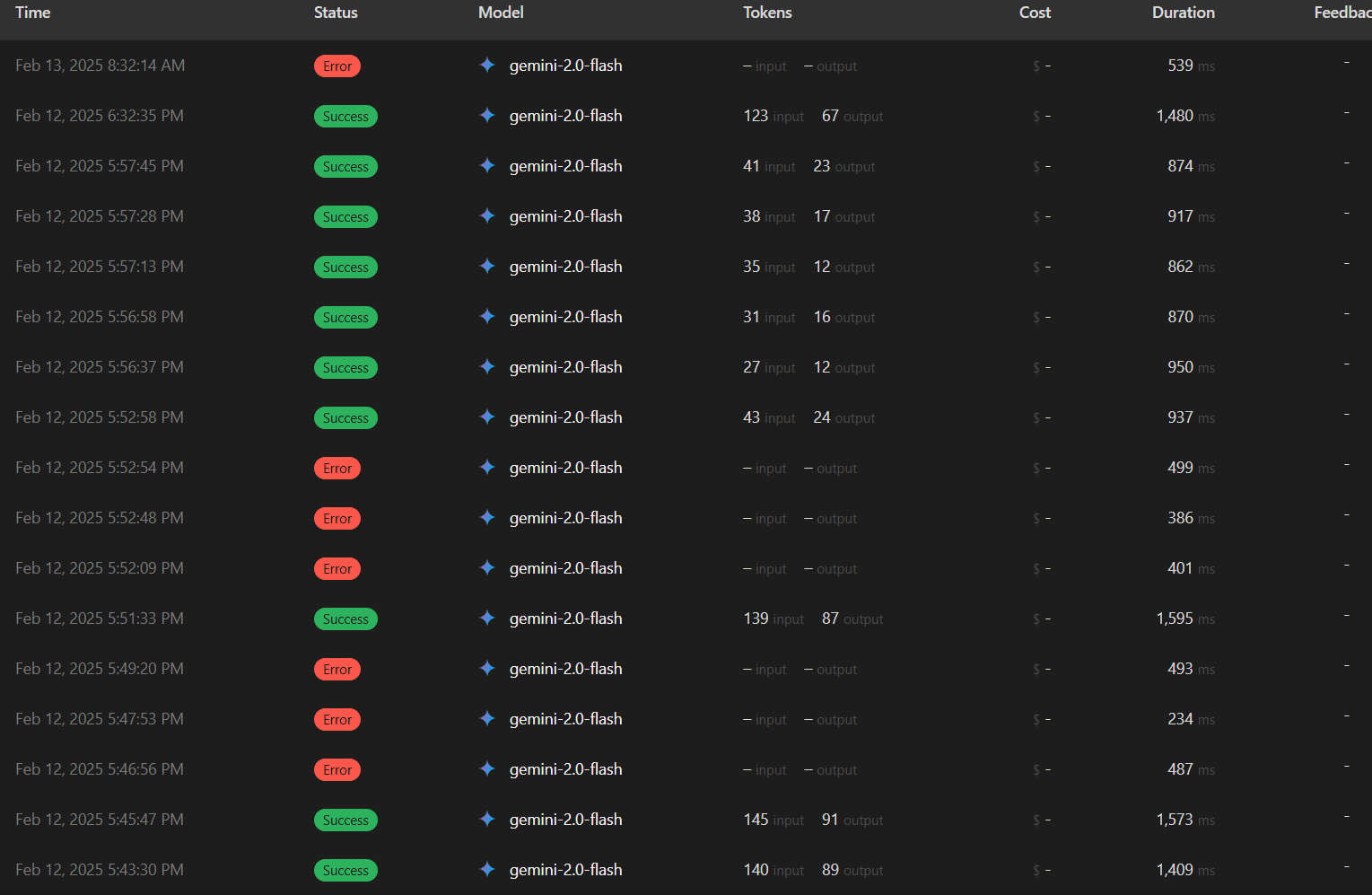

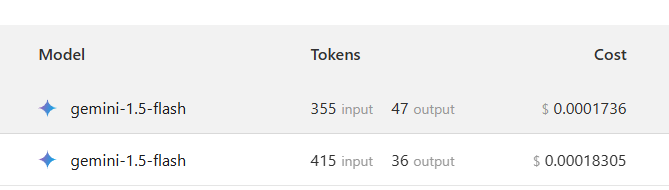

or am i missing something? is there a way to get usage out of it?